Copyright Ars Technica

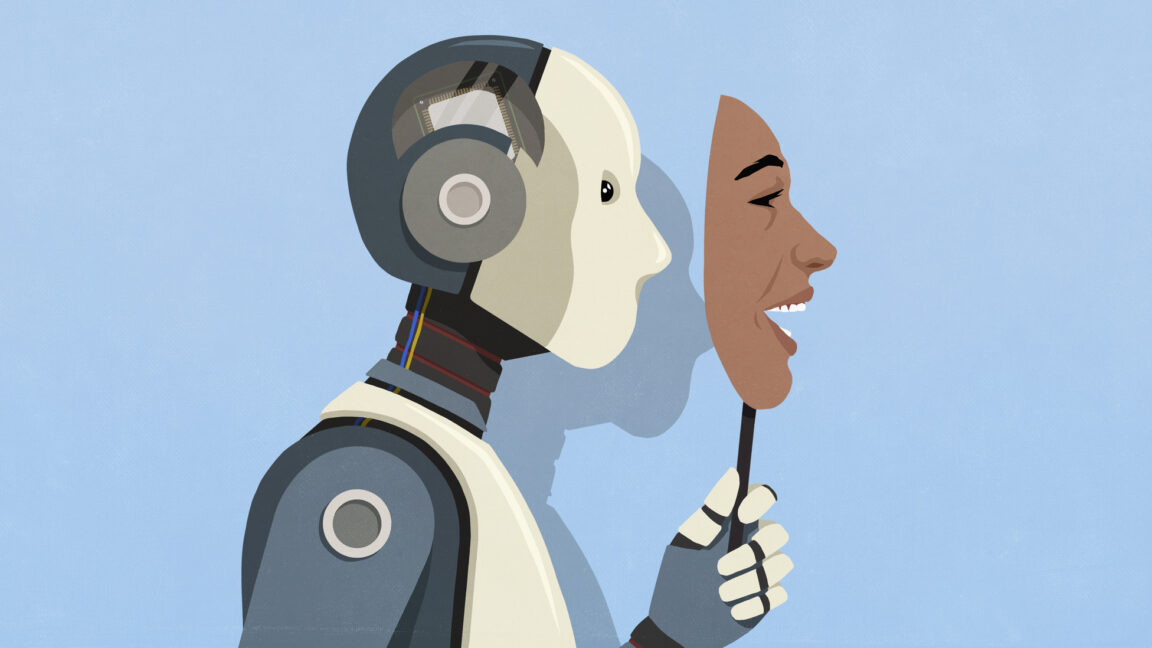

With a child in college and a spouse who’s a professor, I have front-row access to the unfolding debacle that is “higher education in the age of AI.” These days, students routinely submit even “personal reflection” papers that are AI-generated. (And routinely appear surprised when caught.) Read a paper longer than 10 pages? Not likely, even at elite schools. Toss that sucker into an AI tool and read a quick summary instead. It’s more efficient!

So the University of Illinois story that has been making the rounds on social media for the last week (and which bubbled up into The New York Times yesterday) caught my attention as an almost perfect encapsulation of the current higher ed experience… and of how frustrating it can be for everyone involved.

Data Science Discovery is an introductory course taught by statistics prof Karle Flanagan and the gloriously named computer scientist Wade Fagen-Ulmschneider, whose website features a logo that says, “Keep Nerding Out.” Attendance and participation count for a small portion of the course grade, and the profs track them with a tool called the Data Science Clicker. Students attending class each day are shown a QR code. Scanning it takes them to a multiple-choice question that appears to vary by person, and they have a limited window, around 90 seconds, to answer it.

A few weeks into this fall semester, the professors realized that far more students were answering the questions (and thus claiming to be “present”) than were actually in the lecture hall. (The course has more than 1,000 students, spread across multiple sections.) According to the Times, “The teachers said they started checking how many times students refreshed the site and the IP addresses of their devices, and began reviewing server logs.” Absent students were apparently being tipped off by classmates whenever the questions went live. Once the profs realized how widespread this was, they contacted the 100-ish students who seemed to be cheating.
“We reached out to them with a warning, and asked them, ‘Please explain what you just did,’” said Fagen-Ulmschneider in an Instagram video discussing the situation. Apologies came back from the students, first in a trickle, then in a flood. The profs were initially moved by this acceptance of responsibility and contrition… until they realized that 80 percent of the apologies were almost identically worded and appeared to be AI-generated.

So on October 17, Flanagan and Fagen-Ulmschneider took the class to task during lecture, displaying a mash-up image of the apologies, each bearing the same “sincerely apologize” phrase. No disciplinary action was taken against the students, and the whole situation was treated rather lightly, but the warning was real: stop doing this. Flanagan said she hoped it would be a “life lesson” for the students.

On a University of Illinois subreddit, students shared their own experiences of the same class and of AI use on campus. One student, claiming to be a teaching assistant for Data Science Discovery, said that in addition to not showing up, many students would use AI to solve the (relatively easy) problems. AI tools will often “use functions that weren’t taught in class,” which gave the game away pretty easily. Another TA claimed that “it’s insane how pervasive AI slop is in 75% of the turned-in work,” while a student who had worked as a course assistant complained that “students would have a 75-word paragraph due every week and it was all AI generated.”

One doesn’t have to read far in these kinds of threads to find plenty of students who feel aggrieved because they were accused of AI use but hadn’t actually used it. Given how poor most AI detection tools are, this is entirely plausible; and where detectors aren’t used, accusations often come down to a hunch. Everyone appears to be unhappy with the status quo.
AI can be an amazing tool for coding, web searches, data mining, and text summarization, but I’m old enough to wonder just what the heck you’re doing at college if you don’t want to process arguments on your own (i.e., think and read critically) or even write your own “personal reflections” (i.e., organize and express your deepest thoughts, memories, and feelings). Outsource these tasks often enough and you will fail to develop those skills.

I recently wrote a book on Friedrich Nietzsche and how his madcap, aphoristic, abrasive, humorous, and provocative philosophizing can help us think better and live better in a technological age. The idea of simply reading AI “summaries” of his work, useful though they may be for some purposes, makes me sad. The desiccated summation style of ChatGPT isn’t remotely the same as encountering a novel and complex human mind expressing itself wildly in thought and writing. And that’s assuming ChatGPT hasn’t hallucinated anything.

So good luck, students and professors both. I trust we will eventually muddle our way through the current moment. Those who want an education only for its “credentials” (not a new phenomenon) have never had an easier time of it, and they will head off into the world to vibe code their way through life. More power to them. But those who value both thought and expression will see the AI “easy button” for the false promise that it is, and they will keep doing the hard work of engaging with ideas, including their own, in a way that no computer can do for them.