Copyright nanit

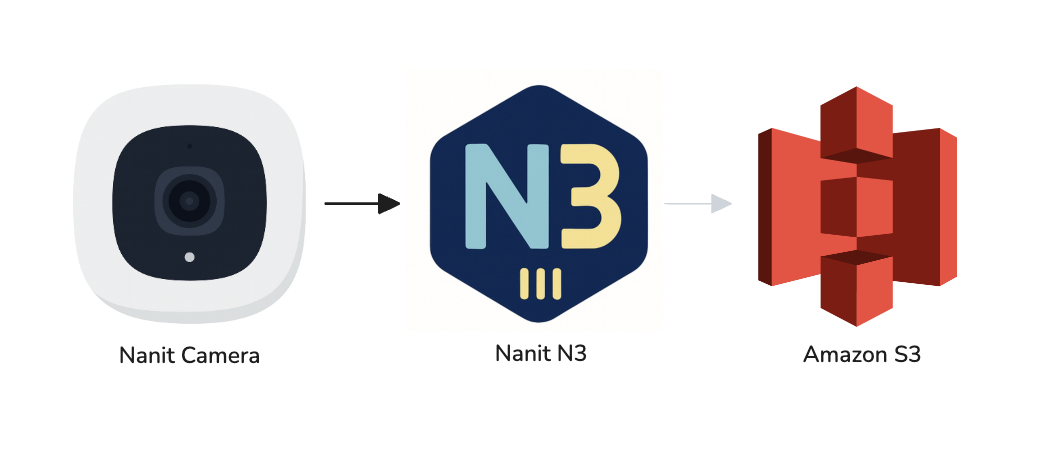

We used S3 as a landing zone for Nanit’s video processing pipeline (baby sleep-state inference), but at thousands of uploads/second, S3’s PutObject request fees dominated costs. Worse, S3’s auto-cleanup (Lifecycle rules) has a 1-day minimum; we paid for 24 hours of storage on objects processed in ~2 seconds. We built N3, a Rust-based in-memory landing zone that eliminates both issues, using S3 only as an overflow buffer. Result: meaningful cost reduction (~$0.5M/year).

Part 1: Background

High-Level Overview of Our Video Processing Pipeline

- Cameras record video chunks (configurable duration).
- For each chunk, the camera requests an S3 presigned URL from the Camera Service and uploads directly to S3.
- An AWS Lambda posts the object key to an SQS FIFO queue (sharded by baby_uid).
- Video processing pods consume from SQS, download from S3, and produce sleep states.

For a deeper dive, see this post.

What We Like About This Setup

- Landing on S3 + queuing to SQS decouples camera uploads from video processing. During maintenance or temporary downtime, we don’t lose videos; if queues grow, we scale processing.
- With S3, we don’t manage availability or durability.
- SQS FIFO + group IDs preserve per-baby ordering, keeping processing nodes mostly stateless (coordination happens in SQS).
- S3 Lifecycle rules offload GC: objects expire after one day, so we don’t track processed videos.

Why We Changed

PutObject costs dominated. Our objects are short-lived: videos land for seconds, then get processed. At our scale (thousands of uploads/s), the per-object request charge was the largest cost driver. Increasing chunking frequency (i.e., sending more, smaller chunks) to cut latency raises costs linearly, because each additional chunk is another PutObject request.

Storage was a secondary tax. Even when processing finished in ~2 s, Lifecycle deletes meant paying for ~24 h of storage.
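To make the linear relationship concrete, here is a rough back-of-the-envelope sketch. The upload rate (3,000/s) and the request price ($0.005 per 1,000 PUT requests) are assumptions chosen for illustration, not figures from our bill, and the function name is hypothetical.

```rust
/// Rough yearly S3 PutObject request cost in USD.
/// Both inputs are illustrative assumptions, not measured values.
fn yearly_put_cost_usd(uploads_per_sec: f64, usd_per_1000_puts: f64) -> f64 {
    const SECONDS_PER_YEAR: f64 = 365.0 * 24.0 * 3600.0;
    uploads_per_sec * SECONDS_PER_YEAR / 1000.0 * usd_per_1000_puts
}

fn main() {
    // Assumed: 3,000 uploads/s at $0.005 per 1,000 PUT requests.
    let base = yearly_put_cost_usd(3000.0, 0.005);
    // Halving the chunk duration doubles the upload rate, and the request bill with it.
    let half_length_chunks = yearly_put_cost_usd(6000.0, 0.005);
    println!("base: ${base:.0}/year");
    println!("half-length chunks: ${half_length_chunks:.0}/year");
}
```

Under these assumed inputs the request charge alone lands in the hundreds of thousands of dollars per year, and it scales one-to-one with the number of chunks uploaded.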
We needed a design that kept reliability and strict ordering while avoiding per-object costs on the happy path and minimizing “pay-to-wait” storage.

Part 2: Planning

Guiding Principles

- Simplicity through architecture: Eliminate complexity at the design level, not through clever implementations.
- Correctness: A true drop-in replacement that’s transparent to the rest of the pipeline.
- Optimize for the happy path: Design for the normal case and use S3 as a safety net for edge cases. Our processing algorithms are robust to occasional gaps, so we can prioritize simplicity over building complex guarantees; S3 provides reliability when needed.

Design Drivers

- Short-lived objects: segments live on the landing zone for seconds, not hours.
- Ordering: strict per-baby sequencing (no processing newer before older).
- Throughput: thousands of uploads/second; 2–6 MB per segment.
- Client limits: cameras have limited retries; don’t assume retransmits.
- Operations: tolerate multi-million-item backlogs during maintenance/scale-ups.
- No firmware changes: must work with existing cameras.
- Loss tolerance: very small gaps are acceptable; algorithms mask them.
- Cost: avoid per-object S3 costs on the happy path; minimize “pay-to-wait” storage.

Design at a Glance (N3 Happy Path + S3 Overflow)

The Architecture

N3 is a custom landing zone that holds videos in memory just long enough for processing to drain them (~2 seconds). S3 is used only when N3 can’t handle the load.

Two components:

- N3-Proxy (stateless, dual interfaces):
  - External (Internet-facing): Accepts camera uploads via presigned URLs.
  - Internal (private): Issues presigned URLs to Camera Service.
- N3-Storage (stateful, internal-only): Stores uploaded segments in RAM and enqueues SQS with a pod-addressable download URL.

Video processing pods consume from SQS FIFO and download from whichever storage the URL points to: N3 or S3.
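As a minimal sketch of this idea (not our actual implementation), the landing decision and the queue message could be modelled as below. Every type, field, and URL format here is hypothetical; the only points taken from the design are that S3 is the overflow target and that processors simply follow the URL they receive.

```rust
/// Where a segment ended up; processors only follow the URL they are given.
#[derive(Debug, PartialEq)]
enum Landing {
    N3 { download_url: String },
    S3 { download_url: String },
}

/// Hypothetical result of asking N3-Storage to hold a segment in RAM.
enum StoreResult {
    Accepted { pod: String },
    Rejected,
}

/// Hypothetical queue message; the group id preserves per-baby ordering in SQS FIFO.
#[derive(Debug)]
struct QueueMessage {
    group_id: String,
    download_url: String,
}

/// Happy path: the segment stays in N3. Otherwise it overflows to S3.
/// The URL formats and bucket name are made up for illustration.
fn land(key: &str, result: StoreResult) -> Landing {
    match result {
        StoreResult::Accepted { pod } => Landing::N3 {
            download_url: format!("http://{pod}/segments/{key}"),
        },
        StoreResult::Rejected => Landing::S3 {
            download_url: format!("s3://landing-bucket/{key}"),
        },
    }
}

/// The message looks the same either way, so the processor needs no special casing.
fn to_message(baby_uid: &str, landing: &Landing) -> QueueMessage {
    let download_url = match landing {
        Landing::N3 { download_url } | Landing::S3 { download_url } => download_url.clone(),
    };
    QueueMessage { group_id: baby_uid.to_string(), download_url }
}

fn main() {
    let happy = land("seg-1", StoreResult::Accepted { pod: "n3-storage-0".into() });
    let overflow = land("seg-2", StoreResult::Rejected);
    for landing in [&happy, &overflow] {
        let msg = to_message("baby-123", landing);
        println!("{} -> {}", msg.group_id, msg.download_url);
    }
}
```

Keeping the message shape identical for both landing zones is what lets N3 act as a drop-in replacement from the processor's point of view.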
Normal Flow (Happy Path)

- Camera requests an upload URL from Camera Service.
- Camera Service calls N3-Proxy’s internal API for a presigned URL.
- Camera uploads video to N3-Proxy’s external endpoint.
- N3-Proxy forwards to N3-Storage.
- N3-Storage holds video in memory and enqueues to SQS with a download URL pointing to itself.
- Processing pod downloads from N3-Storage and processes.

Two-Tier Fallback

- Tier 1: Proxy-level fallback (per-request): If N3-Storage can’t accept an upload, whether from memory pressure, processing backlog, or pod failure, N3-Proxy uploads to S3 on the camera’s behalf. (This covers the case where the camera got a presigned N3 URL before the failure was detected.)
- Tier 2: Cluster-level reroute (all traffic): If N3-Proxy or N3-Storage is unhealthy, Camera Service stops issuing N3 URLs and returns S3 presigned URLs directly. (All traffic flows to S3 until N3 recovers.)

Why Two Components?

We split N3-Proxy and N3-Storage because they have different requirements:

- Blast radius: If storage crashes, proxy can still route to S3. If proxy crashes, only that node’s traffic is affected, not the entire storage cluster.
- Resource profiles: Proxy is CPU/network-heavy (TLS termination). Storage is memory-heavy (holding videos). They need different instance types and have different scaling requirements.
- Security: Storage never touches the Internet.
- Rollout safety: We can update proxy (stateless) without touching storage (holding active data).

Validating the Design

The architecture made sense on paper, but we had critical unknowns:

- Capacity & sizing: what are real upload durations across client networks, and how much compute and upload buffer space do we need?
- Storage model: can we keep everything in RAM, or do we need disks?
- Resilience: how do we load balance cheaply and handle failed nodes?
- Operational policy: GC needs, retry expectations, and whether delete-on-GET is sufficient.
- Unknown unknowns: what edge cases would emerge when the idea meets reality?
To de-risk decisions, we ran two tracks during planning:

Approach 1: Synthetic Stress Tests

We built a load generator to push the system to its limits: varying concurrency, slow clients, sustained load, and processing downtime.

Goal: Find breaking points. Surface bottlenecks we hadn’t anticipated. Get deterministic baselines for capacity planning.

Approach 2: Production PoC (Mirror Mode)

Synthetic tests can’t replicate real camera behavior: flaky Wi-Fi, diverse firmware versions, unpredictable network conditions. We needed in-the-wild data without risking production.

- Mirror mode: n3-proxy wrote to S3 first (preserving prod), then also to a PoC N3-Storage wired to a canary SQS + video processors.
- Targeted cohorts: by firmware version / Baby-UID lists.
- Data parity: compared sleep states PoC vs. production; investigated any diffs.
- Observability: per-path dashboards (N3 vs. S3), queue depth, latency/RPS, error budgets, egress breakdown.

Feature flags (via Unleash) were critical. We could flip cohorts on and off in real time with no deployments, which let us test narrow slices (older firmware, weak Wi-Fi cameras) and revert instantly if issues appeared.

What We Discovered

- Bottlenecks: TLS termination consumed most CPU, and AWS burstable networking throttled us after credits expired.
- Memory-only storage was viable. Real upload-time distributions and concurrency showed we could fit the working set in RAM with safe headroom; disks were not required.
- Delete-on-GET is safe. We did not observe re-downloads; retries happen downstream in the processor, so N3 doesn’t need to support download retries.
- We need lightweight GC. Some segments get skipped by processing and would never be downloaded or deleted, so we added a TTL GC pass to clean up stragglers.

These findings shaped our implementation: memory-backed storage, network-optimized instances with TLS optimization, and delete-on-GET with TTL GC for stragglers.
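A minimal sketch of delete-on-GET combined with a TTL GC pass, assuming a single process and using a std `Mutex<HashMap>` as a stand-in for whatever concurrent map the real service uses. The type names, method names, and the three-hour TTL are hypothetical.

```rust
use std::collections::HashMap;
use std::sync::Mutex;
use std::time::{Duration, Instant};

struct Segment {
    data: Vec<u8>,
    stored_at: Instant,
}

/// Hypothetical in-memory landing store (a sketch, not N3-Storage itself).
struct MemStore {
    ttl: Duration,
    segments: Mutex<HashMap<String, Segment>>,
}

impl MemStore {
    fn new(ttl: Duration) -> Self {
        Self { ttl, segments: Mutex::new(HashMap::new()) }
    }

    fn put(&self, key: &str, data: Vec<u8>, now: Instant) {
        let segment = Segment { data, stored_at: now };
        self.segments.lock().unwrap().insert(key.to_string(), segment);
    }

    /// Delete-on-GET: the first download removes the segment, so a second
    /// download of the same key finds nothing.
    fn take(&self, key: &str) -> Option<Vec<u8>> {
        self.segments.lock().unwrap().remove(key).map(|s| s.data)
    }

    /// TTL GC pass: drop segments that were never downloaded and are older
    /// than the TTL. Returns how many were removed.
    fn gc(&self, now: Instant) -> usize {
        let ttl = self.ttl;
        let mut map = self.segments.lock().unwrap();
        let before = map.len();
        map.retain(|_, s| now.duration_since(s.stored_at) < ttl);
        before - map.len()
    }
}

fn main() {
    let store = MemStore::new(Duration::from_secs(3 * 3600)); // assumed TTL
    let t0 = Instant::now();
    store.put("seg-a", vec![1, 2, 3], t0);
    store.put("seg-b", vec![4], t0); // skipped by processing, never downloaded

    println!("first download: {:?}", store.take("seg-a"));
    println!("second download: {:?}", store.take("seg-a"));
    println!("removed after 1 minute: {}", store.gc(t0 + Duration::from_secs(60)));
    println!("removed after 4 hours: {}", store.gc(t0 + Duration::from_secs(4 * 3600)));
}
```

Passing `now` in explicitly keeps the GC logic easy to test without sleeping; a real service would presumably run the pass on a timer.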
Part 3: Implementation Details

DNS Load Balancing

n3-proxy is a DaemonSet on dedicated nodes, one pod per node to maximize network and CPU resources for TLS termination. We need node-level load balancing and graceful restarts.

An AWS Network Load Balancer would work, but at our throughput (thousands of uploads/second, sustained multi-GB/s), the combination of fixed costs plus per-GB processed fees becomes expensive.

Instead, we use DNS-based load balancing via Route53 multi-value A records, which is significantly cheaper.

- For each node we create a MultiValue record that contains a single IP.
- Each record has a health check that hits an external readiness endpoint.
- A records use a short 30-second TTL.

This gives us:

- If a node fails, it’s taken out of the pool and cameras stop uploading to it.
- Because the external readiness endpoint is also used as the Kubernetes readiness probe, marking a pod Not Ready during rollouts automatically removes it from DNS.

Rollout process

n3-proxy pods have a graceful shutdown mechanism:

- On SIGTERM, the pod enters paused mode.
- Readiness becomes Not Ready, but uploads are still accepted.
- Wait 2× DNS TTL (e.g., 60s) so the DNS health check removes the node and camera DNS caches update.
- Drain active connections, then restart.
- On startup, wait for health checks to pass and for client DNS TTLs to expire before rolling to the next pod (lets the node rejoin the pool).

Networking Limitations

When doing initial benchmarks to size the cluster, we saw a surprising pattern: runs started near ~1k RPS, then dropped to ~70 RPS after ~1 minute. Restarting didn’t help; after waiting and rerunning, we briefly saw ~1k RPS again.

It turns out that when AWS says an instance can do “Up to 12.5 Gbps”, that’s burstable networking backed by credits; when you’re below the baseline, you accrue credits and can burst for short periods.
Baseline depends on instance family and vCPUs:

- Non-network-optimized: ~0.375 Gbps/vCPU
- Network-optimized (suffix “n”): ~3.125 Gbps/vCPU

And for instances that don’t say “Up to,” you get the stated Gbps continuously.

Conclusion: our workload is steady, so bursts don’t help. We moved to network-optimized c8gn.4xlarge nodes, which provide 50 Gbps each, giving us the sustained throughput we need.

HTTPS, rustls, and Graviton4

Initially, for simplicity, we used a stunnel sidecar for HTTPS termination, but early stress testing showed HTTPS was the main CPU consumer and primary bottleneck. We made three changes:

- Moved from stunnel to native rustls.
- Upgraded from Graviton3 to Graviton4 instances.
- Compiled n3-proxy with target-cpu and crypto features enabled.

These changes yielded ~30% higher RPS at the same cost.

Outgoing Traffic Costs

We assumed that since we only receive uploads (ingress is free) and don’t send payloads to clients, egress would be negligible. Post-launch, we saw non-trivial outbound traffic.

TLS handshakes

Each upload opens a new TLS connection, so a full handshake runs and sends ~7 KB of certificates. In theory we could reduce this with smaller (e.g., ECDSA) certs, session resumption/tickets, or long-lived connections, but given our constraint of not changing camera behavior, we accept this overhead for now.

Surprisingly, TLS handshakes were only a small part of the outbound bytes. A tcpdump showed many 66-byte ACKs. In one short traffic capture:

- total_bytes = 37,014,432
- ack_frame_bytes = 31,258,550 (84.4%)
- data_frame_bytes = 5,755,882 (15.6%)

~85% of outbound bytes were ACK frames. With ~1500-byte MTUs and frequent ACKs, overhead adds up.
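The shares above can be re-derived from the raw capture numbers. The snippet below is only a sanity check of that arithmetic; the helper name is hypothetical, and the frame count is an approximation that assumes every ACK frame is exactly 66 bytes.

```rust
/// Percentage of `total` made up by `part`.
fn share_pct(part: u64, total: u64) -> f64 {
    part as f64 * 100.0 / total as f64
}

fn main() {
    // Byte counts from the short capture quoted above.
    let total_bytes: u64 = 37_014_432;
    let ack_frame_bytes: u64 = 31_258_550;
    let data_frame_bytes: u64 = 5_755_882;

    // The two categories account for every captured byte.
    assert_eq!(ack_frame_bytes + data_frame_bytes, total_bytes);

    println!("ACK share:  {:.1}%", share_pct(ack_frame_bytes, total_bytes)); // 84.4%
    println!("data share: {:.1}%", share_pct(data_frame_bytes, total_bytes)); // 15.6%

    // Approximate number of ACK frames, assuming 66 bytes each.
    println!("approx. ACK frames: {}", ack_frame_bytes / 66);
}
```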
While we can’t easily reduce the number of ACKs, we can make each ACK smaller by removing TCP timestamps (−12 bytes/ACK). We apply this from a Kubernetes init container (on Linux, TCP timestamps are controlled by the net.ipv4.tcp_timestamps sysctl).

This isn’t without risk: timestamps are what TCP uses to protect against wrapped sequence numbers, so without them, with high byte counts on the same socket, sequence numbers can wrap and delayed packets may be mis-merged, causing corruption.

Mitigations: (1) new socket per upload; (2) recycle n3-proxy ↔ n3-storage sockets after ~1 GB sent.

Memory Leak

After the initial launch, we saw steady n3-proxy memory growth. Even after traffic stopped, the process returned to an ever-higher baseline, so it wasn’t just the OS holding freed pages. jemalloc stats showed referenced memory constantly increasing.

Using rust-jemalloc-pprof we profiled memory in production and identified growth in per-connection hyper BytesMut buffers. Since we handle large uploads over variable networks, some client connections stalled mid-transfer and were never cleaned up. Their per-connection hyper buffers (BytesMut) stuck around and memory kept climbing.

When we terminated connections idle for more than 10 minutes, memory dropped by ~1 GB immediately, confirming the leak was from dangling sockets.

Fix: make sockets short-lived and enforce time limits.

- Disable keep-alive: close the connection immediately after each upload completes.
- Tighten timeouts: set header/socket timeouts so stalled uploads are terminated and buffers are freed.

We started with the simplest path: in-memory storage. It avoids I/O tuning and lets us use straightforward data structures. Each video upload increments bytes_used; each download deletes the video and decrements it. Above ~80% capacity, we start rejecting uploads to avoid OOM and signal n3-proxy to stop signing upload URLs. The control handle lets us manually pause uploads and garbage collection.

Graceful Restart

With memory-only storage, restarts must not drop in-flight data.
Our graceful restart process:

- SIGTERM to a pod (StatefulSet rolls one pod at a time).
- Pod becomes Not Ready and leaves the Service (no new uploads).
- It continues serving downloads for already-uploaded videos.
- Once downloads quiesce (no recent reads → processing drained), wait for any open requests to complete.
- Restart and move to the next pod.

Under normal operation pods drain in seconds.

We use two cleanup mechanisms:

Delete on download

We delete videos immediately after download. In the PoC, we saw zero re-downloads; video processors retry internally. This eliminates the need to hold data or track “processed” state.

TTL GC for stragglers

Deleting on download doesn’t cover segments skipped by the processor (never downloaded → never deleted). We added a lightweight TTL GC: periodically scan the in-memory DashMap and remove entries older than a configurable threshold (e.g., a few hours).

Maintenance mode

During planned processing downtime, we can temporarily pause GC via an internal control so videos aren’t deleted while consumption is stopped.

Part 4: Conclusion

By using S3 as a fallback buffer and N3 as the primary landing zone, we eliminated ~$0.5M/year in costs while keeping the system simple and reliable.

The key insight: most “build vs. buy” decisions focus on features, but at scale, economics shift the calculus. For short-lived objects (~2 seconds in normal operation), we don’t need replication or sophisticated durability; simple in-memory storage works. But when processing lags or maintenance extends object lifetime, we need S3’s reliability guarantees. We get the best of both worlds: N3 handles the happy path efficiently, while S3 provides durability when objects need to live longer. If N3 has any issues (memory pressure, pod crashes, or cluster problems), uploads seamlessly fail over to S3.

What Made This Work

Defining the problem clearly upfront: constraints, assumptions, and boundaries prevented scope creep.
Validating early with a mirror-mode PoC let us discover bottlenecks (TLS, network throttling) and validate assumptions before committing. This prevented overengineering and backtracking.

When Should You Build Something Like This?

Consider custom infrastructure when you have both: sufficient scale for meaningful cost savings, and specific constraints that enable a simple solution. The engineering effort to build and maintain your system must be less than the infrastructure costs it eliminates. In our case, specific requirements (ephemeral storage, loss tolerance, S3 fallback) let us build something simple enough that maintenance costs stay low. Without both factors, stick with managed services.

Would we do it again? Yes. The system has been running reliably in production, and the fallback design lets us avoid complexity without sacrificing reliability.