Copyright Interesting Engineering

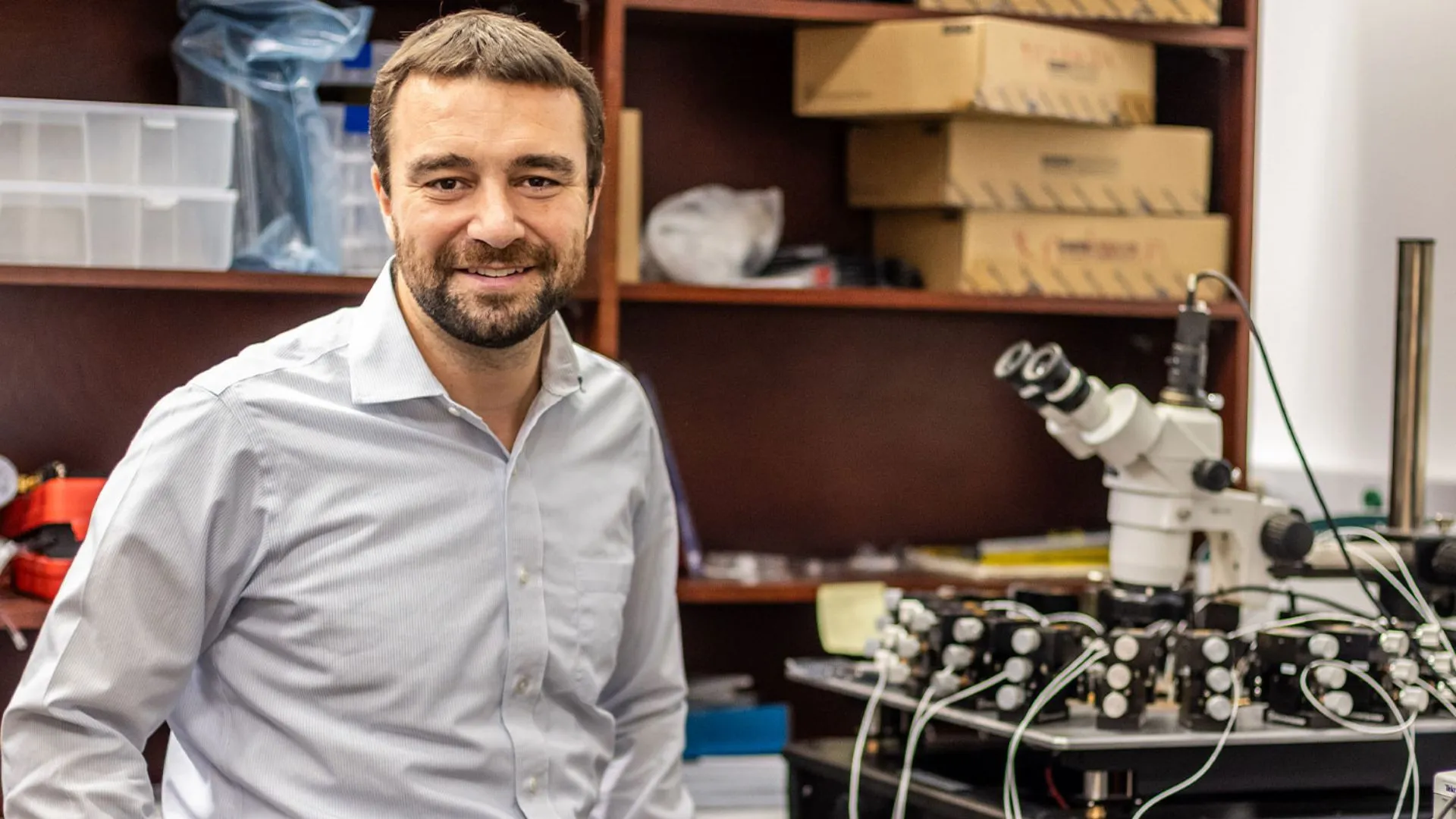

A team of engineers at The University of Texas at Dallas has developed a small-scale computer prototype that learns more like the human brain does. The brain-inspired hardware can recognize patterns and make predictions using far fewer training computations than conventional AI systems.

Dr. Joseph S. Friedman, associate professor of electrical and computer engineering and leader of the NeuroSpinCompute Laboratory, said the breakthrough could transform how machines learn. “Our work shows a potential new path for building brain-inspired computers that can learn on their own,” he said. “Since neuromorphic computers do not need massive amounts of training computations, they could power smart devices without huge energy costs.”

Traditional computers separate memory from processing, forcing data to move constantly between the two. This design makes artificial intelligence systems energy-intensive, and today’s models also rely heavily on vast labeled datasets. Training large models can cost hundreds of millions of dollars.

Neuromorphic computing works differently. It takes inspiration from the brain, where neurons and synapses process and store information in the same place. Synapses strengthen or weaken based on activity, allowing continuous learning.

Friedman’s work builds on a principle proposed by neuropsychologist Donald Hebb, known as Hebb’s law and often summarized as “neurons that fire together, wire together.” “The principle that we use for a computer to learn on its own is that if one artificial neuron causes another artificial neuron to fire, the synapse connecting them becomes more conductive,” Friedman said.

## Using magnetic tunnel junctions

At the core of the prototype are magnetic tunnel junctions (MTJs), nanoscale devices made of two magnetic layers separated by a thin insulating barrier. Electrons tunnel through the barrier more easily when the magnetizations of the two layers point in the same direction and less easily when they point in opposite directions.
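To make the idea concrete, here is a minimal Python sketch of a single MTJ acting as a Hebbian synapse. It is a simplified model of our own, not the prototype’s circuit: the resistance values are illustrative placeholders, and the hypothetical `MTJSynapse` class and `hebbian_update` rule simply switch a junction to its parallel (low-resistance, more conductive) state whenever the neurons on both sides fire together.

```python
from dataclasses import dataclass

# Illustrative resistances in ohms (assumed values, not from the study).
R_PARALLEL = 1_000.0      # magnetizations aligned: easier tunneling, low resistance
R_ANTIPARALLEL = 2_000.0  # magnetizations opposed: harder tunneling, high resistance


@dataclass
class MTJSynapse:
    """Hypothetical binary synapse: parallel = strong, antiparallel = weak."""

    parallel: bool = False  # start in the weak (antiparallel) state

    @property
    def conductance(self) -> float:
        # Conductance is the reciprocal of resistance (siemens).
        return 1.0 / (R_PARALLEL if self.parallel else R_ANTIPARALLEL)

    def hebbian_update(self, pre_fired: bool, post_fired: bool) -> None:
        # Hebb-style rule (assumed form): when the neurons on both sides
        # fire together, switch the junction to its more conductive state.
        if pre_fired and post_fired:
            self.parallel = True


def post_fires(syn: MTJSynapse, pre_fired: bool, voltage: float, threshold: float) -> bool:
    """Output neuron fires if the current through the junction reaches a threshold."""
    current = voltage * syn.conductance if pre_fired else 0.0  # Ohm's law: I = V * G
    return current >= threshold


if __name__ == "__main__":
    syn = MTJSynapse()
    # Weak junction: 0.5 mA is below the 0.8 mA threshold, prints False.
    print(post_fires(syn, True, voltage=1.0, threshold=8e-4))
    # Assume a separate teaching input made the output fire together with the input.
    syn.hebbian_update(pre_fired=True, post_fired=True)
    # Strong junction: 1 mA reaches the threshold, prints True.
    print(post_fires(syn, True, voltage=1.0, threshold=8e-4))
```

In this toy run, the weak junction cannot push the output neuron past its threshold until a paired firing event switches it to the parallel state; after that, the input alone is enough to make the output fire.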
By linking MTJs in a network, the researchers created a system that adjusts its own connections as signals pass through it. Some pathways strengthen while others weaken, much as the brain’s synapses change during learning. Because MTJs switch between two distinct states, they also store data reliably, addressing a major limitation that has long hindered other neuromorphic approaches.

## Toward scalable brain-like machines

Friedman’s next goal is to scale up the prototype so it can handle more complex learning tasks. A larger neuromorphic system could process data in real time while consuming only a fraction of the power used by today’s AI chips.

Such efficiency could allow phones, wearables, and other edge devices to run advanced AI models locally instead of relying on massive cloud servers. It could also ease the growing strain on data centers worldwide, which consume vast amounts of energy for AI computation.

“If successful, this technology could allow smart devices to think and adapt without needing to constantly connect to the cloud,” Friedman said.

The study is published in the journal Communications Engineering.
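The self-adjusting network described above, in which some pathways strengthen while others weaken, can be illustrated with a toy pattern-association sketch in Python. It is not the researchers’ design: the conductance values, the hypothetical `BinaryHebbianNetwork` class, the rule that silent inputs are weakened, and the teaching signal that chooses which output fires during training are all assumptions made for illustration.

```python
# Illustrative conductances in siemens (assumed, not from the study).
G_PARALLEL = 1.0e-3      # strengthened pathway
G_ANTIPARALLEL = 0.5e-3  # weakened pathway


class BinaryHebbianNetwork:
    """Toy layer of binary MTJ-like synapses (hypothetical model)."""

    def __init__(self, n_inputs: int, n_outputs: int) -> None:
        # parallel[j][i] is True when the junction from input i to output j is strong.
        self.parallel = [[False] * n_inputs for _ in range(n_outputs)]

    def currents(self, x: list[int]) -> list[float]:
        # Each output sums the current from active inputs through its junctions (V = 1).
        return [
            sum((G_PARALLEL if strong else G_ANTIPARALLEL) * xi for strong, xi in zip(row, x))
            for row in self.parallel
        ]

    def train(self, x: list[int], target: int) -> None:
        # The target output is assumed to be made to fire. For its synapses,
        # active inputs switch to parallel (strengthen) and silent inputs
        # switch to antiparallel (weaken). Other outputs are left unchanged.
        self.parallel[target] = [bool(xi) for xi in x]

    def predict(self, x: list[int]) -> int:
        # The output receiving the largest current wins (first index on ties).
        c = self.currents(x)
        return max(range(len(c)), key=c.__getitem__)


if __name__ == "__main__":
    net = BinaryHebbianNetwork(n_inputs=4, n_outputs=2)
    net.train([1, 1, 0, 0], target=0)  # pattern A -> output 0
    net.train([0, 0, 1, 1], target=1)  # pattern B -> output 1
    print(net.predict([1, 0, 0, 0]))  # partial pattern A: prints 0
    print(net.predict([0, 0, 0, 1]))  # partial pattern B: prints 1
```

After training on two patterns, the toy network recognizes each one from a partial input, because its strengthened (parallel) junctions carry twice the current of the weakened ones. Keeping every weight in one of two well-separated states mirrors the binary switching that the article credits for MTJs’ reliable storage.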