Copyright Interesting Engineering

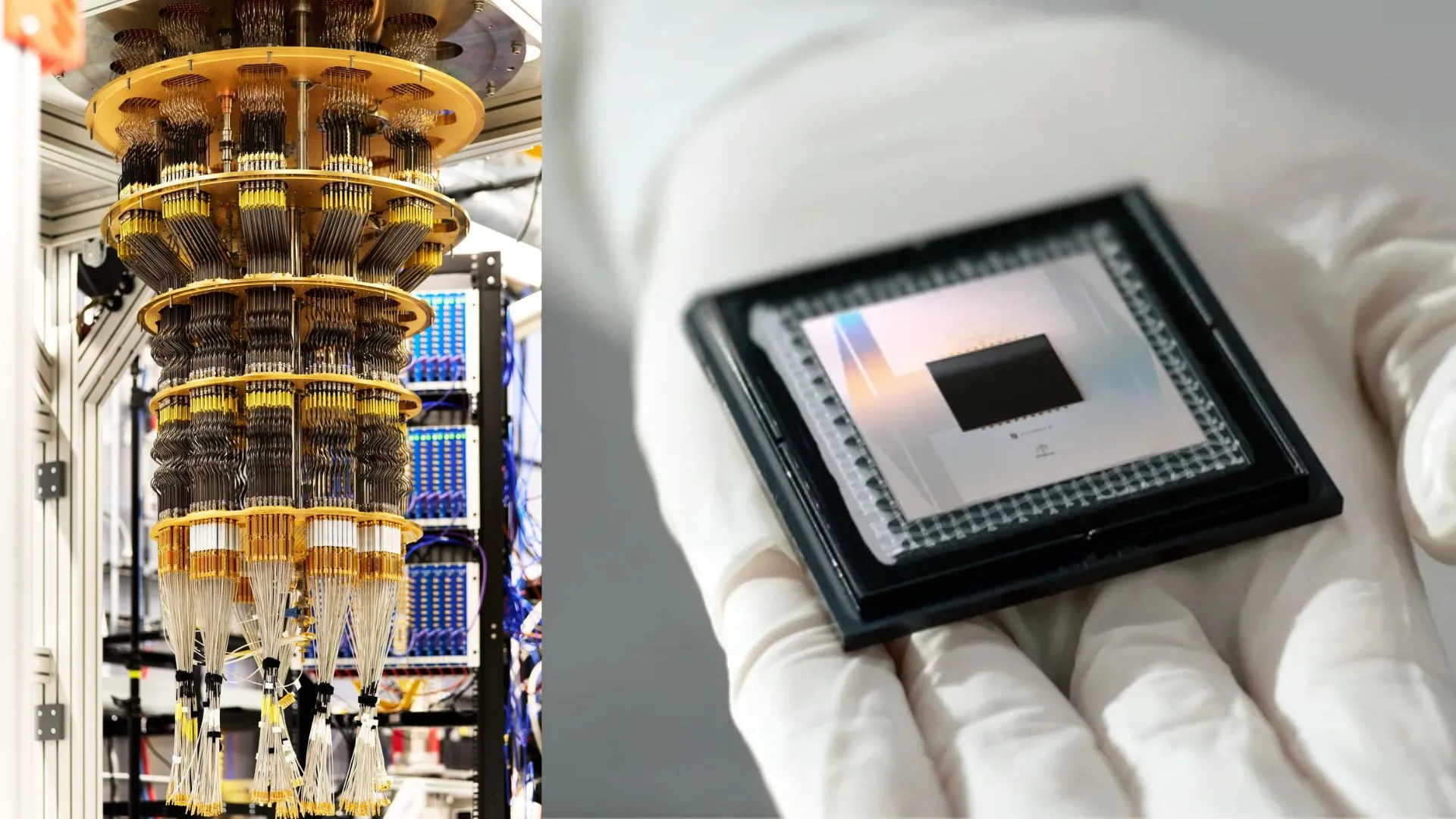

Google’s Quantum AI team claims its latest algorithm could move quantum computing closer to real-world impact. The new method, called Quantum Echoes, may one day help scientists design better drugs, catalysts, polymers, and batteries. Although the early experiments have not yet demonstrated a “quantum advantage” on a practical problem, researchers believe the results mark a turning point toward practical benefits.

Quantum computers rely on qubits, which unlike classical bits can exist in superpositions of 0 and 1, allowing certain problems to be solved far faster than on classical machines. The more qubits that are connected, the greater the system’s potential power. Google first made headlines in 2019 when its 54-qubit Sycamore processor solved a problem in 200 seconds that, by Google’s estimate, would have taken a conventional supercomputer 10,000 years. In 2024, its 105-qubit Willow chip performed a benchmark calculation that would have taken Frontier, one of the world’s fastest supercomputers, 10 septillion years.

However, critics argued those benchmarks proved little. Google’s earlier claims of “quantum supremacy” relied on a process called random circuit sampling, a complex exercise with no real-world application. The randomness of the test also made it hard to verify results between different quantum systems.

Now, Google says its Quantum Echoes algorithm addresses that flaw. When the team ran it on 65 of Willow’s qubits, it completed the task about 13,000 times faster than the best known classical algorithm running on Frontier. Most importantly, it produced verifiable results that could be reproduced on multiple quantum processors.

“The key aspect of verification is that it can lead to applications,” says Thomas O’Brien, a staff research scientist at Google Quantum AI. “If I can’t prove to you that the data is correct, how can I do anything with it?”

Inside the Quantum Echoes algorithm

The algorithm works in three stages. First, it performs a series of quantum operations, such as simulating how a molecule behaves. Next, it slightly perturbs one of the qubits involved.
Finally, it reverses the original operations and compares both sets of results. This forward and backward process helps reveal how small changes affect an entire molecular system, something that overwhelms even the fastest classical supercomputers. O’Brien likens the qubit disturbance to the butterfly effect, where a small perturbation ripples across the system.

Nobel laureate Michel Devoret, Google’s chief scientist for quantum hardware, credits Willow’s 105-qubit capacity and low error rate of 0.1 percent for making the experiment possible. O’Brien notes the difference in precision compared with Google’s 2019 demonstration. Back then, “only 0.1 percent of the data gathered was correct,” he says. This time, “only 0.1 percent of the data could be wrong.”

Toward real-world applications

The researchers believe the Quantum Echoes algorithm, formally known as an out-of-time-order correlator, could eventually enhance nuclear magnetic resonance (NMR) spectroscopy, a molecular imaging technique similar to MRI. In early tests with up to 15 qubits, the algorithm produced accurate molecular models.

“While things are still at an early stage, this methodology could yield broad applications in the future given NMR’s wide use across chemistry, biology, and materials science,” says Ashok Ajoy, an assistant professor at the University of California, Berkeley.

O’Brien admits the NMR results are not yet beyond classical capabilities. Still, he expects that future refinements in error correction could help achieve quantum advantage on real problems.

Hartmut Neven, founder and manager of Google Quantum AI, remains optimistic. “We continue to be optimistic that within five years, we will see real-world applications that are only possible with quantum computers,” he says.

The study is published in the journal Nature.
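
For readers who want a feel for the three stages described under “Inside the Quantum Echoes algorithm” (run operations forward, perturb one qubit, run them in reverse, compare), here is a minimal toy sketch in plain Python. It is not Google’s circuit or its out-of-time-order measurement: the random circuit, the bit-flip used as the perturbation, the overlap used as the comparison, and all function names (`build_circuit`, `echo`, and so on) are assumptions chosen purely for illustration on a handful of simulated qubits.

```python
import cmath
import math
import random

def apply_1q(state, gate, q):
    """Apply a 2x2 gate to qubit q of a state vector (list of amplitudes)."""
    out = list(state)
    bit = 1 << q
    for i in range(len(state)):
        if not i & bit:
            a0, a1 = state[i], state[i | bit]
            out[i] = gate[0][0] * a0 + gate[0][1] * a1
            out[i | bit] = gate[1][0] * a0 + gate[1][1] * a1
    return out

def apply_cz(state, q1, q2):
    """Controlled-Z: flip the sign where both qubits are 1 (its own inverse)."""
    mask = (1 << q1) | (1 << q2)
    return [-a if (i & mask) == mask else a for i, a in enumerate(state)]

def dagger(gate):
    """Conjugate transpose of a 2x2 gate, i.e. the operation that undoes it."""
    return [[gate[0][0].conjugate(), gate[1][0].conjugate()],
            [gate[0][1].conjugate(), gate[1][1].conjugate()]]

def random_rotation(rng):
    """A random single-qubit unitary built from three angles."""
    theta, phi, lam = (rng.uniform(0, 2 * math.pi) for _ in range(3))
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -cmath.exp(1j * lam) * s],
            [cmath.exp(1j * phi) * s, cmath.exp(1j * (phi + lam)) * c]]

def build_circuit(n, depth, rng):
    """Hypothetical stand-in for 'a series of quantum operations'."""
    ops = []
    for layer in range(depth):
        for q in range(n):
            ops.append(("u", random_rotation(rng), q))
        for q in range(layer % 2, n - 1, 2):
            ops.append(("cz", q, q + 1))
    return ops

def run(state, ops):
    for op in ops:
        if op[0] == "u":
            state = apply_1q(state, op[1], op[2])
        else:
            state = apply_cz(state, op[1], op[2])
    return state

def inverse(ops):
    """Reverse the order of the operations and undo each one."""
    return [("u", dagger(op[1]), op[2]) if op[0] == "u" else op
            for op in reversed(ops)]

X = [[0, 1], [1, 0]]  # bit flip, used here as the small perturbation

def echo(n, depth, perturb, seed=0):
    """Return how closely the final state matches the starting state (0 to 1)."""
    ops = build_circuit(n, depth, random.Random(seed))
    start = [0j] * (2 ** n)
    start[0] = 1 + 0j
    state = run(start, ops)               # stage 1: forward operations
    if perturb:
        state = apply_1q(state, X, 0)     # stage 2: perturb one qubit
    state = run(state, inverse(ops))      # stage 3: reverse the operations
    overlap = sum(a.conjugate() * b for a, b in zip(start, state))
    return abs(overlap) ** 2

if __name__ == "__main__":
    print("echo without perturbation:", echo(4, 6, perturb=False))
    print("echo with perturbation:   ", echo(4, 6, perturb=True))
```

Without the perturbation, the reversed operations exactly undo the forward ones and the echo returns to 1. With it, the echo drops, and how far it drops reflects how widely the single-qubit disturbance spread through the system, which is the butterfly-effect picture O’Brien describes. A classical simulation like this one doubles in memory with every added qubit, which is why it only works at toy scale.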