Copyright Inc. Magazine

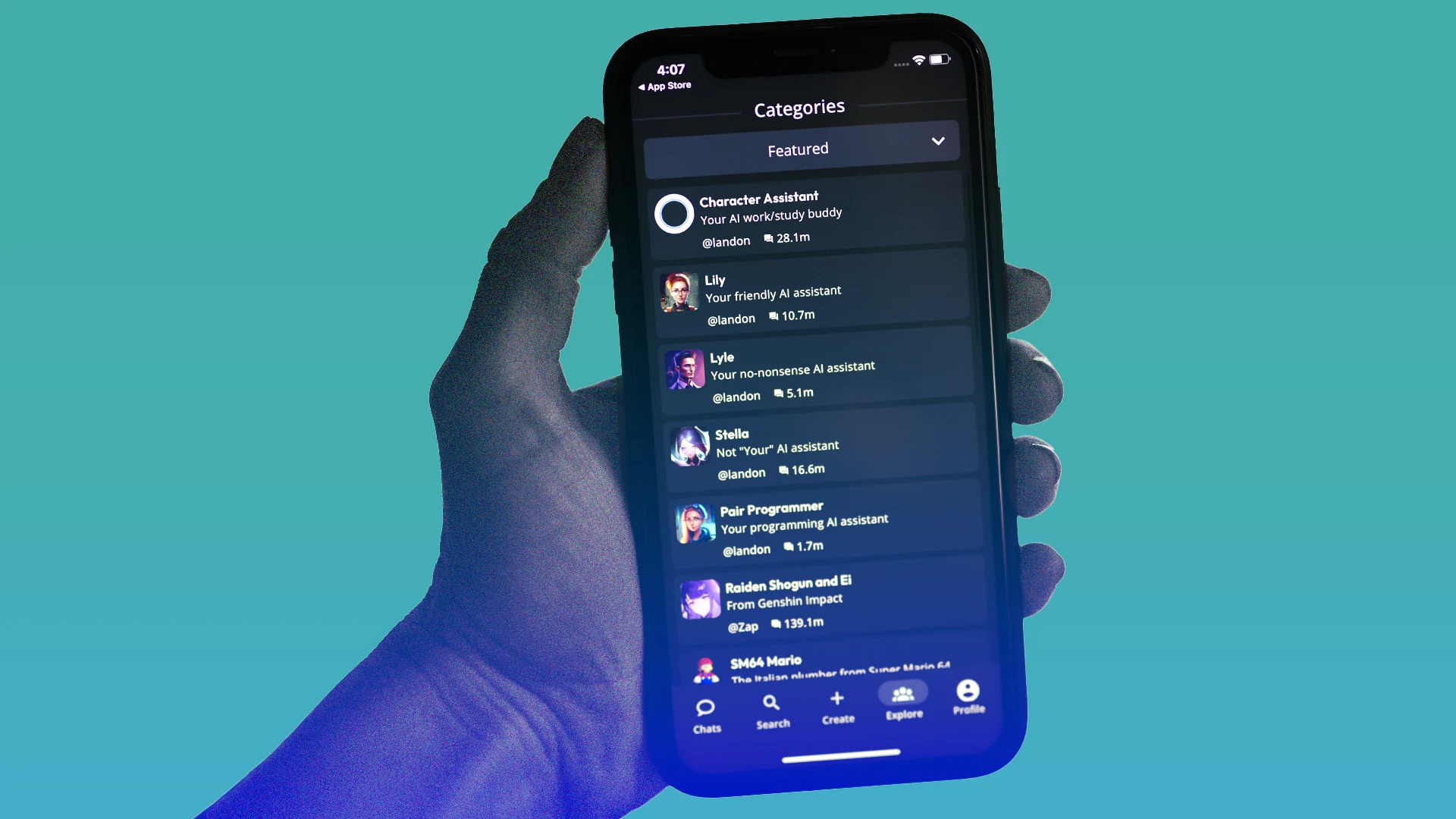

“We do not take this step of removing open-ended Character chat lightly – but we do think that it’s the right thing to do given the questions that have been raised about how teens do, and should, interact with this new technology,” Character.AI said.

In addition to removing access for users under 18, the company announced that it is working on age verification measures and establishing a non-profit called the AI Safety Lab, which will focus on “innovating safety alignment for next-generation AI entertainment features.” Previous safety measures taken by the company include a notification that directs users to the National Suicide Prevention Lifeline when self-harm or suicide is mentioned during chatbot conversations.

The decision comes after families and parents filed lawsuits against Character.AI alleging that the company was liable for the deaths of their children. In August, Texas Attorney General Ken Paxton announced an investigation into the company and Meta AI Studio for “potentially engaging in deceptive trade practices and misleadingly marketing themselves as mental health tools.”

Noam Shazeer and Daniel De Freitas left Google in 2021 to found Character.AI after Google declined to release their chatbot to the public over concerns about safety and fairness, according to The Wall Street Journal. Google hired the pair back after Character.AI signed a $2.7 billion licensing deal in 2024.

Character.AI isn’t the only company that offers distinct AI personas; Talkie, Replika, Chai, and Nomi are other examples. AI used for these purposes has come under media scrutiny over the past year, with reports of people developing emotional connections with their chatbots, chatbots becoming delusional, and even unauthorized digital versions of dead people. Character.AI's decision follows similar moves by other companies.
Earlier this month, Meta announced safety features that will allow parents to block their children’s access to AI characters on Instagram. OpenAI introduced similar controls in late September.