SANTA CLARA, California – Sept 24 (Reuters) – The computing chips that power artificial intelligence consume a lot of electricity. On Wednesday, Taiwan Semiconductor Manufacturing Co (TSMC), the world’s biggest manufacturer of those chips, showed off a new strategy to make them more energy efficient: using AI-powered software to design them.

Nvidia’s current flagship AI servers, for example, can consume as much as 1,200 watts during demanding tasks, which, if run continuously, is roughly the average power draw of a single U.S. home.

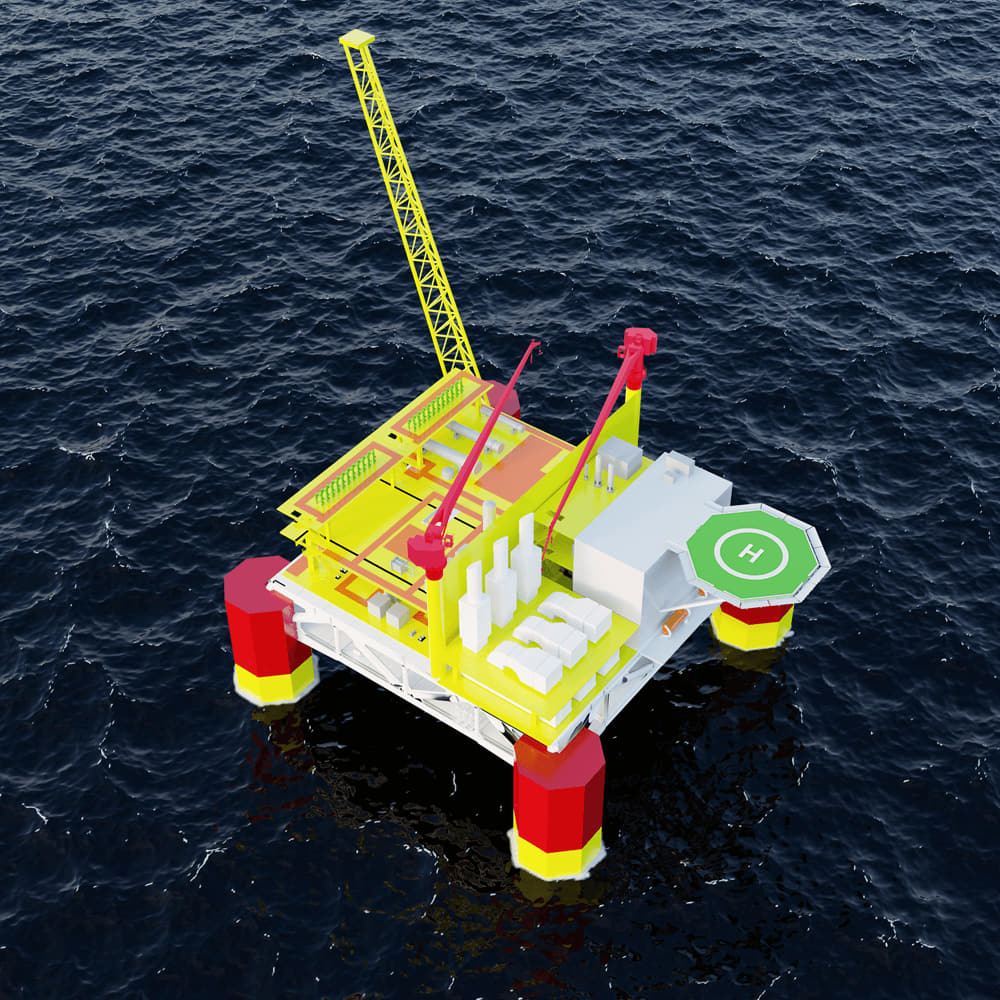

The energy-efficiency gains TSMC is hoping to achieve come from a new generation of chip designs in which multiple “chiplets” – smaller pieces of full computing chips – built with different technologies are combined into one computing package.

For some of the complex tasks in designing chips, the tools from TSMC’s software partners found better solutions than TSMC’s own human engineers – and did so much faster.

“That helps to max out TSMC technology’s capability, and we find this is very useful,” Jim Chang, deputy director at TSMC for its 3DIC Methodology Group, said during a presentation describing the findings. “This thing runs five minutes while our designer needs to work for two days.”

The current way of manufacturing chips is hitting limits, such as the ability to move data on and off chips using electrical connections. New technologies, such as moving information between chips with optical connections, need to be made reliable enough to use in massive data centers, said Kaushik Veeraraghavan, an engineer in Meta Platforms’ infrastructure group who gave a keynote address.

“Really, this is not an engineering problem,” Veeraraghavan said. “It’s a fundamental physical problem.”

Reporting by Stephen Nellis in Santa Clara, California; Editing by Stephen Coates