This investigative report contains AI-generated images and videos, created to show you the best ways to tell real content from AI-generated fakes.

This is the fourth part of InvestigateTV’s mAnIpulated series, examining the ways in which AI is impacting our everyday lives.

Visit the series homepage to follow each national release as well as reports from your local Gray Media stations.

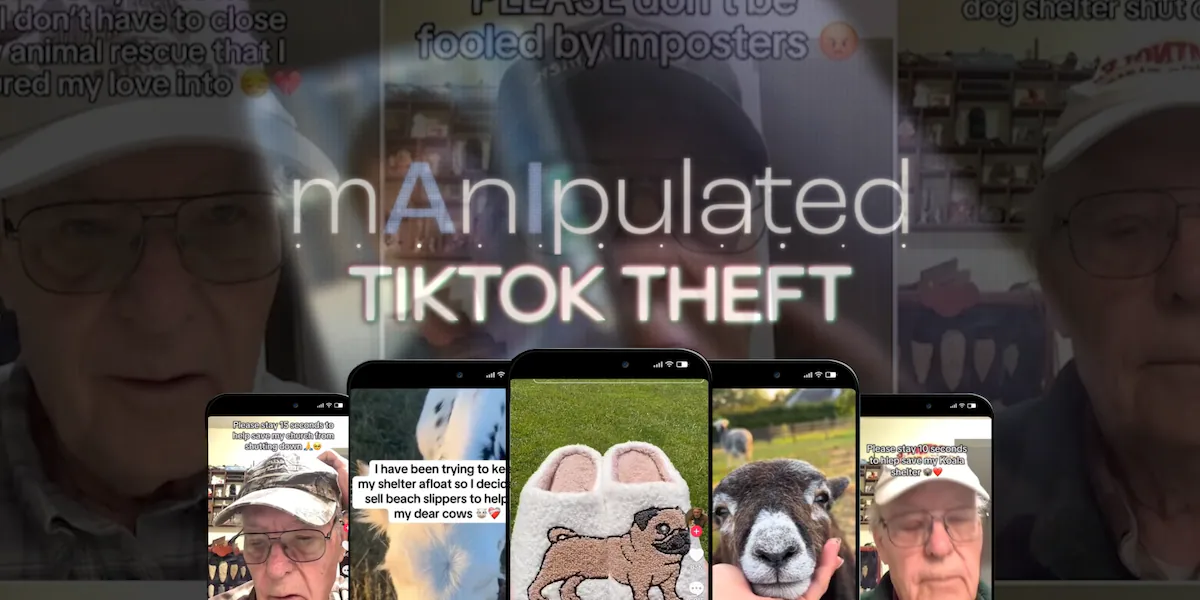

EAST TAWAS, Mich. (InvestigateTV) — An 84-year-old TikTok creator who built a following by sharing daily jokes has become the unwitting face of multiple online scams. These scams use artificial intelligence to steal his likeness and voice to sell products under false charitable pretenses.

Charles Ray, who has amassed more than 15,000 followers and 150,000 likes in less than a year on TikTok, discovered scammers were using AI technology to create fake videos of him.

The videos show a fake version of him claiming to run a struggling animal rescue, farm, and church in order to sell products ranging from dog slippers to Bible bags.

“I was dumbfounded. I couldn’t believe it. That sounds just like me,” Ray said after viewing one of the fraudulent videos.

“It’s a scam. Somebody has stolen my image and my content, and they’re trying to get your money, and don’t fall for it.”

The scheme represents a growing trend of AI-enabled fraud that federal investigators say is challenging law enforcement agencies nationwide.

Multiple fake personas, same stolen face

The scammers have created numerous fake scenarios using Ray’s likeness across multiple TikTok channels.

In one video, an AI-generated version of Ray claims he opened a dog rescue after retirement and is “currently struggling to make ends meet and keep it open.”

The fake Ray then promotes handmade dog slippers as a way to support the nonexistent rescue.

Other fraudulent videos show the AI version of Ray claiming to run a struggling church, a farm, and even a koala rescue, each time promoting different products as a way to help these fictional charitable causes.

The real Ray, who started posting content to make people smile, said he doesn’t know how to make slippers and has never operated any of the businesses or charities featured in the fake videos.

FBI confirms criminal activity

Ronan Byrne, unit chief of the FBI’s Cyber Enabled Fraud and Money Laundering Unit, confirmed that using AI to commit fraud constitutes criminal activity.

“Yes, the use of AI images used as jokes and satire is obviously not, but using AI to commit a fraud is a crime,” Byrne said during an interview at FBI headquarters in Washington, D.C.

Byrne said the FBI receives thousands of complaints daily about AI-related fraud cases, though tracking down perpetrators presents significant challenges.

“It requires a lot of work. The technology, as well as the threat actors being overseas, creates potential challenges for the investigators,” Byrne explained.

“The scale and speed that these crimes move at now, cyber-enabled fraud in general, is extremely challenging for law enforcement.”

Our investigation revealed that the fraudulent videos direct viewers to real websites selling actual products, but under false charitable pretenses. The sites offer items including cow slippers, kitty bags, cross necklaces, and dog slippers.

To test the operation, we purchased a pair of dachshund slippers for $34.98 using a prepaid debit card.

Two weeks later, a package arrived containing slippers labeled “Made in China,” not the handmade items promoted in the fake videos.

“You know what surprises me is that you actually got something,” Ray said when shown the delivered product.

“I feel bad that you wasted $40.”

Byrne confirmed this type of scheme falls under FBI jurisdiction.

How did TikTok respond?

Despite the criminal nature of the activity, the fraudulent videos persist on social media platforms, sometimes generating hundreds of thousands of views over months.

Ray said he attempted to report the fake videos to TikTok, but the social media platform took no action.

“They come back and say, ‘We’ve investigated it and it does not violate any of our community standards.’ So they don’t do anything,” Ray said.

TikTok did not respond to our requests for comment about the scam or Ray’s situation.

Legislative efforts stalled

The case has drawn attention from lawmakers who say current laws provide insufficient protection against AI-enabled identity theft.

Senator Josh Hawley said the situation demonstrates why legal frameworks need updating.

“We’ve got to protect the rights of people and their name, their image, their likeness,” Hawley said. “We’ve got to be able to tell these platforms like TikTok, like Instagram, whomever, YouTube, you’ve got to be able to go to them and say, this is fake, you need to take it down. And if they won’t take it down, you ought to be able to sue them and get damages against them.”

Currently, social media companies face no legal repercussions for hosting such content, Hawley confirmed.

Proposed legislation like the “No AI Fraud Act,” introduced in 2024, would allow people to sue those who knowingly publish their likeness without consent, but the bill has not advanced.

Scammers adapt to countermeasures

When Ray posted videos warning his followers about the fake content, scammers quickly adapted by using AI to steal those warning messages as well.

In one instance, after Ray posted a video stating, “Someone else has been stealing the first part of my video,” scammers created an AI version that began with the same warning before transitioning into another fake charity pitch.

“This shelter is my dream come true. It breaks my heart to see my videos being used by others without asking,” the AI-generated Ray said in the manipulated video.

Ray expressed concern that the fraud could damage the trust he has built with his legitimate followers.

“Those people come on there to hear a joke, no matter how corny it is. But they hear it and they trust me. And something like that? And then they see that, and there goes the trust,” Ray said.

Ray is not the only content creator affected by this type of fraud. Investigators found multiple other social media users whose images and videos have been stolen and manipulated with AI to promote products using false charitable claims.

The FBI’s Byrne said people whose likeness is being used without permission should report incidents to the Internet Crime Complaint Center at IC3.gov and contact the platforms hosting the fraudulent content.

Warning signs for consumers

Experts identified several red flags that can help consumers identify these scams:

No specific name provided for the alleged charity, shelter, or rescue organization

Lack of contact information on associated websites

Vague or emotional appeals without verifiable details

Products promoted as “handmade” but actually mass-produced overseas

The website where we purchased the dog slippers has since been taken down, but many other sites promoted through AI-generated endorsements remain active and continue selling products.

Despite considering deleting his channel entirely, Ray decided to continue creating content for his followers while using his platform to raise awareness about AI fraud.

“All I want to do, I found this out a long time ago, is make people smile. Because if you smile for a minute, you’re going to forget all the problems you have, and I know people have problems.”

Put Your Skills to the Test

Can you spot the AI-generated images in our interactive digital game? You can play this game and more at our mAnIpulated series homepage.

Watch more from the mAnIpulated: A mIsinformAtIon nAtIon Series

September 29, 2025: TikTok Faux: How scammers use AI to imitate popular creators, sell fake products

September 12, 2025: Fashion, graphic designers say their work is being stolen, marketed with AI

September 5, 2025: AI scammers target sports fans with celebrity deepfakes

August 25, 2025: AI or Real? How you can spot real content versus AI-manipulated fakes

Anyone who believes their image or likeness is being used fraudulently, or who has purchased products based on AI-manipulated endorsements, can report the incidents to the FBI’s Internet Crime Complaint Center at IC3.gov.