Copyright Forbes

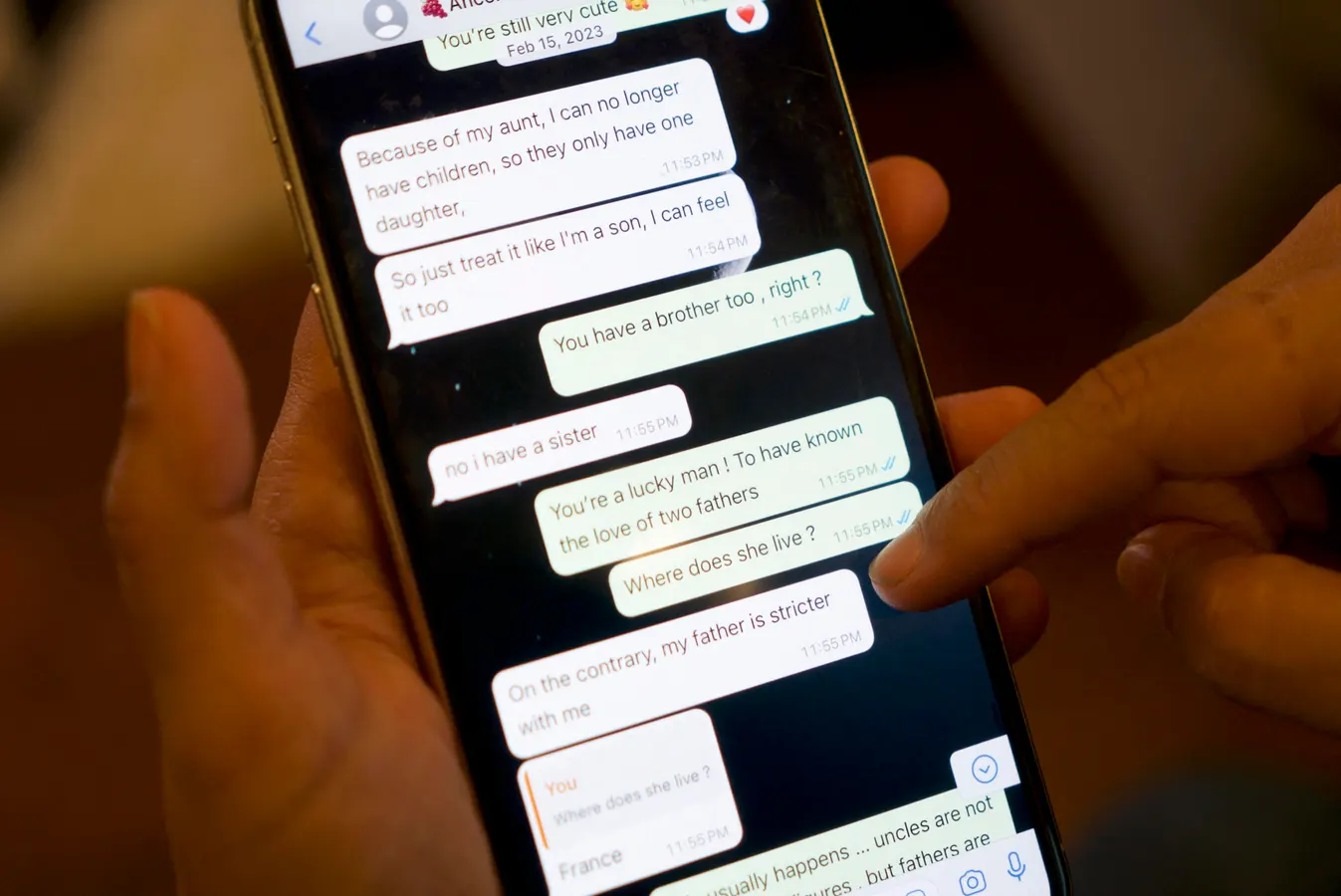

Messages that Shreya Datta, a tech professional who was a victim of an online scam known as "pig butchering," exchanged with a person who would later turn out to be a scammer are displayed on her phone in Philadelphia, Pennsylvania, on February 9, 2024. The "wine trader" wooed her online for months with his flirtatious smile and emoji-sprinkled texts. Then he went for the kill, defrauding the Philadelphia-based tech professional out of $450,000 in a cryptocurrency romance scam. The con, which drained Datta, 37, of her savings and retirement funds while saddling her with debt, involved the use of deepfakes and a script so sophisticated that she felt her "brain was hacked." (Photo by Bastien Inzaurralde/AFP via Getty Images)

Young Americans, not retirees, are now the hardest hit by texting and messaging scams, according to Consumer Reports’ recently published annual Consumer Cyber Readiness Report. That’s not the profile anyone expected. These are the so-called digital natives, raised on password hygiene and phishing awareness. Yet they’re falling for scams at record rates.

The reason is that modern scams, ranging from online extortion (or the more salaciously named sextortion) to fake job or brand ambassador offers that promise quick riches to “too good to be true” online retail deals, don’t exploit the lapses in online hygiene that older scams like phishing and password theft relied on. Instead, victims are persuaded to become willing participants, at least until the attacker makes off with their money (or, sadly, something worse in the case of sextortion).

The report’s recommendations are familiar: use password managers, turn on multi-factor authentication, install anti-tracking browser extensions. It’s sensible advice, but it doesn’t address AI-driven threats.
And if you run a business, you should be paying attention, because very similar attack techniques are being used to obtain your users’ credentials as a starting point for a breach.

The Myth of ‘Good Digital Hygiene’

For years, the security industry has told users that discipline beats deception. The problem is that, thanks to AI, scam believability has evolved at hyperspeed. The newest generation of scams isn’t about clicking a bad link. It’s about being convinced that the voice, face or text on the other end is real. Personalized, AI-driven impersonation is now cheap, convincing and scalable.

Password managers, encrypted drives and privacy extensions all help with breach prevention, but they do nothing to stop you from voluntarily handing attackers the personal information they want.

Even the Consumer Reports data shows the limits of these habits. You can’t password-manage your way out of a phone call from someone who sounds like your CEO.

Why Training Doesn’t Work

Meanwhile, a team at the University of California, San Diego, recently tested whether phishing and scam training reduces employee susceptibility. The answer was no.

Humans are pattern-recognition machines, and attackers know it. They build campaigns to exploit that predictability. Training tries to teach people to spot anomalies in a scammer’s communications. Scammers’ AI tools make those anomalies disappear, generating messages that convincingly sound like the person or institution being imitated.

For consumers, the best advice now is simple: never trust inbound communication. Hang up, look up the contact information yourself and call back through a verified number. It’s inconvenient, but it takes away the attacker’s most valuable weapon: control of the conversation.

For enterprises, that’s much harder to do.

From Text Scams to Help Desk Breaches

Everything that makes these scams effective at the consumer level works even better inside an enterprise.
Attackers have discovered that help desks, HR departments and finance teams are just as susceptible to social pressure as consumers are to fear or sympathy. When a caller impersonates a CFO and demands an urgent wire transfer, the employee knows there’s a process to follow. But under pressure, they improvise. That’s where the breach begins: not in the code, but in the exception.

Organizations love to say “follow process,” but few reward it when it causes delay. The fastest way to stop these attacks is to do the opposite: celebrate employees who slow things down. Make it a badge of honor to hold the line when something feels wrong. If a company doesn’t have a clear policy for authenticating requests that fall outside normal workflows, it’s already exposed.

The Industry’s Long Memory and Short Attention Span

Social engineering isn’t new. Kevin Mitnick, who was famously convicted of hacking into dozens of corporate computer systems, was using it decades ago. The difference is scale. Today’s scammers don’t need to con their way into a single company. They can deploy AI agents that do it thousands of times a day.

The hacking group Scattered Spider used these same tactics to breach major casinos in September 2023. Its playbook wasn’t technical genius. It was human manipulation: impersonate IT, pressure staff, gain access, escalate privileges. AI simply industrializes the technique.

What Comes Next?

Security awareness programs are fighting an outdated war. The next one will be waged in real time across voice, video and chat. Companies will need technology that can detect emotional manipulation and behavioral anomalies in communication, not just malware in email.

That’s starting to change. New platforms are, for example, training models to recognize speech patterns that indicate social pressure or deception. (Disclosure: I have invested in two companies tackling this problem, one on the corporate/help desk side and one on the consumer side.
Neither has come out of stealth yet, but I’m optimistic they and others like them will make a difference.)

These solutions are early, but they represent a shift in mindset. The problem isn’t bad passwords or lazy users. It’s that the human layer of security has become the primary attack surface.

Stay Suspicious

If you take one lesson from the Consumer Reports study, it’s this: everyone is a target, and most of the defenses you’ve been told to deploy won’t help. Scams have become a machine-learning problem, not an awareness or hygiene issue.

Until defenses catch up, the only real advice for both consumers and companies is to assume every inbound communication is a setup. Verify, delay and reward skepticism. Attackers are betting you won’t.