By Tanya Pandey

Copyright indiatimes

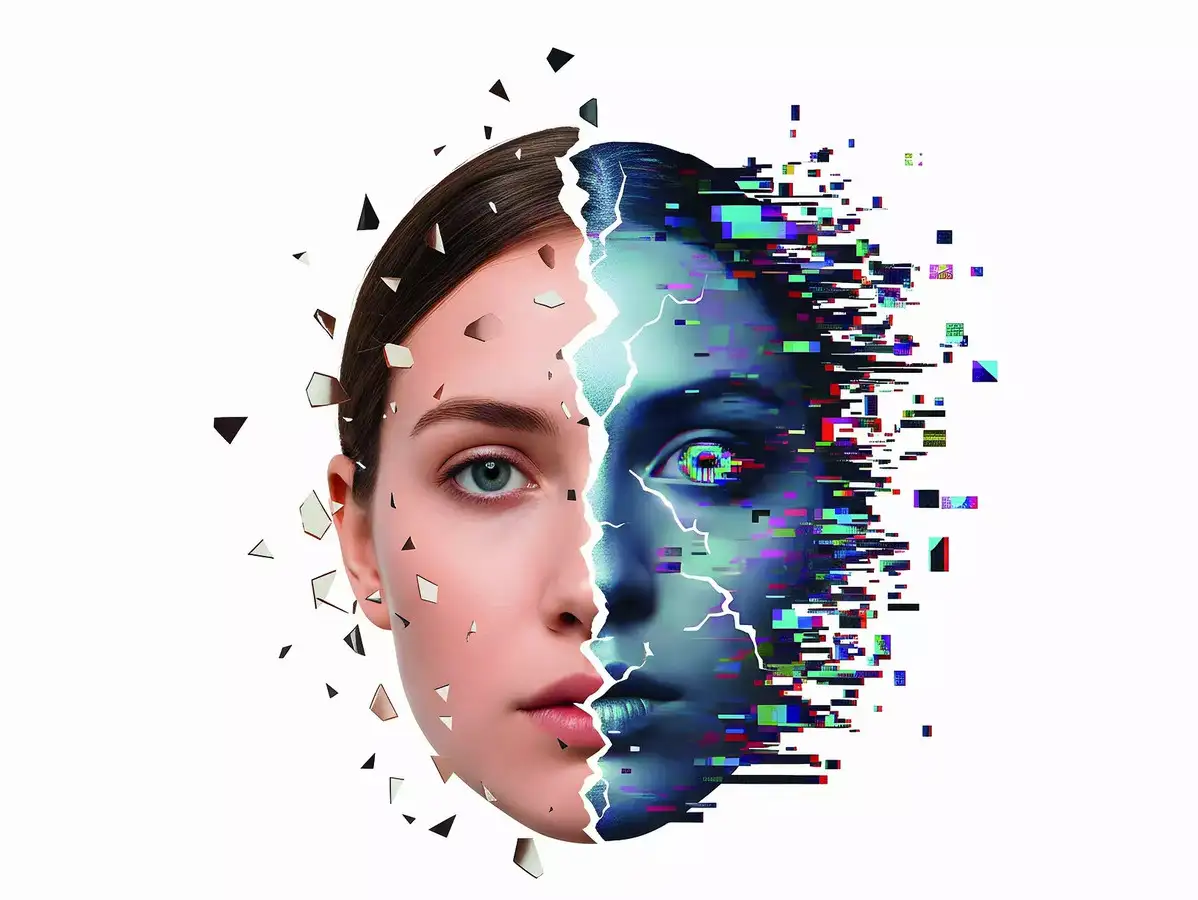

Imagine a cricketer or a film star endorsing a product without their knowledge, or a CEO urging you, in a bogus phone call, to put your hard-earned money into a fraudulent scheme. AI-generated deepfakes are increasingly fuelling fraud worldwide. Because celebrities, including politicians and business leaders, enjoy a cult following in India, experts say the country faces a heightened risk.

Karan Johar, Aishwarya Rai Bachchan, and Abhishek Bachchan have approached the courts to protect their digital likeness from unauthorised use. While Bollywood grabs the spotlight, business leaders are vulnerable too, since recordings of their voices and images are easily available from public speaking engagements at multiple forums.

As a new legal battle is fought over who owns your voice, face, and persona in the era of deepfakes and cloning, experts warn that India cannot afford to wait. Without clear regulations, public figures and ordinary individuals alike remain vulnerable to fraud, impersonation, and identity exploitation.

“Deepfakes are no longer a future threat. They’re today’s crisis. From scams to misinformation to character assassination, the damage is already visible, and India cannot afford half-measures,” said Vishal Gondal, founder and CEO of GOQii.

Courts have already shown a willingness to act. The Delhi High Court issued broad injunctions in the Aishwarya Rai Bachchan and Abhishek Bachchan cases, restricting the production and distribution of AI-manipulated images.

“Indian courts have shown that they can restrain not just the distribution but also the creation of unauthorised AI-generated content,” said Alay Razvi, managing partner at Accord Juris.

However, AI abuse is moving too fast for case-by-case litigation. According to Isheta T. Batra of TrailBlazer Advocates, judges remain wary of overreach.
“We need purpose-built legislation with surgical precision that can distinguish between malicious deepfakes and legitimate forms of expression,” she said.

The urgency, lawyers argue, extends beyond celebrities. “Protecting identity in the digital age is not just about shielding celebrities; it is about preserving the agency of every individual whose likeness can now be replicated at the click of a button,” said Batra.

Indian law is a patchwork of precedents, privacy rights, and the IT Act, none of them designed for AI. “There is no single Indian law dealing with AI likeness or digital personality rights,” said Rahul Hingmire of Vis Legis Law Practice. “Outcomes are inconsistent, and India needs a dedicated framework that defines digital likeness, requires consent for commercial exploitation, and balances these rights against free speech.”

Certain provisions already exist. Under the IT Act and the rules framed under it in 2021, intermediaries must take down sexually explicit or impersonating content within 24 to 36 hours of receiving a complaint. Beyond these categories, however, enforcement is weak. “Most current orders are focused on distribution, misappropriation, and benefitting from the use of such content,” said Arun Prabhu of Cyril Amarchand Mangaldas.

Platforms must act responsibly, with clear terms, watermarks, and prompt takedowns.

Greater accountability is the next step. One option, Batra suggested, is to extend responsibility beyond end users to the makers of AI platforms that encourage misuse. “The goal isn’t to police the entire internet, but to establish clear lines of accountability at the source,” she said.

Posthumous rights are another frontier. Should heirs control a deceased person’s likeness? Currently, personality rights cease to exist at death. Batra, however, argued that this analog-era concept is untenable in an age of digital immortality.
Families are likely to demand recognition of these rights, said Hingmire.

Celebrities are under growing pressure to secure AI likeness rights in their contracts, said Dipankar Mukherjee, co-founder of Studio Blo, a generative AI content studio. Without adequate safeguards, Mukherjee argued, they could lose control over how extensively their clones are used. Celebrities can retain ownership of their likenesses while licensing them to entities through ethical cloning platforms.

Experts say institutions must act proactively, by monitoring for deepfakes, defining specific contract terms, and building efficient takedown systems, while individuals should know how to report misuse and demand consent.

While 2025 could be remembered as India’s “zero year” for AI clones, licensed celebrity clones will appear in films and advertising campaigns as early as 2026, according to Mukherjee. Yet without legislative clarity, and with courts offering protection only case by case, celebrities and ordinary citizens alike remain vulnerable.

Identity itself is now an asset that can be bought, stolen, or retained. The question, experts believe, is not whether regulation is needed, but how quickly it arrives.