Copyright dailydot

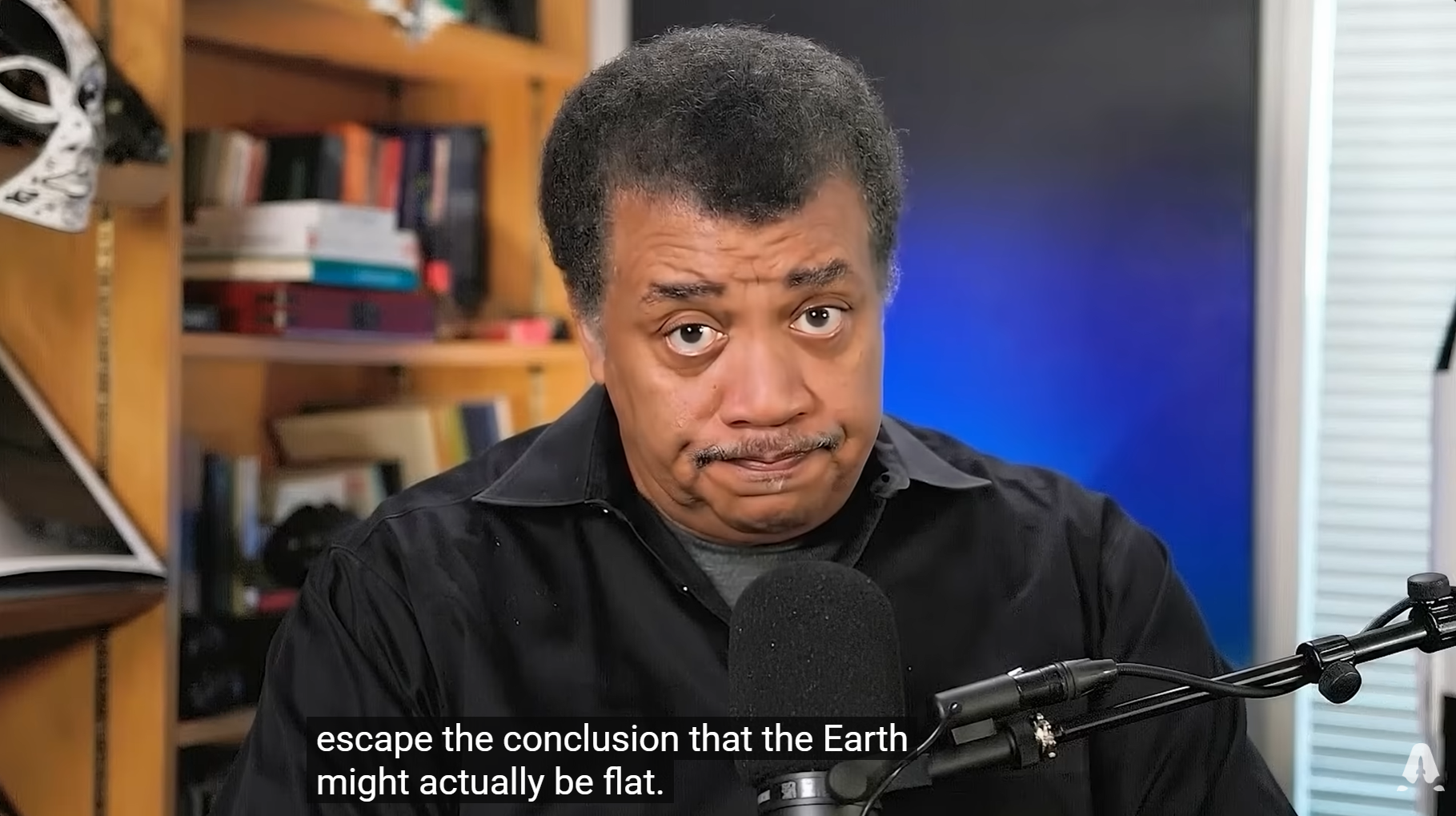

Astrophysicist Neil deGrasse Tyson’s latest StarTalk video opened like a conspiracy video. An AI-generated Tyson claimed, “Lately, I’ve been doing calculations as well as looking back at old NASA footage and raw data from satellites hovering above Earth. And I just can’t escape the conclusion that the Earth might actually be flat.”

Moments later, the real Tyson pulled his phone back and revealed the bit as a fake. “That’s not me. It was never me,” he said, calling the clip an example of synthetic media eroding public trust.

Although Tyson often joked about playful parody, like a clip that “babyified” him during a podcast, he stressed that convincing AI impersonations have real consequences. He said even friends fell for false science videos using his likeness, including one that impressed actor Terry Crews. When Crews texted him about it, Tyson remembered thinking, “I don’t remember this. I never did this.”

What Tyson and Cosoi said about deepfake tech

During the 18-minute episode, Tyson spoke with Alex Cosoi, Chief Security Strategist at Bitdefender, who defined the tech plainly: “So a deep fake […] is synthetic or manipulated media. And by media, I mean video, audio, or images which is generated with AI, artificial intelligence, to make people appear to say or do things that never actually happened in reality.”

Then, Tyson cued another fake moment featuring an “Andromedan.” A digital alien chirped, “My apologies. This form takes some getting used to,” before Cosoi explained how deep learning mimics the brain.

Tyson also noted that subtle deepfakes confused even highly observant friends. He added that clips made of him typically misrepresented his science messaging by about 15%. He emphasized that most creators probably did not intend harm, yet he worried about deception when viewers did not realize they were watching a parody.
Meanwhile, Cosoi pointed to past wartime misinformation, including crude deepfake videos of Ukrainian President Volodymyr Zelenskyy and Russian President Vladimir Putin. “They fooled some people,” Cosoi said, even though many viewers noticed flaws such as an oddly sized head.

Scams, politics, and what comes next

Tyson then turned to the broader threats, warning that deepfakes have already cost people money. Cosoi agreed, citing romance schemes, fake investment chats, and a Hong Kong case in which scammers impersonated executives in a virtual meeting and directed $25 million in transfers. “One thing leads to the other, and then you’re bankrupt,” he said.

Cosoi also described political deepfakes released right before elections, when candidates have no time to respond, in order to sway voters. He discussed AI honeypots, a tool called Scamio, and upcoming detection systems that pinpoint manipulated areas in images or flag AI-generated audio.

Tyson asked whether detection tools might one day fail. Cosoi admitted, “I believe that there may be a day when a deepfake is going to be more appealing to a person, even though a protection tool will tell him that’s fake.” Tyson suggested platform-wide permission rules like those newly announced for Sora 2, though Cosoi warned that competitors would not always cooperate.

Viewers pointed out discrepancies between the AI-generated clips and the real Tyson. @Kohlliers wrote, “You can see weird blurring of his mouth and jaw moving in the first clip, but it’s crazy how accurate it’s getting.” X user @Vayne_SA tweeted, “If you really watch Neil DeGrasse, it should’ve been strange to you how he looks that old all of a sudden, when he didn’t look that old literally a week ago. […] From the 1st minute of watching, I was questioning the legitimacy of it.”

Dr. Neil deGrasse Tyson did not immediately respond to the Daily Dot’s emailed request for comment.