Copyright jerseyeveningpost

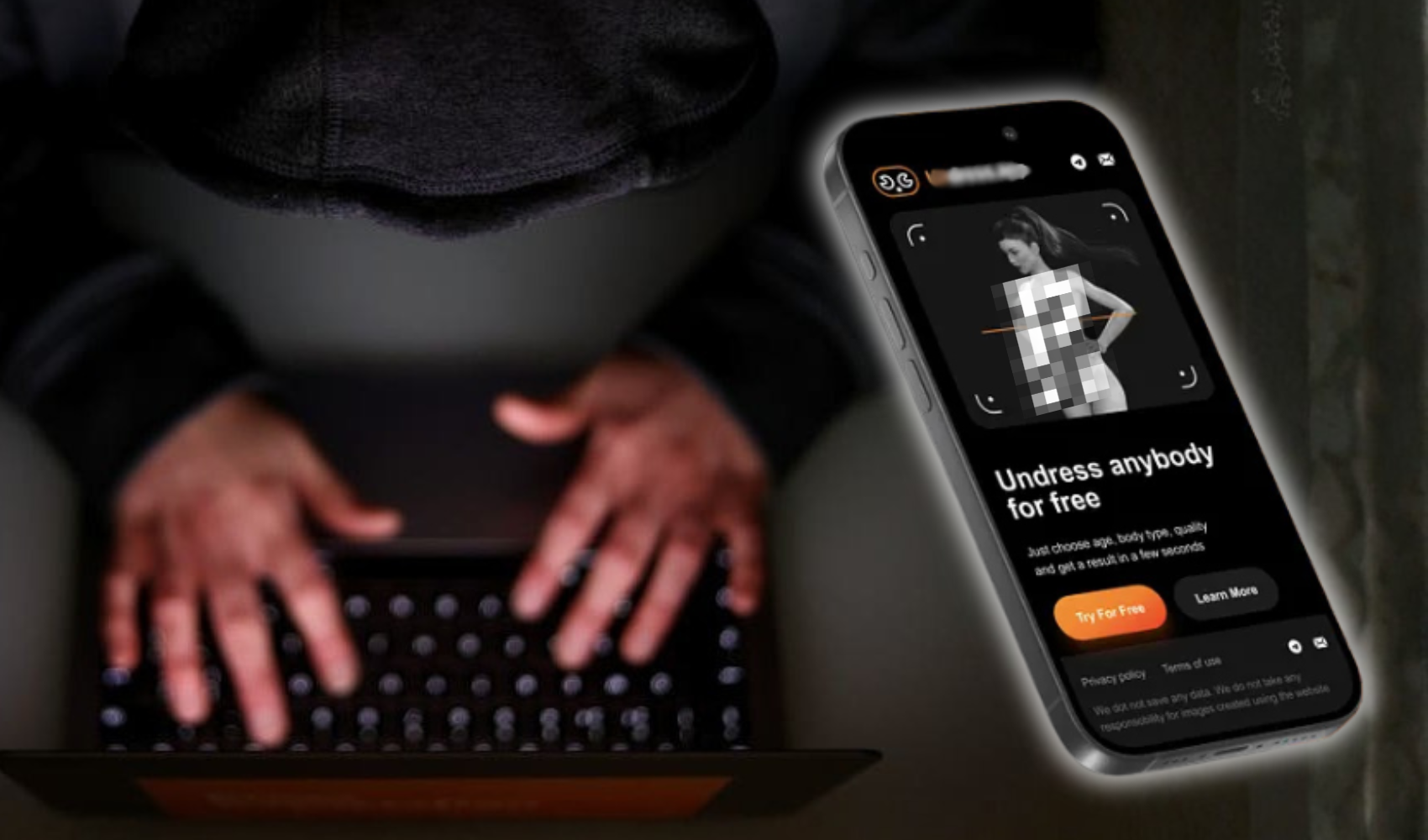

ARTIFICIAL intelligence has been used to create fake intimate images of Islanders without their consent, police have confirmed to the JEP – as the government moves to crack down on sexually explicit deepfakes.

The States Police said they had received reports of “apps being used to create deepfake imagery”, including examples where people’s faces were swapped onto other bodies or made to appear undressed. A spokesperson said: “AI being used in a negative manner can leave people in genuine distress.”

The confirmation comes as Ministers prepare to introduce new laws banning the creation or sharing of sexually explicit deepfakes – images or videos digitally altered using AI to depict a person suggestively without their consent.

Such technology has spread rapidly in recent years, with advances in machine learning making it possible for anyone with a smartphone or internet browser to create fake images using free or inexpensive “nudification” tools that are easy to find online. Creating a custom image or video on these so-called ‘nudify’ apps typically costs less than a pound. Dozens of deepfake websites now host millions of sexually explicit videos and images created using such tools, often featuring women whose faces have been taken from publicly available photographs.

In Jersey, the Government plans to address the sharing of illicit intimate images through reforms to the Sexual Offences (Jersey) Law 2018. Home Affairs Minister Mary Le Hegarat said the forthcoming legislation would specifically criminalise the possession, creation, sharing or threat to share “deepfake intimate images.” “The current draft of the intimate image abuse offences captures conduct which can include the use of artificial intelligence,” she said.
“We are committed to maintaining technology-neutral legislation that remains adaptable to emerging innovations, including AI-generated imagery and deepfakes.”

Deputy Le Hegarat said officers in Justice and Home Affairs, the Police Forensics Unit and the Digital Economy team were working to ensure Jersey’s laws “provide protection against the use of AI to commit image-based abuse.”

The proposals – expected to be put forward later this year or early next year – will also cover the non-consensual taking or sharing of intimate images or videos, threats to share such material, and the unsolicited sending of pornographic content.

It comes as a report into online harms is due to be published next week. The review, launched in February by the Children, Education and Home Affairs Panel, has examined how young people are protected from online risks and whether Jersey’s legislation meets international obligations under the UN Convention on the Rights of the Child.

Meanwhile, the Children, Young People, Education and Skills Department has confirmed that an online harms website will launch in December to support parents with scenarios and issues they experience in relation to online safety.

Kate Wright, chief executive of Freeda and chair of the Violence Against Women and Girls Taskforce, said that online and technology-facilitated abuse had already emerged as “a significant category of concern” during the group’s research carried out in 2023. She added that training was now being delivered to frontline workers as a result, and that the next phase would focus on “how tech and AI are influencing attitudes, especially among young people, and making sure our prevention efforts stay ahead of the curve.”

Today, the JEP launches a three-part Artificial Realities series investigating the real-life impacts of AI in Jersey. Read part one, ‘Artificial Indecency’, on pages 8 and 9 of today’s newspaper.