Copyright SiliconANGLE News

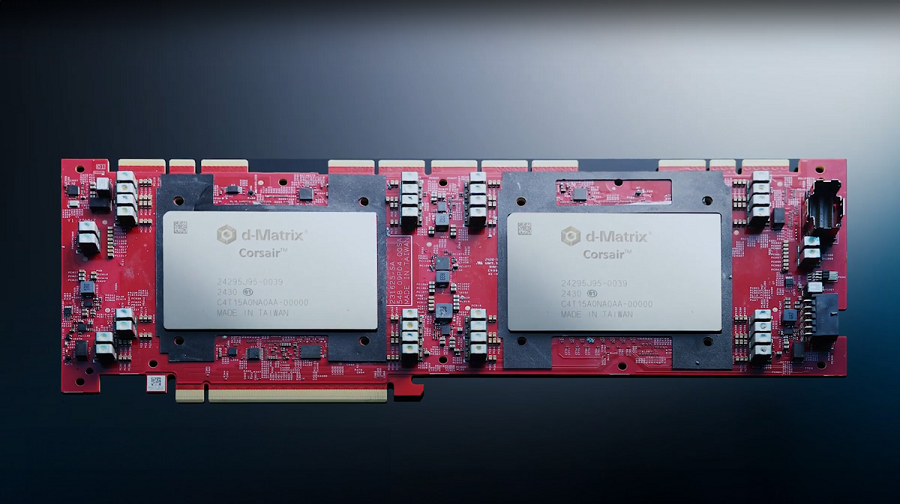

Chip startup d-Matrix Inc. today disclosed that it has raised $275 million in funding to support its commercialization efforts. The Series C round was led by Bullhound Capital, Triatomic Capital and Singapore’s Temasek sovereign wealth fund. The investment values d-Matrix at $2 billion. It will use the capital to grow its international presence and help customers build artificial intelligence clusters based on its technology.

A graphics card comprises two main components: transistors that process data and a memory pool in which the data being processed is stored. That memory is usually based on HBM, a variety of RAM that can load data into transistors at high speed. It comprises multiple layers of RAM circuits, or cells, that are stacked atop one another.

Santa Clara-based d-Matrix has developed an inference accelerator called Corsair that features a different architecture. Instead of using separate processing and memory components like a graphics card, it embeds processing components into the memory. That approach, which d-Matrix calls digital in-memory compute, is touted as faster and more power-efficient than traditional HBM-based architectures.

Corsair takes the form of a PCIe card that companies can attach to their servers. The card contains two custom chips that implement d-Matrix’s digital in-memory compute technology. Corsair’s two chips each contain 1 gigabyte of SRAM, a type of RAM that is optimized for speed similarly to HBM. SRAM is most commonly used to power processors’ small, highly performant embedded cache. According to d-Matrix, its engineers have repurposed some of Corsair’s SRAM circuits to carry out vector-matrix multiplications. Those are mathematical calculations that AI models use to perform inference.

Corsair’s custom chips also contain other components besides SRAM. There’s a control core, a central processing unit core based on the open-source RISC-V architecture that coordinates the chips’ work.
Some calculations that can’t be performed efficiently by d-Matrix’s modified SRAM are relegated to SIMD cores, processing modules optimized to perform multiple calculations in parallel. The chips’ internal components are linked together by a custom interconnect called DMX Link.

According to d-Matrix, there’s also a 256-gigabyte memory pool that can be used to store AI models’ data when SRAM capacity runs out. The memory pool is based on LPDDR5, a low-power RAM variety that is mainly found in mobile devices.

Corsair can store AI models’ information in block floating point formats. Those are data structures that require less space than the standard data formats used by graphics cards. According to d-Matrix, Corsair can perform 9,600 trillion calculations per second when processing data stored in the MXINT4 block floating point format.

The company offers the accelerator alongside a NIC, or network interface card, called JetStream. It’s a networking chip that can be used to link together multiple Corsair-equipped servers into an AI inference cluster. Data center operators can deploy JetStream modules by attaching them to the switches inside their server racks.

Last month, d-Matrix released a reference architecture called SquareRack that makes it easier for customers to create Corsair-powered AI clusters. It supports up to eight servers per rack that each hold eight Corsair cards. A single SquareRack enclosure can run AI models with up to 100 billion parameters entirely in SRAM, an arrangement that d-Matrix says provides up to 10 times the performance of HBM-based chips.

The company ships Corsair with a software stack called Aviator. It automates some of the work involved in deploying AI models on the accelerator. According to d-Matrix, Aviator also includes a set of model debugging and performance monitoring tools.

The company plans to follow up Corsair with a new, more capable inference accelerator called Raptor next year.
It will reportedly place RAM directly atop its compute modules in a three-dimensional configuration. Stacking memory cells on a chip instead of placing them further away in a different section of the host server reduces the distance that data must travel, which in turn speeds up processing.

Raptor will also introduce other enhancements. Notably, d-Matrix plans to replace the six-nanometer manufacturing technology on which Corsair is based with four-nanometer technology. Process upgrades usually bring speed and power efficiency improvements.
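
For readers curious about why block floating point formats such as the one Corsair uses take up less space, the general idea can be sketched in a few lines of Python. This is a generic illustration, not d-Matrix's MXINT4 specification: the function names, the 4-bit mantissa width, the 8-bit shared exponent and the 16-value block size are all assumptions chosen for the example.

```python
import math


def bfp_quantize(block, mantissa_bits=4):
    """Quantize a block of floats to small signed integers that share one
    power-of-two exponent (generic block floating point, assumed layout)."""
    q_max = 2 ** (mantissa_bits - 1) - 1  # largest mantissa, 7 for 4 bits
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return 0, [0] * len(block)
    # Smallest exponent such that the largest value still fits in q_max.
    exponent = math.ceil(math.log2(max_abs / q_max))
    scale = 2.0 ** exponent
    # Round each value to the nearest mantissa, clamping as a safeguard.
    mantissas = [max(-q_max, min(q_max, round(x / scale))) for x in block]
    return exponent, mantissas


def bfp_dequantize(exponent, mantissas):
    """Recover approximate floats from the shared exponent and mantissas."""
    scale = 2.0 ** exponent
    return [m * scale for m in mantissas]


def bfp_bits(num_values, mantissa_bits=4, exponent_bits=8):
    """Storage for one block: per-value mantissas plus one shared exponent.
    The 8-bit exponent is an assumption made for this sketch."""
    return num_values * mantissa_bits + exponent_bits


exponent, mantissas = bfp_quantize([0.5, -1.5, 3.5, 0.0])
restored = bfp_dequantize(exponent, mantissas)
```

Under these assumptions, a block of 16 values occupies 72 bits, compared with 256 bits for the same values stored as 16-bit floating point numbers. The trade-off is precision: every value in a block is rounded to a multiple of the same shared scale.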