Copyright Inquisitr

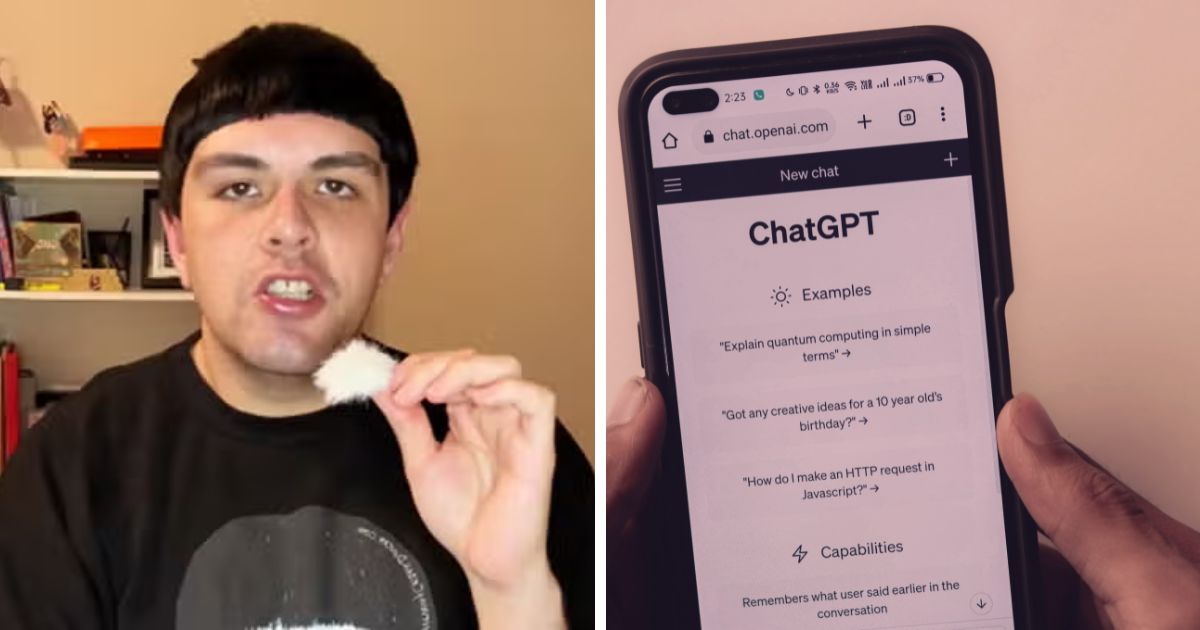

Artificial intelligence (AI) has taken the world by storm. With reports that it could replace jobs and make everyday tasks such as trading, building a PowerPoint presentation and countless other activities easier, incorporating AI platforms like ChatGPT into daily life is the new normal in 2025. Yet, like every other human creation, it has its drawbacks.

Recently, a TikTok creator went viral after sharing what he describes as a battle with “AI psychosis,” a condition he claims emerged after months of compulsive interaction with ChatGPT, which he says deepened his mental health struggles and fueled paranoid delusions. Anthony Cesar Duncan (@anthonypsychosissurvivor) posted a video about his experience on November 2, 2025. It has received over 1.3 million views.

For those who are unfamiliar, ChatGPT is a chatbot developed by OpenAI. It generates human-like text in response to user prompts and can respond to almost any request, from drafting a resignation letter to writing a love letter. It can also help users write code, simplify complex terms, and offer fashion tips, the latest gossip and relationship advice.

Keith Sakata, MD (@KeithSakata), wrote on August 11, 2025: “I’m a psychiatrist. In 2025, I’ve seen 12 people hospitalized after losing touch with reality because of AI. Online, I’m seeing the same pattern. Here’s what ‘AI psychosis’ looks like, and why it’s spreading fast: 🧵” pic.twitter.com/YYLK7une3j

Duncan said his relationship with ChatGPT began in May 2023, when he started relying on the chatbot for daily decisions. “I was talking to ChatGPT nonstop,” he explained. “I used it for everything, simple, everyday choices.” The line between reality and the virtual world seemed to blur as Duncan’s dependency on the AI platform increased day by day. It led to isolation from his family members as ChatGPT’s manipulative responses to Duncan’s questions grew more frequent.
He said the AI confirmed his distorted thoughts, including that his allergy medication enhanced “psychic abilities” and that he was a “shape-shifting federal agent.” “None of it was real,” Duncan said. “They were delusions. But my interactions with ChatGPT made me feel validated, like those delusions were true.” His mental state worsened by June 2025 after he experienced an episode. Eventually, he entered psychiatric care and later moved back to his home state to recover while living with his mother.

According to Wikipedia, “chatbot psychosis,” also referred to as “AI psychosis,” describes a phenomenon in which individuals develop or experience worsening psychotic symptoms in connection with their use of chatbots. It can also stem from social media addiction and low self-esteem, which can hamper an individual’s trust in their own judgment.

As soon as Duncan shared his story, TikTok users expressed both sympathy and concern. Many said Duncan’s story highlights the risks of unregulated AI use, especially for people with existing mental health issues. “The point isn’t that ChatGPT causes psychosis,” one commenter wrote. “It’s that it can worsen or trigger it faster than most things we’ve ever seen.” Another user said, “As someone with OCD, this is why I’ve avoided AI.” “I also went down a similar path,” a third user admitted. “Luckily, I pulled away before it got worse, but ChatGPT isn’t safe.”

In a world where addiction to the virtual world has led to an increase in both crime and behavioral issues, real-life examples like Duncan’s story come as a stark warning not to let artificial intelligence consume one’s entire identity.

Jens Honack (@JensHonack) wrote on June 11, 2025: “ChatGPT is literally poisoning your brain. 99% of users are unaware of being trapped in a vicious, invisible loop that corrupts their thinking. Here’s how to stop it before it’s too late: 🧵” pic.twitter.com/TeAoyDWN0K

It’s important to remember that no amount of social experimentation or AI creation can replace human intelligence. It is one of the most complex and beautiful creations of the Almighty. Mental health professionals and users alike are calling for deeper research and stricter regulations as artificial intelligence becomes increasingly embedded in daily life.

What most people do not understand is that platforms like ChatGPT or Gemini are not on anyone’s side; they simply do their job, which is to answer your question and function effectively. They are trained to “mimic how humans try to manipulate each other” by pattern-matching, and they respond to the emotional content of prompts accordingly. So be smart, folks, and do not let AI fool you: it is only doing what it is supposed to do.