Copyright PetaPixel

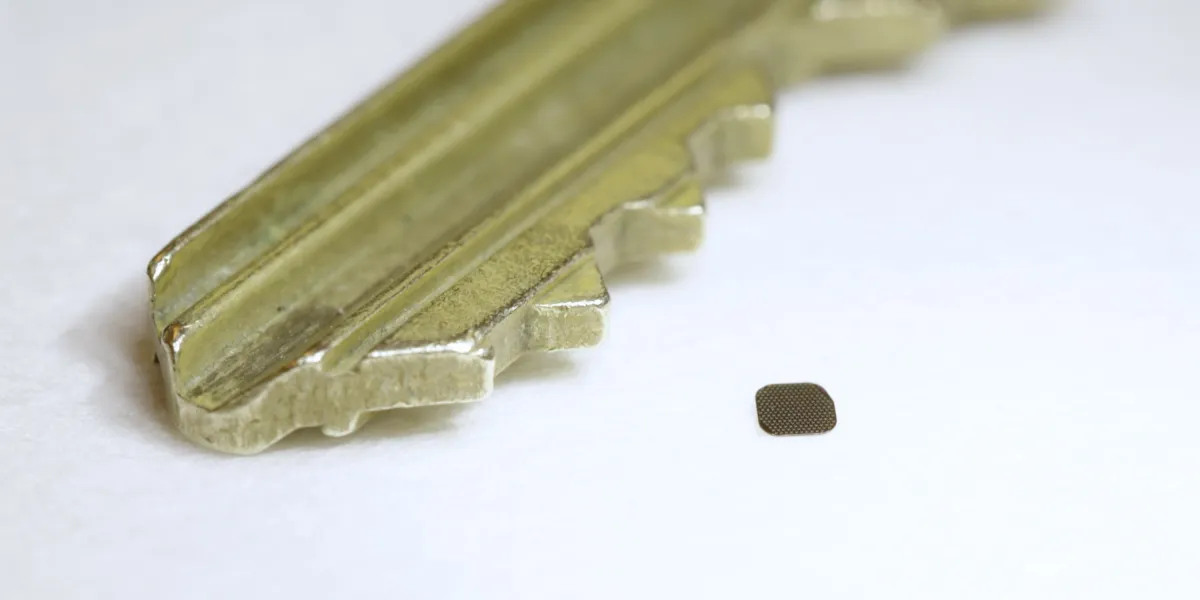

Smartphones have fundamentally changed photography, enabling people with no camera skill to shoot acceptable images. That is largely down to computational photography, which smartly manipulates the sensor so that shadows and highlights are both visible in the same shot.

In a recent Columbia News article, Professor Shree Nayar, head of the Columbia Imaging and Vision Laboratory, explains how in the late 1990s he and his team “wanted to explore whether we could design cameras that get closer to capturing all the details of the real visual world than traditional cameras, and better even than our eyes.”

Expose for Shadows, or Highlights?

Since photography was invented, one of the central challenges has been exposure balance: capturing scenes that contain both bright and dark areas. Traditional cameras have always forced photographers to choose between preserving highlights and retaining shadow detail. Nayar’s breakthrough, developed with Sony researcher Tomoo Mitsunaga, offered an elegant solution.

Their design used an image sensor composed of “assorted pixels,” meaning that neighboring pixels on the sensor were exposed differently to light. When one pixel was under- or overexposed, an algorithm could analyze its neighbors to determine the correct color and brightness for that area. The outcome was a single, detailed image that retained information across the full range of tones.

The key innovation was that it worked in a single exposure. Earlier HDR methods required taking multiple shots at different exposures and combining them afterward, a process that often introduced motion blur or “ghosting” because of the time that passed between frames. By capturing all exposure information simultaneously, Nayar’s approach eliminated these issues. Sony recognized the potential of the technology and commercialized it, integrating it into its image-sensing chips.
Those sensors now appear in smartphones such as the iPhone and Google Pixel, as well as in tablets and surveillance cameras. The technology’s ubiquity means that most people benefit from it daily without realizing it.

Computational imaging captures optically coded images that are later decoded algorithmically to produce richer and more detailed representations. Nayar’s lab has applied this philosophy to develop omnidirectional 360-degree cameras and 3D depth sensors, which have become vital in fields such as robotics, factory automation, augmented reality, and visual effects.

In recent years, Nayar and his team have expanded on the assorted pixel concept to design sensors capable of capturing more complex color data and even identifying material properties, such as whether a surface is metal, plastic, or fabric. He expects these advances to appear in consumer products in the near future.

“As academics, it’s very hard for us to get our ideas out of the lab and into the hands of everyday users, and that’s what I’m proud of,” Nayar says.

A pioneer in imaging education, Nayar created the Bigshot camera to inspire children to explore photography and the science behind it. In 2021, he released an online lecture series, First Principles of Computer Vision, which has reached millions of viewers.
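
For readers curious how the “assorted pixels” idea described earlier might work in practice, here is a minimal Python sketch. It is an illustration under stated assumptions, not Nayar and Mitsunaga's actual algorithm or anything Sony ships: the 2x2 exposure pattern, the `LOW` and `HIGH` reliability thresholds, and the function names `capture` and `reconstruct` are all hypothetical, and it handles brightness only, not color. Each pixel records the scene at its own exposure; any pixel that comes out too dark or saturated is filled in from the average of its reliable neighbors.

```python
# Hypothetical 2x2 exposure pattern tiled across the sensor (an assumption,
# not the layout of any real sensor).
EXPOSURES = [[1.0, 0.25],
             [0.25, 0.0625]]
LOW, HIGH = 0.02, 0.98  # readings outside this range are treated as unreliable


def exposure_at(row, col):
    """Exposure factor of the pixel at (row, col)."""
    return EXPOSURES[row % 2][col % 2]


def capture(radiance):
    """Simulate one shot: scale each pixel by its exposure, then clip to [0, 1]."""
    return [[min(1.0, max(0.0, value * exposure_at(r, c)))
             for c, value in enumerate(line)]
            for r, line in enumerate(radiance)]


def reconstruct(raw):
    """Estimate scene brightness, borrowing from neighbors where a pixel is clipped."""
    height, width = len(raw), len(raw[0])

    def estimate(r, c):
        # Brightness estimate, or None if the reading is too dark or saturated.
        value = raw[r][c]
        if LOW <= value <= HIGH:
            return value / exposure_at(r, c)
        return None

    result = []
    for r in range(height):
        out_row = []
        for c in range(width):
            own = estimate(r, c)
            if own is None:
                # Collect estimates from the surrounding 3x3 window.
                neighbors = [estimate(rr, cc)
                             for rr in range(max(0, r - 1), min(height, r + 2))
                             for cc in range(max(0, c - 1), min(width, c + 2))
                             if (rr, cc) != (r, c)]
                valid = [n for n in neighbors if n is not None]
                if valid:
                    own = sum(valid) / len(valid)
                else:
                    # No reliable neighbor: fall back to the clipped reading.
                    own = raw[r][c] / exposure_at(r, c)
            out_row.append(own)
        result.append(out_row)
    return result


if __name__ == "__main__":
    bright_scene = [[3.0] * 4 for _ in range(4)]  # brighter than a full-exposure pixel can record
    raw = capture(bright_scene)
    print(raw[0][0])               # the full-exposure pixel saturates
    print(reconstruct(raw)[0][0])  # recovered from its less-exposed neighbors
```

In this toy scene, a conventional sensor with every pixel at full exposure would clip the whole frame, while the mixed-exposure sensor still has neighbors that recorded usable values in the same shot.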