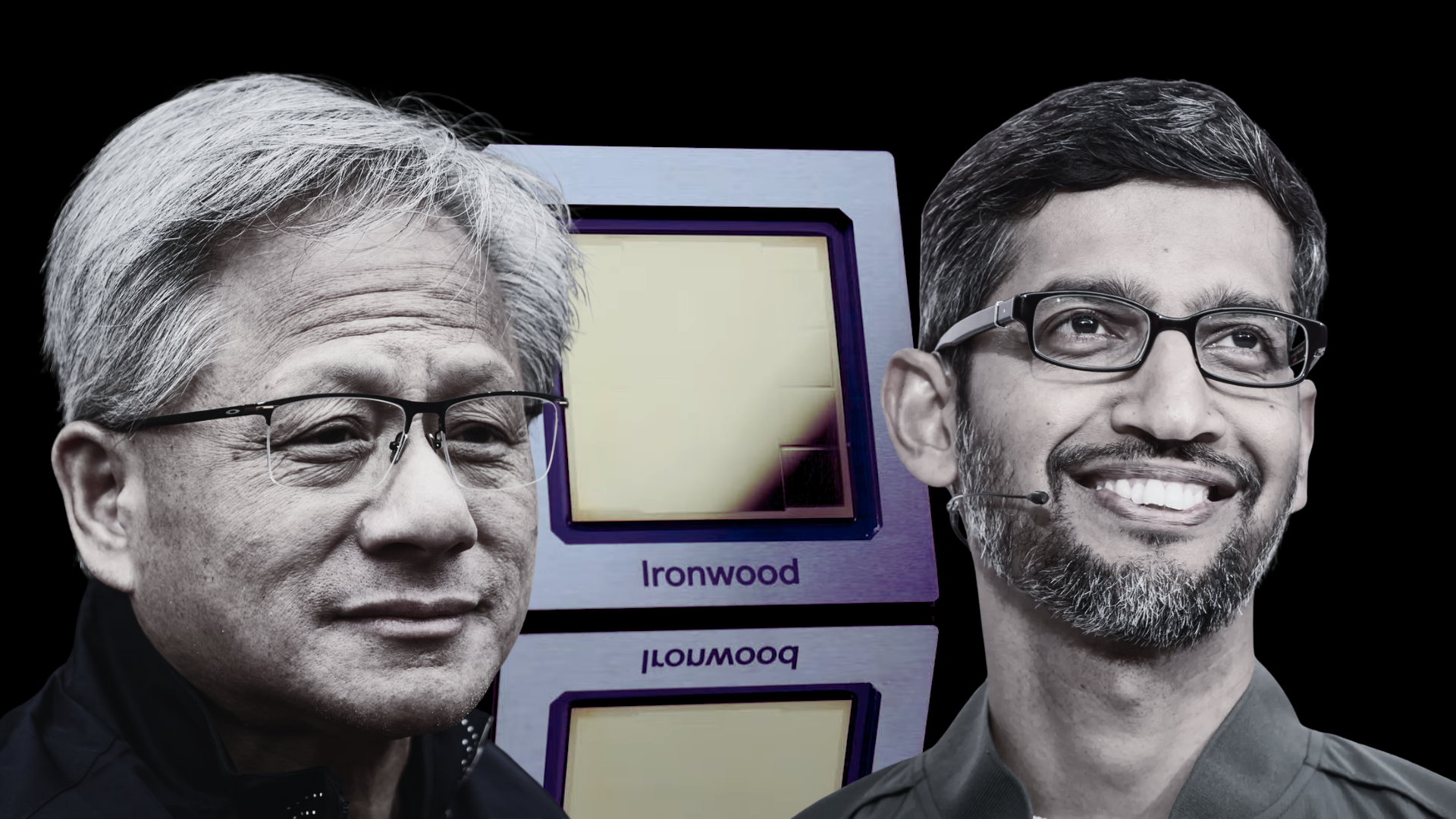

Copyright Wccftech

NVIDIA's most formidable competition in the AI industry is not currently AMD or Intel, but rather Google, which is catching up in the race. Interestingly, NVIDIA's CEO, Jensen Huang, is already aware of it.

This might seem a bit surprising at first, but Google is one of the earliest competitors in the race for AI hardware, having introduced its very first custom AI chip, the TPU, back in 2016, way earlier than AMD, NVIDIA, and Intel. The tech giant introduced its newest 7th-generation Ironwood TPUs last week, which took the industry by storm and solidified the idea of 'NVIDIA vs Google' as the most competitive AI race. We'll discuss in depth why we say this, starting with how Google's latest Ironwood TPUs compare to NVIDIA's offerings.

Google's Ironwood TPUs: Massive 192 GB HBM With Significant Improvements In Generational Performance

Let's talk about Google's Ironwood TPUs, which are now expected to be available across workloads in the coming weeks. The firm labels the chip as an 'inference-focused' option, claiming that it will bring in a new era of inferencing performance across general-purpose compute. The TPU v7 (Ironwood) is positioned to capitalize on the shift from model training to inference, which is why its specifications are designed to ensure it excels in the "age of inference". Here are some key features:

- 10X peak performance improvement over TPU v5p.
- 4X better performance per chip for both training and inference workloads compared to TPU v6e (Trillium).
- Most potent and energy-efficient custom silicon built by Google to date.

Diving into the specifications of the Ironwood chip, Google has disclosed that each chip carries 192 GB of HBM with 7.4 TB/s of bandwidth and delivers a massive 4,614 TFLOPS of peak compute, which is roughly a 16x increase compared to TPU v4.
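To put the per-chip figures quoted above in context, here is a minimal Python sketch that derives two back-of-the-envelope ratios from them: how many peak FLOPs are available per byte streamed from HBM, and how long one full pass over the 192 GB of HBM would take at peak bandwidth. The function names are hypothetical, and the calculation assumes decimal units and ideal peak rates, so treat the output as rough guidance rather than measured behavior.

```python
# Per-chip figures as quoted in the article (peak values, decimal units assumed).
PEAK_TFLOPS = 4614        # peak compute per chip, in TFLOPS
HBM_CAPACITY_GB = 192     # HBM capacity per chip, in GB
HBM_BANDWIDTH_TBPS = 7.4  # HBM bandwidth per chip, in TB/s


def flops_per_byte(peak_tflops: float, bandwidth_tbps: float) -> float:
    """Peak FLOPs available for each byte streamed from HBM."""
    # Both values are in units of 1e12 per second, so the scale cancels.
    return peak_tflops / bandwidth_tbps


def full_hbm_sweep_ms(capacity_gb: float, bandwidth_tbps: float) -> float:
    """Milliseconds needed to read the entire HBM once at peak bandwidth."""
    bandwidth_gb_per_s = bandwidth_tbps * 1000  # 1 TB/s = 1000 GB/s
    return capacity_gb / bandwidth_gb_per_s * 1000  # seconds -> milliseconds


if __name__ == "__main__":
    ratio = flops_per_byte(PEAK_TFLOPS, HBM_BANDWIDTH_TBPS)
    sweep = full_hbm_sweep_ms(HBM_CAPACITY_GB, HBM_BANDWIDTH_TBPS)
    print(f"Peak FLOPs per HBM byte: {ratio:.1f}")
    print(f"Full HBM sweep at peak bandwidth: {sweep:.2f} ms")
```

Under these assumptions, a workload that performs far fewer FLOPs per byte fetched than the first ratio would likely be limited by memory bandwidth rather than compute, which is one reason memory figures matter so much for inference.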
More importantly, with Ironwood's TPU SuperPod, the firm brings in 9,216 chips per pod, resulting in a cumulative 42.5 exaFLOPS of aggregate FP8 compute. The SuperPod's chip count shows that Google has an effective interconnect solution onboard, one that has actually managed to surpass NVLink in terms of scalability.

Speaking of interconnect, Google employs the Inter-Chip Interconnect (ICI), a scale-up network. This network enables them to go all the way with 43 blocks (each block consists of 64 chips) of SuperPods connected via a 1.8 Petabytes network. Internal communications are handled using a range of NICs, and Google utilizes a 3D Torus layout for its TPUs, which enables high-density interconnect across large numbers of chips. Compared to NVLink, scalability and interconnect density are where Google wins, which is why SuperPod is positioned to be a disruptive offering.

Google's ASIC Ambitions: Are They Truly 'Fatal' For NVIDIA's AI Dominance?

Let's examine why Ironwood TPUs are expected to be significant in the age of inference. But before that, it's important to note why 'thinking models' are the next big thing. Model training has been the dominant trend in the AI industry, which is why NVIDIA's compute portfolio was the primary option for Big Tech, as it offered better performance across scenarios that suited training environments. However, since mainstream models are now already deployed, the number of inference queries can vastly exceed the number of training tasks.

In inferencing, it's not just about getting the most 'TFLOPS' out there; instead, other metrics become pivotal, such as latency, throughput, efficiency, and cost per query. That is why, when you look at what Google offers with Ironwood, the idea of 'Google surpassing NVIDIA in the AI race' becomes a lot more evident. Firstly, Ironwood features massive on-package memory, equivalent to that of NVIDIA's Blackwell B200 AI GPUs.
However, when you factor in the SuperPod cluster having 9,216 chips in a single environment, the total memory capacity available becomes significantly larger. Higher memory capacity is critical for inference, as it reduces inter-chip communication overhead and improves latency for large models, which is one of the reasons why Ironwood is a more attractive option.

Ironwood's architecture is explicitly designed for inference, which means that Google has specifically focused on ensuring low latency backed by high power efficiency. We'll talk about power next, as it is the 'second-most' important factor behind Ironwood's potential success.

Inference at hyperscale means that you need thousands of chips to service inference queries, all within an environment that operates 24/7. Once inference comes in, CSPs tend to focus more on deployment and running costs than on raw performance. This is why, with Ironwood, Google has achieved 2x higher power efficiency compared to previous generations, making the deployment of Google's TPUs across inference workloads a more sensible move.

The race in AI is changing from "who has more flops" to "who can serve more queries, with lower latency, at lower cost, and with less power", which has opened up a new competition axis for NVIDIA that Google is looking to capture early on. More importantly, Ironwood is said to be offered exclusively under Google Cloud, which could create an ecosystem lock-in, a potentially fatal blow to Team Green's long-standing AI dominance. There's no doubt that Google's TPUs are proving to be more competitive with each iteration, and this should 'ring bells' within NVIDIA's camp.

Sure, NVIDIA isn't staying silent with the dawn of inferencing, since with Rubin CPX, the firm plans to offer a 'sweet spot' with Rubin's rack-scale solutions, but it's becoming evident with time that Google is positioning itself as the 'true rival' to NVIDIA, with Intel and AMD lagging behind for now.
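As a sanity check on the pod-level figures quoted earlier in this article, the following Python sketch multiplies the per-chip numbers (4,614 TFLOPS and 192 GB of HBM) by the 9,216 chips in a SuperPod. The function names are hypothetical and the conversion assumes decimal units, so this is only a rough reconstruction of the arithmetic, not Google's own methodology.

```python
# Figures as quoted in the article; decimal unit conversions are an assumption.
CHIPS_PER_POD = 9216
PEAK_TFLOPS_PER_CHIP = 4614
HBM_GB_PER_CHIP = 192


def pod_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate peak compute of a pod in exaFLOPS (1 exaFLOPS = 1e6 TFLOPS)."""
    return chips * tflops_per_chip / 1_000_000


def pod_hbm_petabytes(chips: int, gb_per_chip: float) -> float:
    """Aggregate HBM capacity of a pod in petabytes (1 PB = 1e6 GB)."""
    return chips * gb_per_chip / 1_000_000


if __name__ == "__main__":
    compute = pod_exaflops(CHIPS_PER_POD, PEAK_TFLOPS_PER_CHIP)
    memory = pod_hbm_petabytes(CHIPS_PER_POD, HBM_GB_PER_CHIP)
    print(f"Aggregate peak compute: {compute:.1f} exaFLOPS")
    print(f"Aggregate HBM capacity: {memory:.2f} PB")
```

The compute result lines up with the 42.5 exaFLOPS quoted for the SuperPod. The aggregate HBM comes out at roughly 1.77 PB, which is close to the 1.8 Petabytes figure mentioned alongside the interconnect, although the article does not state that the two numbers refer to the same thing.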
Oh, and here's what Jensen has said about Google's TPUs in the past, on the BG2 podcast. He certainly knows that Google's custom silicon is a competitive offering:

To that point … one of the biggest key debates … is this question of GPUs versus ASICs, Google’s TPUs, Amazon’s Trainium. Google… They started TPU1 before everything started. … The challenge for people who are building ASICs. TPU is on TPU 7. Yes. Right. And it’s a challenge for them as well. Right. And so the work that they do is incredibly hard