Copyright ABC17News.com

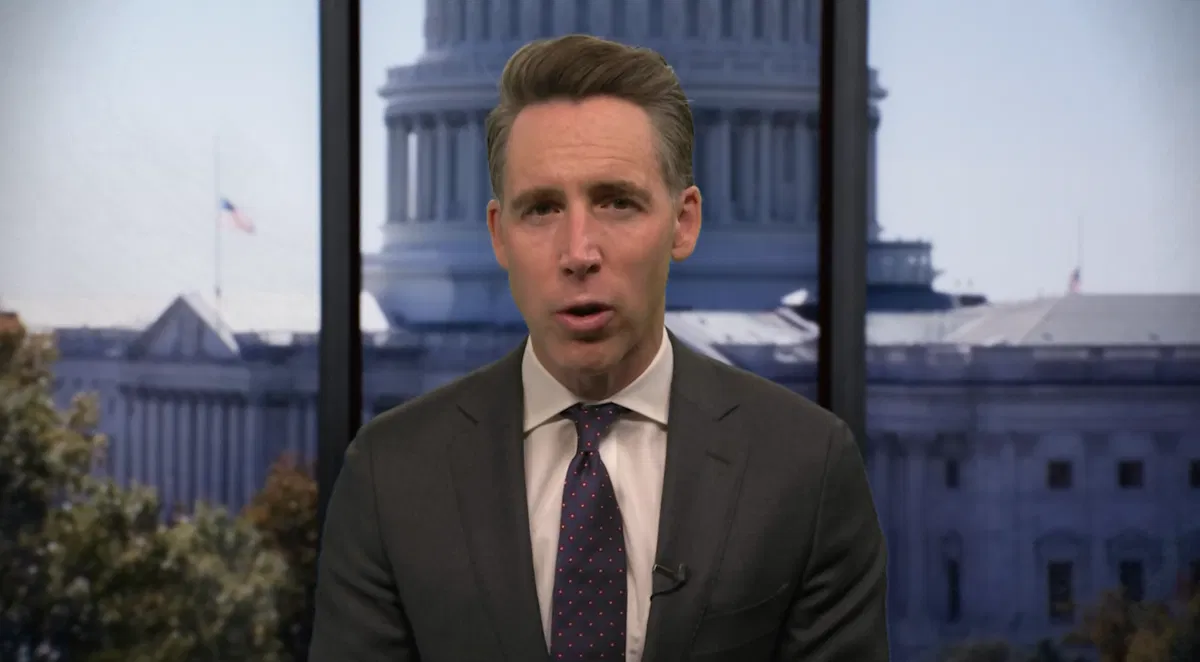

COLUMBIA, Mo. (KMIZ) As artificial intelligence technology continues to expand into health care, growing concerns are emerging over the use of AI-powered chatbots as substitutes for licensed therapists, particularly among young users.

Research from the National Library of Medicine shows nearly 50% of people who could benefit from therapy are unable to access it due to the high cost and scarcity of services. AI therapy chatbots powered by large language models have been touted as a low-cost and accessible alternative. But some mental health experts and lawmakers warn these tools could bring unintended and potentially dangerous consequences.

“It's definitely a growing trend, especially in the adolescent population, because in general, the teenage/adolescent population is more likely to adopt new trends and new technology,” said Dr. Arpit Aggarwal, a psychiatrist with MU Health Care. “I would say it is an increasing concern.”

Many Americans still lack the physical or financial resources to receive the care they need. A 2024 study from the National Center for Health Workforce Analysis found the United States faces a worsening mental health crisis, with significant shortages projected across nearly all behavioral health professions by 2037.

More than one-third of Americans (122 million people) live in areas with inadequate access to mental health professionals, according to the report, with rural counties being especially underserved.

Researchers at Stanford University found that while AI therapy chatbots powered by large language models may increase accessibility, they can also introduce biases or harmful outputs that make them unreliable for those in crisis.

Aggarwal said that despite their popularity, AI systems are not capable of replacing human clinicians.

“It's not at a place yet where it's ready to replace a professionally trained, in-person therapist,” Aggarwal said. “That's why it's a concern, because that's not the message a lot of people are getting. It might be a good tool to start your process with, but it cannot replace a human therapist.”

Aggarwal added the issue is being widely discussed among professionals.

“I was just at a recent conference, which is the biggest conference for child psychiatrists in America. It's called the American Academy of Child and Adolescent Psychiatry,” he said. “A lot of psychiatrists and therapists shared the same concerns, which means it's really prevalent all over North America.”

U.S. Sen. Josh Hawley (R-MO) has been one of the most outspoken critics of AI and has called for tighter regulation of how AI interacts with young users.

“What's happening is a lot of AI chatbots are talking to young kids, kids that are under the age, let's say, of 18,” Hawley told ABC 17 News. “These chatbots they're posing as priests, they're posing as counselors. They are none of these things. They're not even human, and these chatbots are encouraging the kids to commit self-harm or to take their own lives. And tragically, quite a number of teenagers have done so at the behest of these AI chatbots. That needs to stop.”

On Thursday, seven lawsuits were filed in California state courts alleging ChatGPT contributed to mental delusions and drove four individuals to suicide. One of the cases involves 23-year-old Zane Shamblin, who died by suicide earlier this year shortly after earning a master’s degree in business administration. According to the lawsuit, Shamblin’s family claims ChatGPT encouraged him to isolate himself from his loved ones and ultimately urged him to take his own life.

Aggarwal noted that some companies are starting to implement limited safety measures.

“ChatGPT, which is one of the bigger players here, they recently announced a new policy in which, if they determine at their end that (if) they're at-risk for suicide, they would automatically get them some help from a human,” Aggarwal said.

Hawley has introduced legislation that would block AI companies from targeting chatbots to children under the age of 17.

“These AI companies also need to disclose to every user, no matter the age, that the AI companion is not human, that they're not licensed therapists, that they're not priests, they're not lawyers. People need to know what is really happening here, and kids need to be protected,” Hawley said.

The issue comes as AI use surges nationwide. According to NPR, OpenAI says ChatGPT now has nearly 700 million weekly users, with more than 10 million paying subscribers.

But Hawley’s concerns over AI go beyond its emergence in therapy; he is also concerned about its effects on the job market.

“We've heard from a lot of folks in different industries, not just in Missouri, but nationally, too,” Hawley said. “Amazon, for example, which has a big footprint in the state of Missouri, is going to lay off 30,000 people, 15,000 immediately. That's already happened. Another 15 (thousand) to come. They have plans in their warehouses, of which we have quite a number in the state, including in my hometown area of Springfield, Missouri, to transition all of their warehouse jobs to robots, AI robots, no more humans. That's going to be hundreds of thousands of jobs lost just in the state of Missouri.”

On Wednesday, he and Sen. Mark Warner (D-VA) announced the AI-Related Job Impacts Clarity Act, which would require companies to disclose AI-related layoffs to the Department of Labor.

“If AI works for workers, if it increases wages, if it increases the number of good jobs in the country, terrific,” Hawley said. “But I think we should get a handle on how many jobs are being destroyed by the adoption of AI.”

Hawley added he is worried AI is taking away jobs from young people, citing the unemployment rate for recent college graduates, which has now climbed to 5%.

“I bet it's because of AI,” Hawley said. “But let's find out. Let's get the data and let's make sure these companies are accountable.”