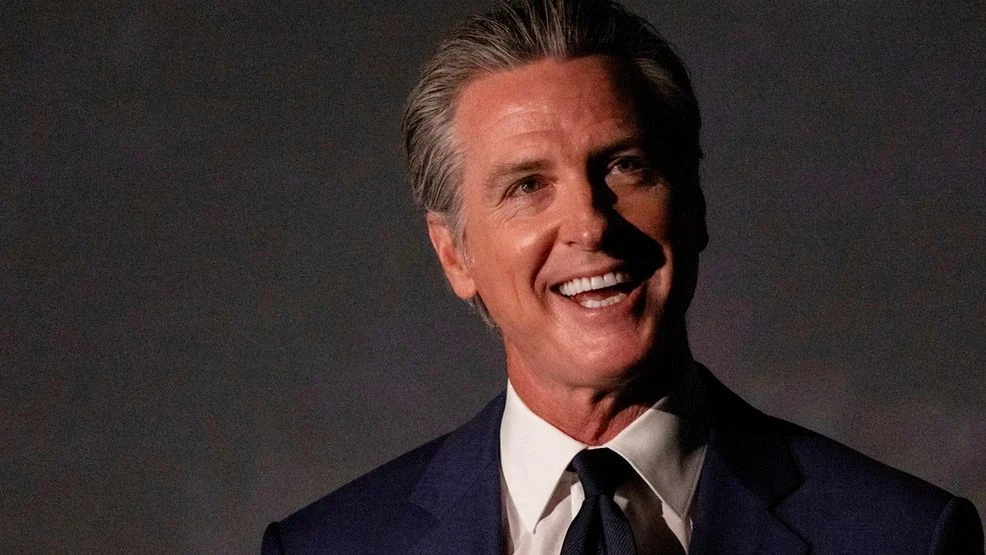

California Gov. Gavin Newsom signed a first-in-the-nation artificial intelligence safety law on Monday mandating some companies publicly release their safety protocols, laying the groundwork for a potential national standard as Congress struggles to pass its own set of guardrails for the rapidly developing technology.

The Transparency in Frontier Artificial Intelligence Act requires major AI companies to publicly report the safety procedures used to build their technologies and to state the greatest risks posed by their products. It also strengthens whistleblower protections for employees who speak out about the potential dangers the technology poses to the public.

The bill is likely to have nationwide consequences, as California is home to most of the largest AI companies in the world and has long been a hotspot for tech innovation. Dozens of states have already passed around 100 regulations regarding AI this year with more likely to come unless Congress steps in.

“AI is the new frontier in innovation, and California is not only here for it – but stands strong as a national leader by enacting the first-in-the-nation frontier AI safety legislation that builds public trust as this emerging technology rapidly evolves,” Newsom said in a statement.

The new law applies to companies creating the most advanced AI products and with annual revenues of at least $500 million. It will require those companies to publicly disclose how they have incorporated safety practices in line with national and international standards — the first such standard in the world.

They will also be required to report to the state any safety incidents involving crimes committed without human oversight, such as cyberattacks.

States have increasingly opted to take matters into their own hands when it comes to regulating technology. Dozens of laws have been passed around the country on numerous digital issues from social media to AI, and California’s first-of-its-kind disclosure law is likely to fuel tensions between the industry and states trying to create guardrails.

Some industry groups like the Chamber of Progress and Consumer Technology Association opposed the bill and encouraged Newsom to veto it, arguing it would stifle innovation and encourage more states to take matters into their own hands instead of getting a national framework passed.

“CTA is disappointed to see California rush into AI legislation. SB 53 is not the right approach. The U.S. needs a national framework to lead in AI, not a state patchwork,” the group said in a post on X.

Several major companies like Meta, OpenAI and Google have lobbied against state legislation that varies across the country but gave mixed responses to California’s new law. AI developer Anthropic backed the measure and said it could lead to more consistent standards nationwide.

“While we believe that frontier AI safety is best addressed at the federal level instead of a patchwork of state regulations, powerful AI advancements won’t wait for consensus in Washington. SB53 should serve as a powerful example for other states and Congress,” Jack Clark, a co-founder of the company, wrote in a post on X.

AI companies have increasingly lobbied against regulation at the federal and state level after initially working with lawmakers to create guardrails to combat the potential harms the technology poses to the public.

Congress has not yet built consensus on how it should handle AI as it balances allowing the industry to continue innovating and building its dominance in the global marketplace while dealing with concerns such as data privacy. Republicans in Congress considered putting a 10-year moratorium on state AI regulation in the tax and spending megabill this summer but ultimately removed it.

Sen. Ted Cruz, R-Texas, led the push to put the AI moratorium in place and has been an advocate for preventing a patchwork of laws from going on the books.

“There is no way for AI to develop reasonably, and for us to win the race to beat China, if we end up with 50 contradictory standards in 50 states — and not just 50 states because cities and municipalities will do this too,” Cruz said at an AI summit earlier this month.

Regulators and companies are also under pressure from parents and advocacy groups to step up safety guardrails for underage users after a series of troubling incidents. Advocates for AI regulation have argued it is necessary to safeguard the public from the tech’s potential harms, pointing to social media as an example of a missed opportunity that has led to issues that have gone unchecked at a federal level to this day.

AI companies have started to take steps on their own with new safety protocols, parental controls and disclosures, but many questions linger about whether those steps will be enough.

The White House has been outspoken in its opposition to allowing states to continue creating a patchwork of laws for companies to operate under. President Donald Trump signed a series of executive orders earlier this year on AI and has made nurturing its advancement and America’s place as a leader in its development a priority.

“A patchwork of state regulations is anti-innovation. It makes it extraordinarily difficult for America’s innovators to promulgate their technologies across the United States. It actually presents and gives more power to large technology companies that have armies of lawyers that are able to sort of meet the various state-level regulations,” Michael Kratsios, the director of the White House Office of Science and Technology Policy, told lawmakers during a hearing earlier this month.