Copyright XDA Developers

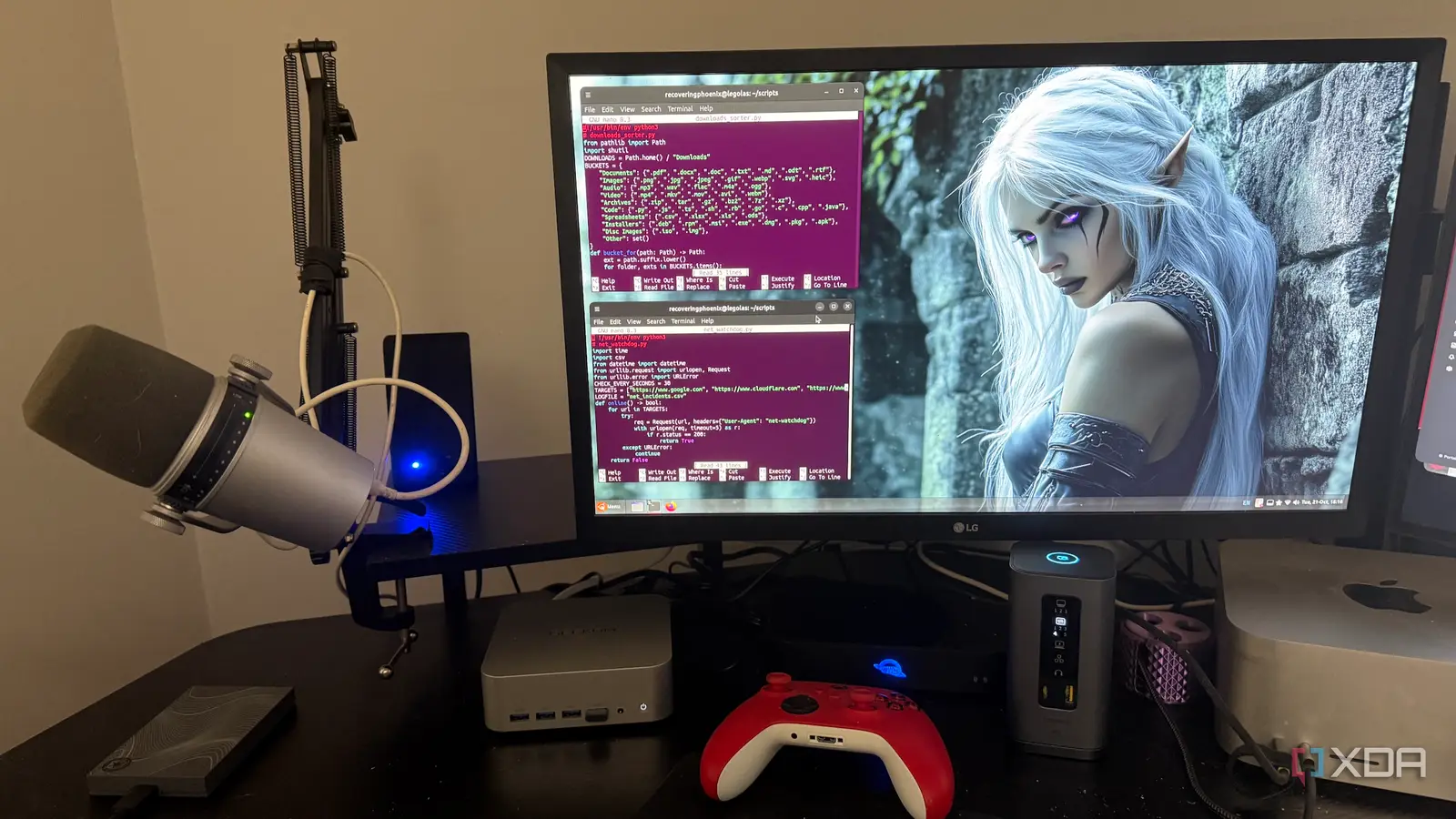

Automating mundane tasks keeps your attention on the work that matters. Small Python scripts can remove daily friction in a way big apps rarely do. Each of these is simple enough to save as a single file and run from a keyboard shortcut or a cron job. They target chores that interrupt focus, like messy downloads, throwaway notes, and noisy tracking links.

All but one rely only on the Python standard library, so there’s nothing extra to install for most of them. You can paste the scripts into a file, make them executable, and enjoy a smoother day.

## Tidy up your chaotic downloads folder

A tiny sorter that files by type automatically

A cluttered Downloads folder slows you down whenever you need to find a file. This script sorts common file types into subfolders, keeping your working directory organized and readable. It uses only the standard library, so it runs on most systems without additional packages. Point it at any folder and run it on a schedule to keep things neat.

It categorizes by extension, which is usually enough for documents, archives, media, and installers. You can expand the mapping with formats you use daily, like STL for prints or ISO images for labs. Files are moved, not copied, so you avoid duplicates that waste storage space. I like to keep a catch-all folder for unknown types, so nothing gets lost.

If you share the same machine with multiple users, consider setting a per-user destination. That keeps everyone’s files out of each other’s way and avoids permission errors. You can also add a size threshold to leave very large files alone until you review them. The goal is to maintain sensible defaults while remaining flexible.

This version skips files that are open or locked by catching move errors and continuing. It also creates any missing subfolders on the fly. It runs reliably under Windows, Linux, and macOS.

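
The size threshold mentioned above could be sketched roughly as follows. The `small_enough` helper and the 2 GiB `MAX_BYTES` limit are hypothetical names and values chosen for illustration; they are not part of the original script.

```python
from pathlib import Path

# Hypothetical limit (an assumption, not from the article): skip files above 2 GiB.
MAX_BYTES = 2 * 1024**3


def small_enough(path: Path, max_bytes: int = MAX_BYTES) -> bool:
    """Return True if the file exists and is at or below the size limit."""
    try:
        return path.stat().st_size <= max_bytes
    except OSError:
        # The file vanished or cannot be read; treat it as not movable.
        return False
```

One way to use it would be to extend the sorter's file check to something like `if item.is_file() and small_enough(item):`, so oversized files stay in place until you review them.
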
You can run it hourly with cron on Linux or Task Scheduler on Windows for a set-and-forget experience. Here is the script.

```python
#!/usr/bin/env python3
# downloads_sorter.py
from pathlib import Path
import shutil

DOWNLOADS = Path.home() / "Downloads"

# Map each destination subfolder to the extensions it collects.
BUCKETS = {
    "Documents": {".pdf", ".docx", ".doc", ".txt", ".md", ".odt", ".rtf"},
    "Images": {".png", ".jpg", ".jpeg", ".gif", ".webp", ".svg", ".heic"},
    "Audio": {".mp3", ".wav", ".flac", ".m4a", ".ogg"},
    "Video": {".mp4", ".mkv", ".mov", ".avi", ".webm"},
    "Archives": {".zip", ".tar", ".gz", ".bz2", ".7z", ".xz"},
    "Code": {".py", ".js", ".ts", ".sh", ".rb", ".go", ".c", ".cpp", ".java"},
    "Spreadsheets": {".csv", ".xlsx", ".xls", ".ods"},
    "Installers": {".deb", ".rpm", ".msi", ".exe", ".dmg", ".pkg", ".apk"},
    "Other": set(),  # catch-all for unknown types
}


def bucket_for(path: Path) -> Path:
    """Return the destination folder for a file, based on its extension."""
    ext = path.suffix.lower()
    for folder, exts in BUCKETS.items():
        if ext in exts:
            return DOWNLOADS / folder
    return DOWNLOADS / "Other"


def main():
    # Snapshot the listing first so moving files does not disturb the iteration.
    for item in list(DOWNLOADS.iterdir()):
        if item.is_file():
            target_dir = bucket_for(item)
            target_dir.mkdir(exist_ok=True)
            try:
                shutil.move(str(item), str(target_dir / item.name))
            except OSError:
                # Skip files that cannot be moved (open, locked, or no permission)
                continue


if __name__ == "__main__":
    main()
```

## Capture quick notes into daily markdown

Great ideas appear when you are in the middle of something else. Instead of opening a full editor, this script appends a line to a daily markdown file with a timestamp. It keeps everything in a simple folder that you can sync or index with your favorite notes app. You get one file per day, which makes search and archiving painless.

I like pairing this with Obsidian or a similar markdown tool because it picks up the files instantly. The script creates the note file if it does not exist and keeps appending new lines to it. Each line starts with the time, followed by whatever you pass as an argument. That makes it easy to filter later by content or timeframe.

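
As an illustration of filtering by content or timeframe, here is a minimal sketch that assumes every note line has the `- HH:MM text` shape described above. The `filter_notes` function and its parameters are hypothetical, and the sample lines in the usage note are made up.

```python
import re
from typing import Iterable, Iterator, Optional, Tuple

# Matches lines shaped like "- HH:MM text".
NOTE_LINE = re.compile(r"^- (\d{2}:\d{2}) (.*)$")


def filter_notes(
    lines: Iterable[str],
    keyword: Optional[str] = None,
    start: str = "00:00",
    end: str = "23:59",
) -> Iterator[Tuple[str, str]]:
    """Yield (time, text) pairs inside the time window that contain the keyword."""
    for line in lines:
        match = NOTE_LINE.match(line.rstrip("\n"))
        if not match:
            continue  # not a note line, e.g. a heading or blank line
        stamp, text = match.groups()
        # Zero-padded HH:MM strings sort correctly as plain text.
        if not (start <= stamp <= end):
            continue
        if keyword and keyword.lower() not in text.lower():
            continue
        yield stamp, text
```

For example, `list(filter_notes(["- 09:15 call the ISP\n", "- 14:02 sort STL files\n"], keyword="isp"))` would keep only the 09:15 entry. To search a real file, pass an open file object, since iterating over it yields lines.
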
You can wire this to a global hotkey that prompts for text and then calls the script. On Linux, a tiny zenity or rofi prompt works well and stays out of the way. On macOS, you can use a simple AppleScript dialog that hands the text to Python. On Windows, a PowerShell prompt does the same job without ceremony.

For richer entries, add headers or sections for tasks and links. You can also roll up yesterday’s unfinished tasks automatically, though that is beyond the tiny scope here. Keeping it small is the value, because it stays fast and dependable. Here is the script.

```python
#!/usr/bin/env python3
# quick_note.py
from datetime import datetime
from pathlib import Path
import sys

NOTES_DIR = Path.home() / "Notes" / "Daily"
NOTES_DIR.mkdir(parents=True, exist_ok=True)


def main():
    # Require at least one argument containing the note text.
    if len(sys.argv) < 2:
        print("Usage: quick_note.py \"your note text\"")
        sys.exit(1)
    text = " ".join(sys.argv[1:])
    # Read the clock once so the file name and timestamp always agree.
    now = datetime.now()
    today = now.strftime("%Y-%m-%d")
    timestamp = now.strftime("%H:%M")
    path = NOTES_DIR / f"{today}.md"
    # Append mode creates the file on first use.
    with path.open("a", encoding="utf-8") as f:
        f.write(f"- {timestamp} {text}\n")
    print(f"Appended to {path}")


if __name__ == "__main__":
    main()
```

## Strip UTM and ref parameters, then copy back

Tracking parameters make URLs noisy and harder to share. This script reads your clipboard, removes common trackers such as utm_source and fbclid, and then writes the clean link back. It uses the standard library for URL parsing and a lightweight clipboard helper. Cleaning links becomes a single keystroke, rather than a manual edit.

You will need the pyperclip package for cross-platform clipboard access. It is tiny and works on Linux, macOS, and Windows with minimal fuss. The script only modifies text that appears to be a URL, which prevents it from mangling other content. If the clipboard holds anything else, it leaves it alone and exits quietly. This is particularly useful when copying links from social apps or search results. It also helps keep your notes tidy for long-term reference.

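
To try the parameter stripping on its own, without touching the clipboard, a standard-library-only sketch might look like the one below. It is a variation rather than the article's script: the `strip_trackers` name, the rule that drops every `utm_`-prefixed key, and the example URL are assumptions made for illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Illustrative extra keys (an assumption); any key starting with "utm_" is also dropped.
EXTRA_TRACKERS = {"fbclid", "gclid"}


def strip_trackers(url: str) -> str:
    """Return the URL without tracking parameters; non-URLs pass through unchanged."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        return url
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith("utm_") and key not in EXTRA_TRACKERS
    ]
    # Rebuild the URL with only the surviving query pairs.
    return urlunsplit(parts._replace(query=urlencode(kept)))


print(strip_trackers("https://example.com/post?id=42&utm_source=news&fbclid=abc#top"))
# prints https://example.com/post?id=42#top
```

Matching on the `utm_` prefix catches less common members of that family, at the cost of also removing any legitimate parameter that happens to share the prefix.
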
I like to run it after pasting a link into a draft to keep the final text clean. You can also add a whitelist if you want to keep specific parameters for affiliate work. For a more thorough cleanup, consider adding domain-specific rules for popular websites. Some services pack redirection into the path, and you can decode those for a cleaner result. Start small and expand as you encounter edge cases in daily use. Here is the script.

```python
#!/usr/bin/env python3
# clean_clipboard_url.py
# pip install pyperclip
import pyperclip
from urllib.parse import urlparse, parse_qsl, urlencode, urlunparse

# Query parameters that only exist for tracking.
TRACKING_KEYS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
    "gclid", "fbclid", "mc_eid", "mc_cid", "igshid", "si"
}


def clean_url(url: str) -> str:
    """Return the URL with tracking parameters removed."""
    parts = urlparse(url)
    # Leave anything that does not parse as an absolute URL untouched.
    if not parts.scheme or not parts.netloc:
        return url
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
             if k not in TRACKING_KEYS]
    new_query = urlencode(query, doseq=True)
    return urlunparse((parts.scheme, parts.netloc, parts.path,
                       parts.params, new_query, parts.fragment))


def main():
    text = pyperclip.paste().strip()
    # Only act on clipboard content that looks like a web link.
    if not text.startswith(("http://", "https://")):
        return
    cleaned = clean_url(text)
    pyperclip.copy(cleaned)


if __name__ == "__main__":
    main()
```

## Log internet hiccups to a CSV file

A lightweight watchdog for connection reliability checks

Intermittent outages are hard to prove without data. This script checks a few reliable hosts and logs each outage, with timestamps, to a CSV file. Over a few days, you will know if the issue is your provider, your router, or something on your LAN. It provides just enough detail to share with support or to trigger your own alerts.

The approach is simple. It attempts to connect to several hosts, so a single site outage does not trigger false alarms. It records consecutive failures as a single incident with start and end times. That keeps the log compact and easier to graph later in your favorite tool.

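
The idea of folding consecutive failures into one incident can be shown in isolation. This is a minimal sketch with a hypothetical `collapse_incidents` helper and made-up sample timestamps; it takes a sequence of (timestamp, online) checks and yields one (start, end) pair per outage.

```python
from typing import Iterable, Iterator, Optional, Tuple


def collapse_incidents(
    samples: Iterable[Tuple[str, bool]],
) -> Iterator[Tuple[str, str]]:
    """Turn a stream of (timestamp, online) checks into (start, end) incidents."""
    start: Optional[str] = None
    for stamp, ok in samples:
        if not ok and start is None:
            start = stamp  # first failed check opens an incident
        elif ok and start is not None:
            yield start, stamp  # first good check closes it
            start = None


checks = [
    ("10:00:00", True),
    ("10:00:30", False),
    ("10:01:00", False),
    ("10:01:30", True),
]
print(list(collapse_incidents(checks)))
# prints [('10:00:30', '10:01:30')]
```

Note that an outage still in progress when the input ends is not reported in this sketch, because an incident is only emitted once a successful check closes it.
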
Run it in the background with a short interval, such as thirty seconds. If you prefer alerts, add a desktop notification when an incident starts and ends. You can also add an action to bounce your DNS service or Wi-Fi interface after a failure threshold is reached. Start with logging and adjust once you see patterns.

The script keeps dependencies to a minimum by using HTTP requests instead of ICMP. Most networks allow them, and they need no extra permissions. You can switch to ICMP with pythonping if that better suits your environment. Here is the script.

```python
#!/usr/bin/env python3
# net_watchdog.py
import time
import csv
from datetime import datetime
from urllib.request import urlopen, Request

CHECK_EVERY_SECONDS = 30
TARGETS = ["https://www.google.com", "https://www.cloudflare.com", "https://www.wikipedia.org"]
LOGFILE = "net_incidents.csv"


def online() -> bool:
    """Return True as soon as any target answers with HTTP 200."""
    for url in TARGETS:
        try:
            req = Request(url, headers={"User-Agent": "net-watchdog"})
            with urlopen(req, timeout=5) as r:
                if r.status == 200:
                    return True
        except OSError:
            # URLError, timeouts, and connection resets are all OSError subclasses
            continue
    return False


def main():
    incident_start = None
    with open(LOGFILE, "a", newline="") as f:
        writer = csv.writer(f)
        while True:
            ok = online()
            now = datetime.now().isoformat(timespec="seconds")
            if not ok and incident_start is None:
                # First failed check opens an incident.
                incident_start = now
            elif ok and incident_start is not None:
                # Connection is back: log one row with start and end times.
                writer.writerow([incident_start, now])
                f.flush()
                incident_start = None
            time.sleep(CHECK_EVERY_SECONDS)


if __name__ == "__main__":
    main()
```

## Python scripts that provide small, steady wins