By Paulo Carvão, Contributor

Copyright Forbes

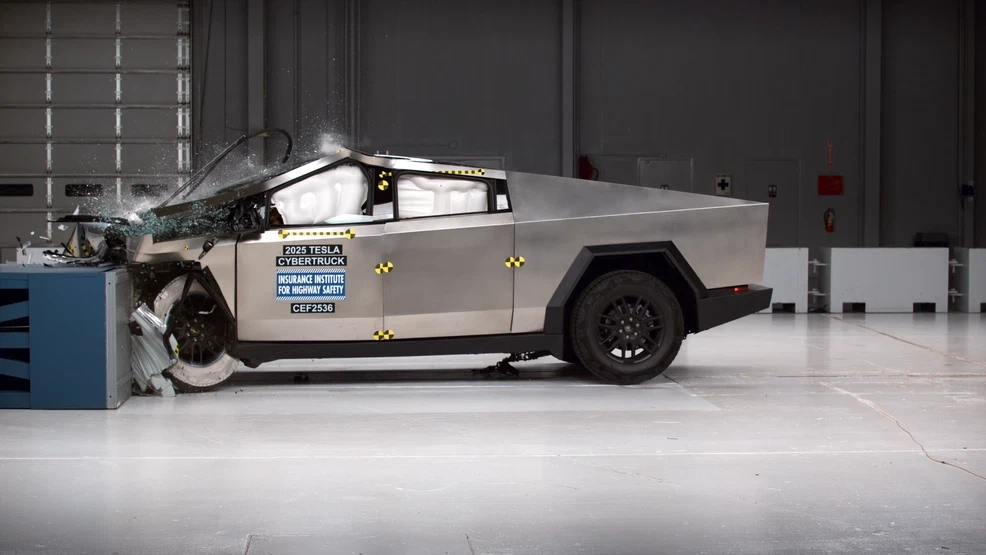

Maria Ressa, 2021 Nobel Peace Prize: Protect facts, set binding AI red lines, and demand accountability from tech power to safeguard democracy and human rights. United Nations General Assembly meeting, 80th anniversary, New York City, on September 22, 2025. (Photo by TIMOTHY A. CLARY / AFP via Getty Images)

This is not a new discussion. In March 2023, a few months after ChatGPT’s launch, a group of researchers issued an open letter calling for a pause in AI development. Two years later, the question remains: accelerate or pause? Today’s two announcements, NVIDIA’s investment in OpenAI and the release of a new petition demanding binding international action against dangerous AI uses, exemplify the dilemma.

Accelerate AI

NVIDIA and OpenAI are joining forces on what, according to NVIDIA founder and CEO Jensen Huang, is “the biggest AI infrastructure project in history.” The two companies announced plans for OpenAI to build at least 10 gigawatts of NVIDIA-powered computing systems, an expansion on par with constructing several of the world’s largest data centers. NVIDIA is committing up to $100 billion to the effort, with the first facilities expected to begin operating in the second half of 2026. Sam Altman, CEO of OpenAI, stated that “building this infrastructure is critical to everything we want to do, this is the fuel that we need to drive improvement, drive better models, drive revenue, drive everything,” a statement emblematic of the industry’s accelerationist bias.

The partnership aims to ease access to the massive computing power needed to train advanced models, one of the biggest constraints in AI today. By substantially expanding its hardware base, OpenAI hopes to push forward capabilities like advanced reasoning, multimodal processing that blends text and images, and systems capable of sustaining deeper interactions and handling extensive documents. Both companies argue that this will lower the cost of delivering intelligence while accelerating the transition from experimental research to broad commercial use.

But at what cost?

A Different Set Of Priorities For AI

At the opening of the U.N. General Assembly, Nobel laureate Maria Ressa stood before world leaders with a petition signed by more than 200 prominent figures, including former heads of state, scientists and technologists, urging the creation of binding global “red lines” for artificial intelligence. Backed by over 70 organizations, the initiative calls for an enforceable international agreement by 2026 to ban certain high-risk applications of AI, from deepfake impersonations and self-replicating systems to mass surveillance and autonomous weapons.


Their message is clear: without global rules, AI’s most dangerous uses could spiral beyond any single nation’s control. The letter highlights that “AI could soon far surpass human capabilities and escalate risks such as engineered pandemics, widespread disinformation, large-scale manipulation of individuals including children, national and international security concerns, mass unemployment, and systematic human rights violations.”

“For thousands of years, humans have learned—sometimes the hard way—that powerful technologies can have dangerous as well as beneficial consequences. With AI, we may not get a chance to learn from our mistakes, because AI is the first technology that can make decisions by itself, invent new ideas by itself, and escape our control. Humans must agree on clear red lines for AI before the technology reshapes society beyond our understanding and destroys the foundations of our humanity,” said Yuval Noah Harari, historian, philosopher and author of the 2014 bestseller ‘Sapiens: A Brief History of Humankind’. Stuart Russell, another signatory of the red lines letter and distinguished professor of computer science at the University of California, Berkeley, captured the concerns animating the initiative: “The development of highly capable AI could be the most significant event in human history. It is imperative that world powers act decisively to ensure it is not the last.”

Two Views Of AI At Odds

The industry announcement reflects AI’s rapid acceleration and the race to push its capabilities further; the call at the United Nations highlights the parallel urgency to define guardrails before those capabilities run unchecked. Together, the two capture the dual reality of AI in 2025: staggering investment and innovation on one side, and mounting global pressure for rules and restraint on the other.

The temptation is to view these announcements as opposite poles: industry bent on acceleration and policymakers calling for restraint. But framing the choice as a binary, pause or accelerate, misses the real challenge. As I wrote in March 2023 in ‘Do Not Pause AI, Accelerate Ethics’, it is a false dichotomy. The world does not face a choice between unleashing AI’s potential and safeguarding humanity from its risks. We must do both in tandem.

Incentives Are The Answer For A Safe AI Future

The key lies in incentives. Industry will continue to race ahead if the rewards for speed outweigh the costs of failure. Policy, market structures, and public trust mechanisms must realign those incentives so that companies compete not only on the scale of their models but also on their safety, transparency, and accountability. If directed wisely, the same competitive forces that drive innovation can just as powerfully drive responsibility.

AI has the potential to expand productivity, accelerate discovery, and solve problems once thought intractable. But it also threatens to spread disinformation, deepen inequality, and destabilize societies. The choice is not between acceleration and pause. It is whether we can align incentives so that speed and safety move together, ensuring AI’s benefits outweigh its risks.
