California Governor Gavin Newsom signed the Transparency in Frontier Artificial Intelligence Act, also known as SB 53, into law Monday afternoon.

The law is the first of its kind in the United States. It places new AI-specific regulations on the industry's leading companies, requiring them to meet transparency requirements and report AI-related safety incidents.

Though several states have recently passed laws regulating aspects of AI, SB 53 is the first to explicitly focus on the safety of cutting-edge and powerful AI models.

In a statement, Newsom said that “California has proven that we can establish regulations to protect our communities while also ensuring that the growing AI industry continues to thrive. This legislation strikes that balance.”

The law requires leading AI companies to publish documents detailing how they follow best practices to build safe AI systems. It also creates a new pathway for companies to report severe AI-related incidents to California's Office of Emergency Services and strengthens protections for whistleblowers who raise concerns about health and safety risks.

The law is backed by civil penalties for non-compliance, to be enforced by the California Attorney General’s office.

SB 53 drew intense criticism from industry groups such as the Chamber of Progress and the Consumer Technology Association, but it also found support among AI developers: Anthropic endorsed the bill, and Meta called it a "step in the right direction."

Even while supporting the bill, these companies made clear that they would prefer federal legislation to a patchwork of state-by-state laws.

In a LinkedIn statement several weeks ago, OpenAI’s chief global affairs officer Chris Lehane wrote, “America leads best with clear, nationwide rules, not a patchwork of state or local regulations. Fragmented state‑by‑state approaches create friction, duplication, and missed opportunities.”

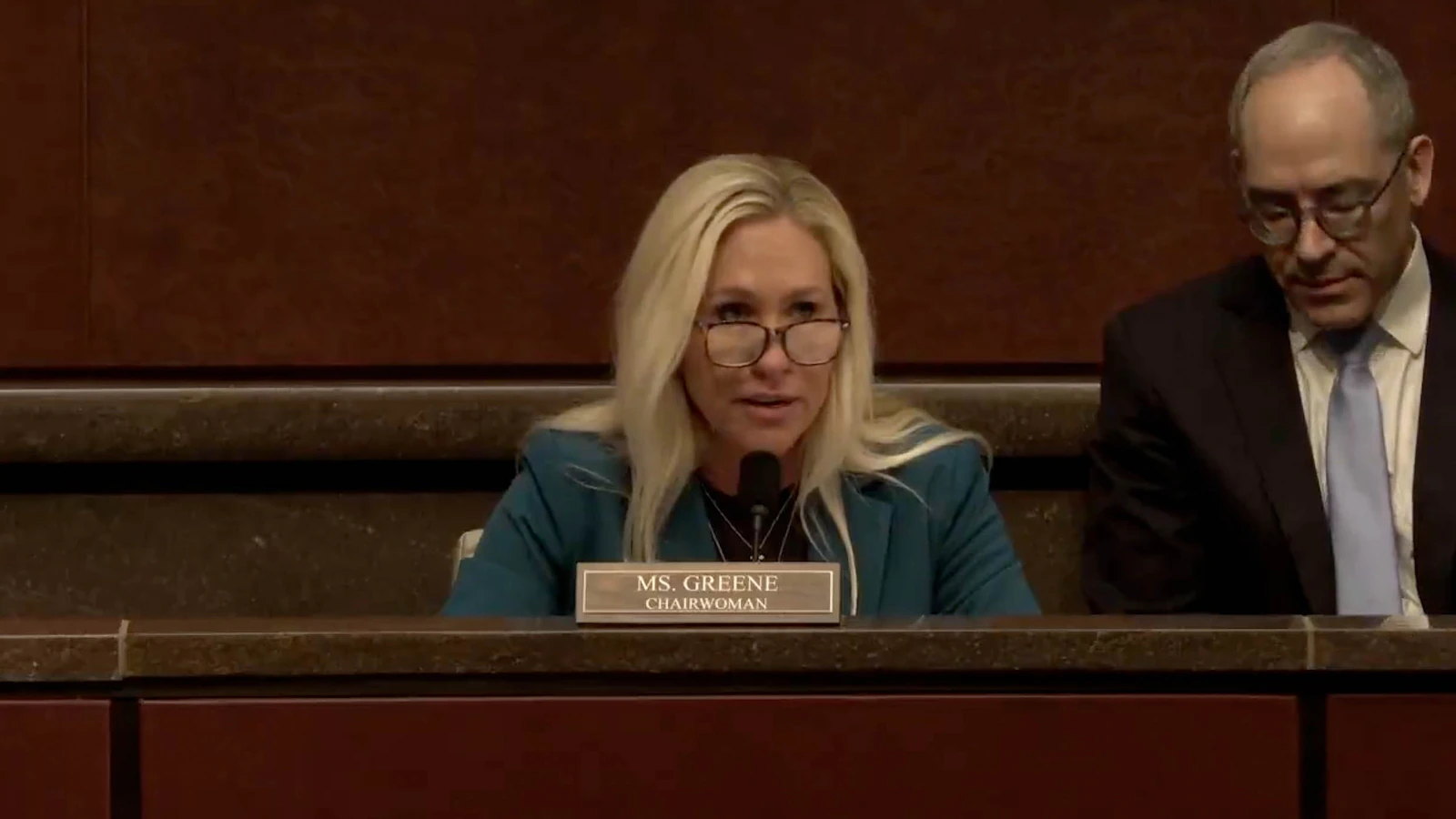

Monday morning, U.S. Senators Josh Hawley and Richard Blumenthal proposed a new federal bill that would require leading AI developers to “evaluate advanced AI systems and collect data on the likelihood of adverse AI incidents.”

As currently written, the federal bill would create an Advanced Artificial Intelligence Evaluation Program within the Department of Energy. Participation in the program would be mandatory, much like SB 53's transparency and reporting requirements.

The passage of SB 53 and the introduction of the federal bill from Sen. Hawley and Sen. Blumenthal come as world leaders increasingly call for AI regulation in the face of growing risks from advanced AI systems.

In remarks to the United Nations General Assembly last week, President Donald Trump said, AI “could be one of the great things ever, but it also can be dangerous, but it can be put to tremendous use and tremendous good.”

Addressing the U.N. one day after President Trump, Ukrainian President Volodymyr Zelensky said, "We are now living through the most destructive arms race in human history because this time, it includes artificial intelligence."