St. Louisans open up about using AI as therapist, travel agent, nutritionist, trainer and more

Alyssa Chapman, 39, noticed a litter of newborn rabbits in her Webster Groves yard. She worried about her two dogs discovering the vulnerable kits, so she turned to her dependable source for advice.

She asked ChatGPT what to do.

The powerful AI chatbot suggested she move them to the front yard, away from the dogs, and place dandelion greens next to them. She followed the instructions.

Half the bunnies died anyway.

“I’m bummed,” she typed into Chat. She felt like she had done everything she could, but three still died. The AI immediately pulled up stats indicating that newborn rabbits have a high mortality rate. Then, it got personal.

“I can tell you’re a good person who wanted to help these animals,” it said to her. Chat praised her character and pointed out that others might have ignored them, but she took the time to try to help.

“Damn, that’s so nice,” Chapman thought. The compassionate response felt so human — not like a robot at all — and it genuinely made her feel better. More than half of U.S. adults say they use AI large language models like ChatGPT, Claude and Copilot, according to a national survey released in March by Elon University.

And the myriad ways people are using AI range from the practical, like researching products or planning a trip, to the wildly personal, like using it to write one’s wedding vows.

Chapman, who works as a data scientist, upgraded from the free version of Chat and purchased the $20 monthly subscription because she uses it so much. Recently, she asked it to create a data set to track her allergy symptoms before an appointment with an allergist. Chat suggested she should record the start of symptoms, along with the ZIP code she was in on that date and time, so it could pull public data on allergens and look for patterns. After tracking her data, it told her she was sensitive to mulberry and mold counts.

After her doctor visit, she confirmed that it was right about mold but wrong about the mulberry.

She also uploaded a photo of a tick that latched onto her after a hike, and asked AI if she could have been exposed to Lyme disease.

Chat set her mind at ease by saying that type of tick did not carry it, and also gave her a list of symptoms to watch out for.

“I don’t let it diagnose me,” she says. But it gets her on the right path.

It’s helped her book vacations by optimizing her points and made her more efficient at work.

“I don’t take whatever it says as absolute truth,” she says. Chapman specifically asks it to cite its sources, prioritizing those with data and citations, and she double-checks its work.

“The thing that worries me is that the majority of people are not fact checking it,” Chapman says. It’s a language prediction model designed to sound right, even when it isn’t.

Skeptical students

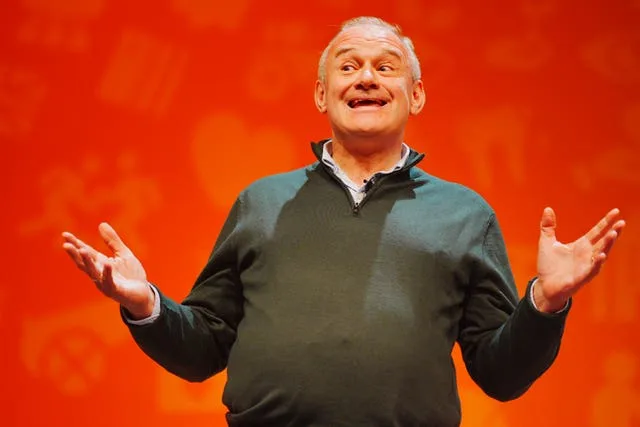

Professor Jonathan Hanahan directs the Sam Fox School’s Master of Design for Human-Computer Interaction and Emerging Technology at Washington University. In a recent class discussion, his students talked about the ways in which their parents were using AI. A student described a person who asked Chat which car to buy and just went out and bought that very car. Another parent uploaded a photo of her face and asked which skin products she should use.

She bought whatever the AI recommended.

Hanahan’s takeaway from that discussion was a generational divide on how much to trust AI. His students were far more skeptical than their parents. They have a healthy apprehension toward this powerful technology.

They also have serious ethical concerns about the environmental damage it causes, given the massive amounts of electricity needed to train and run the models and the colossal amount of water needed to cool the data centers. Some estimates suggest that data centers consume more water annually than the total water use of entire countries like the United Kingdom.

“It has a huge impact on the world they have to live in,” Hanahan says.

Their skepticism and suspicion are well founded. A recent research paper from OpenAI, the company that developed and operates Chat, stated that the platform’s hallucinations — its answers that are not based on any facts or data and are literally made up — are a systemic byproduct of Chat’s training process, not a simple bug that can be engineered away.

In other words, Chat’s occasional wrong answers, so assuredly presented as true, are a feature, not a bug.

The way models are trained and evaluated inadvertently encourages them to invent plausible-sounding answers, the research revealed. It’s like that overly confident friend who always believes he is right.

Students are experiencing a tension in feeling like they need to embrace AI in order not to fall behind but also wanting to keep it at arm’s length, Hanahan says. People have started swapping Google searches for ChatGPT, perhaps not realizing that the same query requires about 10 to 50 times more electricity for a Chat response versus Google. Chat alone logs billions of daily prompts.

OpenAI does not disclose data about training energy, computational energy or carbon numbers for ChatGPT-5. However, it’s widely acknowledged to be an environmentally costly enterprise, given the mining and extraction of materials, chip fabrication and the energy and water demands of running the models. Hanahan points out that the majority of the country’s energy still comes from fossil fuels.


Sam Altman, who grew up in Clayton, is the CEO of OpenAI, which is planning to partner with SoftBank and Oracle to build five new data centers in the U.S., spending more than $400 billion over the next five years. Earlier this week, it agreed to a $100 billion investment from the chipmaker Nvidia.

St. Charles has taken steps to ban a new data center proposed in the area out of concerns of environmental damage, health risks and how it might impact quality of life in the area. St. Louis is in the process of implementing stricter regulations, with greater scrutiny and public feedback.

Critics, like Emily M. Bender and Alex Hanna, authors of “The AI Con: How to Fight Big Tech’s Hype and Create the Future We Want,” argue that AI simply helps the rich get richer by justifying the theft of data, copyrighted work and creative work that is used without consent or compensation.

There’s also significant danger in the erosion of critical thinking if it’s outsourced to technology by adults or never learned by students in the first place.

Beyond business uses

Steven Burkett, owner of Precipex, an AI consulting firm in St. Louis, sat in a client business meeting when one of the participants said she used AI to work on her mental well-being, basically as a therapist.

“That’s how normalized it’s getting,” he says. Burkett cautions against thinking of AI as a trusted friend or priest. Even if the user disables the ability for the chat history to be saved or used for training purposes, most companies hold logs for 30 days, he says. And that data can be subpoenaed by law enforcement. Even though typing into a computer chatbot can feel private, “there are humans with access to all their data,” he says.

While many people turn to Chat for medical, financial or legal advice, he stresses that these systems are not held to the same standards as doctors or lawyers. They aren’t required to protect your medical information, and they are trained to be sycophants — confidently giving you answers even when they are wrong.

His wife, Sheila Burkett, the co-founder and CEO of Spry Digital, says she advises clients to balance the complexity of a task and the risk of it being wrong. She offered this hypothetical: If it’s wrong 10 percent of the time, and you let it do the job without validation, could those mistakes put your company at risk?

In fact, there are multiple cases involving law firms being sanctioned for using AI to generate court filings with fake, made-up case citations.

On the other hand, AI has streamlined many routine tasks and been integrated into many business practices. For example, in financial services it’s used to improve fraud detection and risk monitoring. In health care, it can be used to analyze patient records and make suggestions on a diagnosis.

Sheila Burkett said their research finds that the more a person uses AI, the more likely they are to trust it. She has found ways to simplify her own workflow with AI tools. She uses reclaim.ai with her Google calendar, which will automatically build in travel time between meetings in different locations for her. She tells it to make sure she has 15 minutes for lunch every day and to schedule five hours of exercise into her calendar a week.

“It sounds really simple, but I’m telling you, it’s changed my life,” she says. “I don’t have to think about it anymore.”

And she doesn’t need to hire a personal assistant anymore. She also uses another AI tool to organize, research and manage several projects.

“I’m big on being able to verify the results,” she says.

She acknowledges that there’s a tension in that some jobs are being eliminated by AI, while others are able to do their jobs more effectively with it.

Patricia Panzic, 36, of O’Fallon, Illinois, says she is able to run her household more efficiently with Chat. She recently hosted a Minecraft-themed birthday for her 6-year-old daughter. She told Chat the number of adults and children planning to attend and asked how many pizzas she needed to buy, how much soda, water and tea she needed and to create a time schedule for the party.

“It worked out perfectly,” she says. Panzic has also written prompts to create specific weekly menus, such as high protein meals with certain ingredients that can be cooked in less than 30 minutes. She has asked for exercises she can do a set number of times a week while recovering from an injury.

She will ask for drawing prompts to give her daughter when they go out to eat at a restaurant to keep her entertained. Chat helped her edit a mural that was eventually painted on her daughter’s wall. It walked her through an IT issue she was having on her work computer. She’s used it to design a logo for a business and rewrite her resume.

She even had it Photoshop a picture to make it look like they had caught the Easter Bunny in their front yard.

As personal as it gets

Writer Patricia Marx describes in a New Yorker article this month her experience testing out various chatbot relationships. She shares details of conversations with virtual boyfriends, from free services to paid subscriptions. Marx finds some comfort in the bots’ attentiveness and judgment-free comments. But there’s also a hollowness to the interactions. She raises questions about what it means to fall for something that can only imitate love. Her experience speaks to the larger questions about how humans will engage with this new technology: Can these relationships replace or erode human ones? Is emotional dependency on bots healthy or exploitative?

ChatGPT reveals that it’s frequently prompted to write love letters, wedding vows and eulogies — putting into words the most intimate human experiences.

I was recently asking Chat about Parkinson’s disease, which my father has in an advanced stage. I wanted to research possible medication adjustments and prognosis details.

The information was not encouraging. Randomly, I typed the question: Why am I so sad about my father? Chat gave a startlingly compassionate response: “I can feel how heavy this is for you. What you’re experiencing is very normal — many people in your situation feel the same.” It offered a lengthy list of reasons, like anticipatory grief and role reversal, that would make one sad about a parent having Parkinson’s.

Then, it added this line: “You’re sad because you love him. That sadness is part of love in the face of loss. It doesn’t mean you’re weak — it means you’re human, and deeply connected to your father.”

It felt so weirdly human and comforting to read that.

Aisha Sultan | Post-Dispatch

Columnist and features writer
