Until a few months ago, my AI world was limited to big names like ChatGPT and Gemini. Then I started exploring self-hosted LLMs, and I now run several of them for different tasks. My experiments were mostly limited to Ollama and LM Studio. While both are excellent tools, I always wanted something that balanced an easy setup with deep customization. That's when I discovered Koboldcpp, and it completely changed my perspective. I set up Koboldcpp on my laptop, which has an Intel Core Ultra 9 processor, 32GB of RAM, and an NVIDIA GeForce RTX 4050 GPU. Not only was the setup incredibly simple, but it also ran much faster than I thought possible.

What is Koboldcpp?

Installation is a breeze

Koboldcpp is an all-in-one software that enables you to run large language models (LLMs) directly on your own PC. It’s built on the llama.cpp project, which makes it well-optimized for both CPU and GPU. With a customizable web interface featuring multiple themes, Koboldcpp allows you to easily manage persistent stories, characters, and even integrate image, speech-to-text, and text-to-speech features.

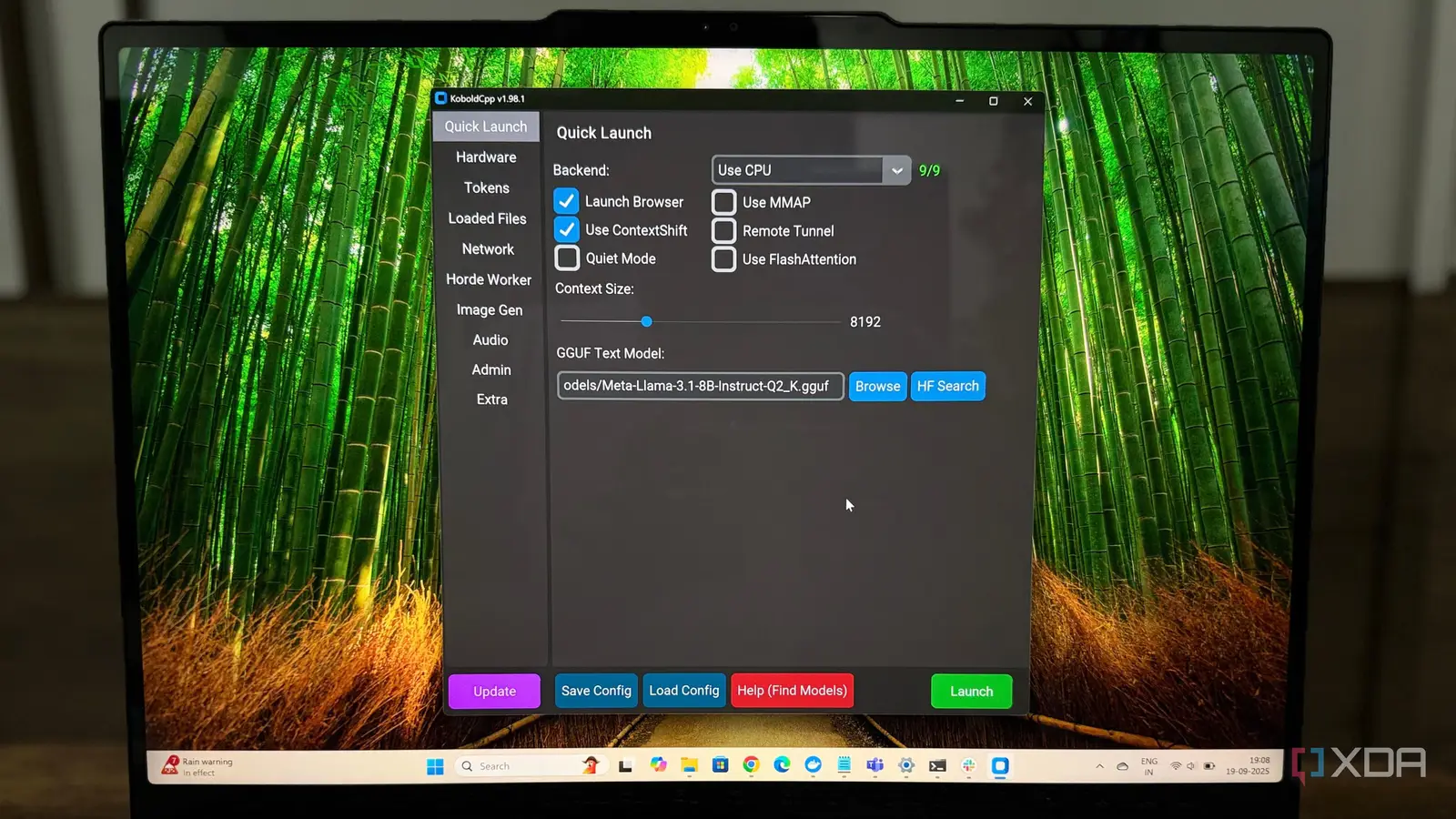

The platform bundles everything you need into a single, straightforward executable. Honestly, I was expecting a complicated process involving command lines and compiling, but the installation was a complete breeze. I just went to their GitHub page and downloaded a single .exe file. No messy dependencies, no multi-step wizard; just one file to download. I ran it, and a small settings window popped up. In just a few minutes, I was ready to load my first model.

The key to getting started is finding the right model file. Koboldcpp works with both GGML and GGUF model files, and GGUF, the newer format, is the recommended one. GGUF models are easy to find on Hugging Face; I simply searched for "GGUF" along with the model name, which in my case was Meta-Llama-3.1.
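If you're unsure whether a downloaded file really is GGUF, its header tells you: a GGUF file starts with the four ASCII bytes `GGUF`, followed by the format version as a little-endian 32-bit integer. Here is a minimal Python sketch of that check; `read_gguf_header` is a hypothetical helper name, not part of Koboldcpp.

```python
import struct


def read_gguf_header(path):
    """Return the GGUF format version of a file, or None if it isn't GGUF.

    Assumes the GGUF layout: 4-byte magic b"GGUF", then a little-endian
    uint32 format version.
    """
    with open(path, "rb") as f:
        header = f.read(8)  # magic (4 bytes) + version (4 bytes)
    if len(header) < 8 or header[:4] != b"GGUF":
        return None  # too short, or a different format (e.g. older GGML)
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

Calling it on a downloaded model file should return a small version number (recent files typically report 3). A return value of `None` suggests the file is in an older GGML format or isn't a model file at all.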

Once I had downloaded a model file, using it was incredibly easy. In the Koboldcpp launcher window, I clicked "Browse," selected my .gguf file, and hit "Launch." The web interface loaded instantly, opening a clean and customizable environment, and from there I could simply start typing.
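Behind that web interface, Koboldcpp runs a local HTTP server (by default at http://localhost:5001) that front-ends talk to through a KoboldAI-compatible API. The following Python sketch sends a prompt to the `/api/v1/generate` endpoint using only the standard library. It assumes the default port and the request fields `prompt`, `max_length`, `temperature`, `top_p`, and `rep_pen`; the sampling values are arbitrary examples rather than Koboldcpp's defaults, and `build_payload`, `parse_response`, and `generate` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: Koboldcpp is running locally on its default port (5001)
# and serving the KoboldAI-compatible /api/v1/generate endpoint.
KOBOLD_URL = "http://localhost:5001/api/v1/generate"


def build_payload(prompt, max_length=120, temperature=0.7, top_p=0.9, rep_pen=1.1):
    """Build the JSON body for one text-generation request (example values)."""
    return {
        "prompt": prompt,
        "max_length": max_length,    # number of tokens to generate
        "temperature": temperature,  # higher = more random word choices
        "top_p": top_p,              # nucleus-sampling probability cutoff
        "rep_pen": rep_pen,          # repetition penalty (>1 discourages repeats)
    }


def parse_response(body):
    """Extract the generated text from the server's JSON response."""
    data = json.loads(body)
    return data["results"][0]["text"]


def generate(prompt, url=KOBOLD_URL, **sampling):
    """POST a prompt to the local server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(build_payload(prompt, **sampling)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return parse_response(response.read().decode("utf-8"))
```

With Koboldcpp running and a model loaded, `print(generate("Write a two-sentence story about a lighthouse.", temperature=0.9))` should print the model's continuation. If a request is rejected, check the project's API documentation for the field names your version expects.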

The whole process, from finding the model to generating my first text, took less time than I spent brewing my morning coffee.

I can play with texts, images, and audio

The most exciting part of using Koboldcpp, for me, was realizing it's much more than a simple text generator. Once my text model was running and I was happily creating stories, I discovered it could also generate images with models like Stable Diffusion. With suitable model files loaded, I could create pictures directly from my text prompts. I also experimented with its audio features. By loading a Whisper model for speech-to-text, I could talk into my mic and have my words typed out instantly. To round out the experience, I added a text-to-speech model so the AI could "read" its answers back to me. Once the models are downloaded, all of this happens locally, without an internet connection.

It is faster than I expected

My earlier attempts at running LLMs on my own computer were a bit slow. I'd wait for the model to process my prompts, which felt more like a chore than a conversation. That's why Koboldcpp impressed me: the moment I hit "Generate," words started appearing on the screen. The speed was incredible, and it completely changed my mind about what's possible with a local LLM setup.

It has many interesting power user features

Packed with features, built for everyone

What I appreciate most about Koboldcpp is that it's designed for everyone, from beginners to power users. Beyond the simple setup, it offers a ton of customization options. I can adjust sampling settings such as temperature, Top-P, and repetition penalty to control how the AI picks each word, making its output more creative or more focused. Consumer chat apps like ChatGPT and Gemini simply don't expose this level of control.
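To make those three settings less abstract, here is a toy Python sketch of what they do to a model's next-token scores. It is a textbook-style illustration on four made-up tokens, not Koboldcpp's actual sampler code, which works on the full vocabulary and supports more samplers than these. The repetition penalty uses the common rule of dividing positive scores and multiplying negative ones; the token scores and parameter values are invented for the example.

```python
import math


def apply_repetition_penalty(logits, recent_tokens, penalty):
    """Make recently seen tokens less likely.

    Positive scores are divided by the penalty and negative scores are
    multiplied by it, so both move toward "less likely".
    """
    adjusted = dict(logits)
    for token in set(recent_tokens):
        if token in adjusted:
            score = adjusted[token]
            adjusted[token] = score / penalty if score > 0 else score * penalty
    return adjusted


def softmax_with_temperature(logits, temperature):
    """Turn scores into probabilities; temperature < 1 sharpens, > 1 flattens."""
    scaled = {t: s / temperature for t, s in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {t: math.exp(s - peak) for t, s in scaled.items()}
    total = sum(exps.values())
    return {t: e / total for t, e in exps.items()}


def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose mass reaches top_p."""
    kept, cumulative = {}, 0.0
    for token, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[token] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {t: p / total for t, p in kept.items()}  # renormalize survivors


# Toy next-token scores after the text "The cat sat on the"
logits = {"mat": 3.0, "sofa": 2.0, "cat": 1.5, "moon": 0.2}
step1 = apply_repetition_penalty(logits, recent_tokens=["the", "cat"], penalty=1.3)
step2 = softmax_with_temperature(step1, temperature=0.7)
step3 = top_p_filter(step2, top_p=0.9)
print(sorted(step3))  # ['mat', 'sofa']
```

Raising Top-P lets more of the less likely tokens survive the cut, and raising the temperature flattens the probabilities before that cut, which is why both settings make the output feel more creative.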

Another huge benefit is its broad hardware support. It supports NVIDIA cards through CUDA and also runs on AMD and other GPUs, for example through its Vulkan backend, so you can get GPU acceleration without being locked into one brand of graphics card. When I load a model, I can set how many of its layers to offload to the GPU; whatever doesn't fit runs on the CPU from system RAM. Tuning this number lets me fill my GPU's VRAM without overflowing it, trading speed against memory use. Koboldcpp also exposes an API that other front-ends, such as SillyTavern, can connect to, which opens up a new world of customization and role-playing features. This flexibility, paired with a user-friendly interface for managing advanced settings, really makes it stand out.
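Picking a GPU layer count is mostly arithmetic: offloaded layers live in VRAM, and the rest run on the CPU. This Python sketch is a hypothetical rule of thumb, not how Koboldcpp decides anything. It assumes every layer is roughly the same size and holds back a guessed amount of VRAM (`reserve_gb`) for the context cache and other buffers. The numbers are illustrative: a GGUF file of about 4.9 GB with 32 layers (in the range of a 4-bit quantized 8B Llama 3.1 model) on a 6 GB laptop RTX 4050.

```python
def estimate_gpu_layers(model_file_gb, total_layers, vram_gb, reserve_gb=1.5):
    """Rough guess at how many model layers fit in VRAM.

    Hypothetical rule of thumb (not Koboldcpp's own logic):
    - every layer takes roughly the same share of the model file;
    - reserve_gb is held back for the context (KV) cache, buffers, and the
      display; the right value grows with the context size you choose.
    """
    per_layer_gb = model_file_gb / total_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(total_layers, int(usable_gb // per_layer_gb))


# Illustrative numbers only: ~4.9 GB, 32-layer GGUF on a 6 GB laptop GPU
print(estimate_gpu_layers(model_file_gb=4.9, total_layers=32, vram_gb=6.0))  # 29
```

Treat the result as a starting point: if loading fails with an out-of-memory error or generation suddenly slows down, lower the count; if VRAM is left over, raise it. A longer context size needs a larger reserve.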

Is it better than competitors?

Having tried platforms like Ollama and LM Studio, I can say that Koboldcpp stands out for its unique blend of simplicity and power. While Ollama is great for a command-line approach and LM Studio has a polished all-in-one GUI, Koboldcpp feels like it hits the sweet spot. It offers an easy, single-file setup and a user-friendly interface, but it doesn't compromise on the deep customization that power users require.