AMD & OpenAI Partner Up To Deploy 6 Gigawatts of AMD Instinct GPUs For AI, Kicking Off With 1GW of Next-Gen MI450 Chips In 2H 2026

AMD & OpenAI have announced a strategic partnership to deploy 6 gigawatts of AMD Instinct GPUs, beginning with next-gen MI450s in 2H 2026.

OpenAI To Power Its Massive 6 Gigawatt AI Infrastructure With AMD’s Instinct GPUs Starting With 1GW of Next-Gen MI450s

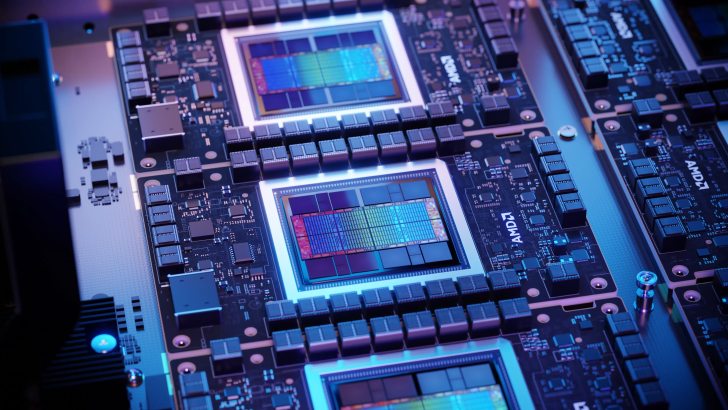

The announcement is a massive win for AMD: OpenAI, one of the leading names in AI, will use AMD's latest Instinct accelerators to power up to 6 gigawatts of AI infrastructure. It also gives us a time frame for the next-gen MI400 series accelerators, such as the MI450, which will pack up to 40 PFLOPS of AI compute, 432 GB of HBM4 memory, and 19.6 TB/s of memory bandwidth. OpenAI's first deployment, starting in 2H 2026, will use these GPUs, while the wider infrastructure will also house other Instinct accelerators such as the MI300 and MI350 series.

Press Release: AMD and OpenAI today announced a 6 gigawatt agreement to power OpenAI’s next-generation AI infrastructure across multiple generations of AMD Instinct GPUs. The first 1-gigawatt deployment of AMD Instinct MI450 GPUs is scheduled to begin in the second half of 2026.

AMD’s strong leadership in high-performance computing systems and OpenAI’s pioneering research and advancements in generative AI place the two companies at the forefront of this important and pivotal time for AI.

Under this definitive agreement, OpenAI will work with AMD as a core strategic compute partner to drive large-scale deployments of AMD technology, starting with the AMD Instinct MI450 series and rack-scale AI solutions and extending to future generations. By sharing technical expertise to optimize their product roadmaps, AMD and OpenAI are deepening their multi-generational hardware and software collaboration that began with the MI300X and continued with the MI350X series. This partnership creates a true win-win for both companies, enabling very large-scale AI deployments and advancing the entire ecosystem.

As part of the agreement, to further align strategic interests, AMD has issued OpenAI a warrant for up to 160 million shares of AMD common stock, structured to vest as specific milestones are achieved. The first tranche vests with the initial 1 gigawatt deployment, with additional tranches vesting as purchases scale up to 6 gigawatts. Vesting is further tied to AMD achieving certain share-price targets and to OpenAI achieving the technical and commercial milestones required to enable AMD deployments at scale.

“We are thrilled to partner with OpenAI to deliver AI compute at massive scale,” said Dr. Lisa Su, chair and CEO, AMD. “This partnership brings the best of AMD and OpenAI together to create a true win-win, enabling the world’s most ambitious AI buildout and advancing the entire AI ecosystem.”

“This partnership is a major step in building the compute capacity needed to realize AI’s full potential,” said Sam Altman, co-founder and CEO of OpenAI. “AMD’s leadership in high-performance chips will enable us to accelerate progress and bring the benefits of advanced AI to everyone faster.”

“Building the future of AI requires deep collaboration across every layer of the stack,” said Greg Brockman, co-founder and president of OpenAI. “Working alongside AMD will allow us to scale to deliver AI tools that benefit people everywhere.”

“Our partnership with OpenAI is expected to deliver tens of billions of dollars in revenue for AMD while accelerating OpenAI’s AI infrastructure buildout,” said Jean Hu, EVP, CFO, and treasurer, AMD. “This agreement creates significant strategic alignment and shareholder value for both AMD and OpenAI and is expected to be highly accretive to AMD’s non-GAAP earnings-per-share.”

Through this partnership, AMD and OpenAI are building the infrastructure to meet the world’s growing AI demands by combining world-class innovation and execution to accelerate the future of high-performance and AI computing.