After raising $750 million in new funding, Groq Inc. is carving out a space for itself in the artificial intelligence inference ecosystem.

Groq started out developing AI inference chips and has since expanded into software with GroqCloud, its cloud inference platform. The company now operates in two markets: helping developers and innovators power applications with AI, and managing sovereign AI deployments for international clients.

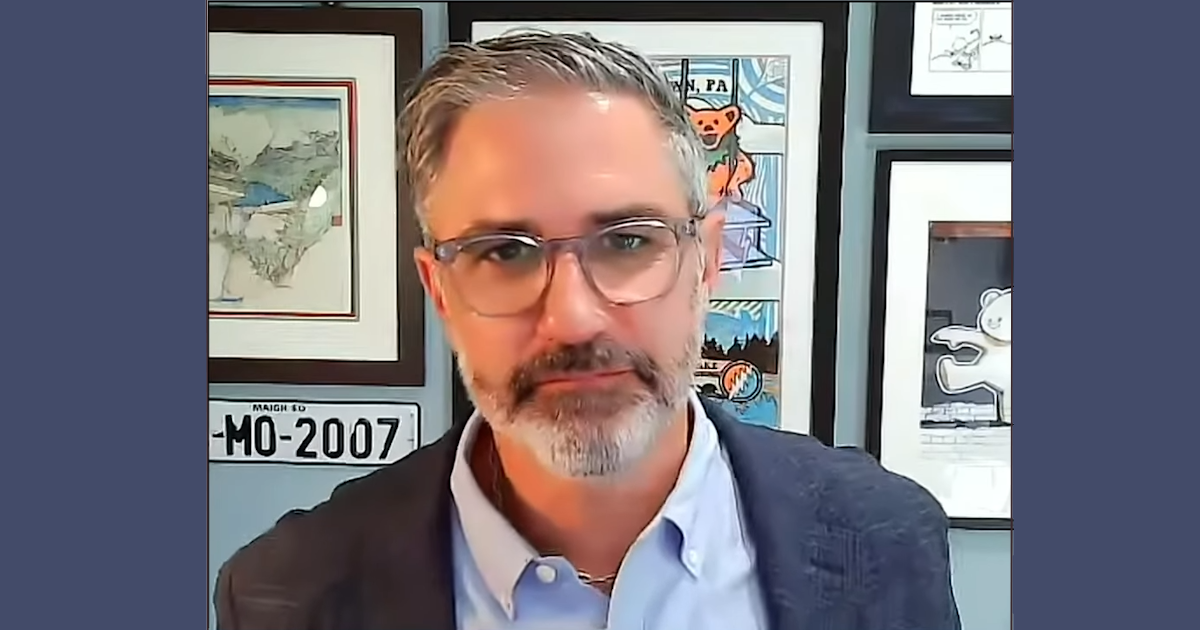

“There’s all these native services that customers are asking for that we’re starting to put into Groq,” said Chris Stephens (pictured), vice president and field chief technology officer at Groq. “We just launched native MCP [Model Context Protocol] service, bringing more and more capabilities into the inference stack so that the thing that customers are getting out of the box is a layer that’s solving a whole bunch of those infrastructure problems.”

Stephens spoke with theCUBE’s John Furrier at theCUBE + NYSE Wired: AI Factories – Data Centers of the Future event, during an exclusive broadcast on theCUBE, SiliconANGLE Media’s livestreaming studio. They discussed Groq’s system of inference and how the enterprise AI market is developing.

Groq’s position in the AI market

Groq, which recently launched projects in the Middle East and Europe, likens deploying its system to drop-shipping an AI inference compute center. The company plans to build on its work with Bell Canada, for which it managed a sovereign AI network across six sites, and it is fielding interest from other national telecommunications companies, according to Stephens.

“The large government entities, large scale enterprises across Canada already trust Bell with their networking,” he said. “It’s a natural extension to add to that inference compute and … Groq naturally as a part of that. We’re seeing the same thing play out with other similar telcos in conversations we’re having in other parts of the world.”

The AI market is evolving rapidly. Stephens foresees competition between incumbent enterprise application vendors such as SAP SE and AI-native startups that want to disrupt the current software hierarchy. Whichever side prevails, Groq has positioned itself squarely in inference, where use cases are emerging in workflow automation and customer service.

“Most organizations, governments, large entities, startups, innovators, all in between are not likely to be doing training,” he said. “They’re going to be doing inference. Every single time a customer’s interacting with your application powered by gen AI, that’s inference. We all know that and we’ve seen a solidification of that as a market over the past year and a half.”

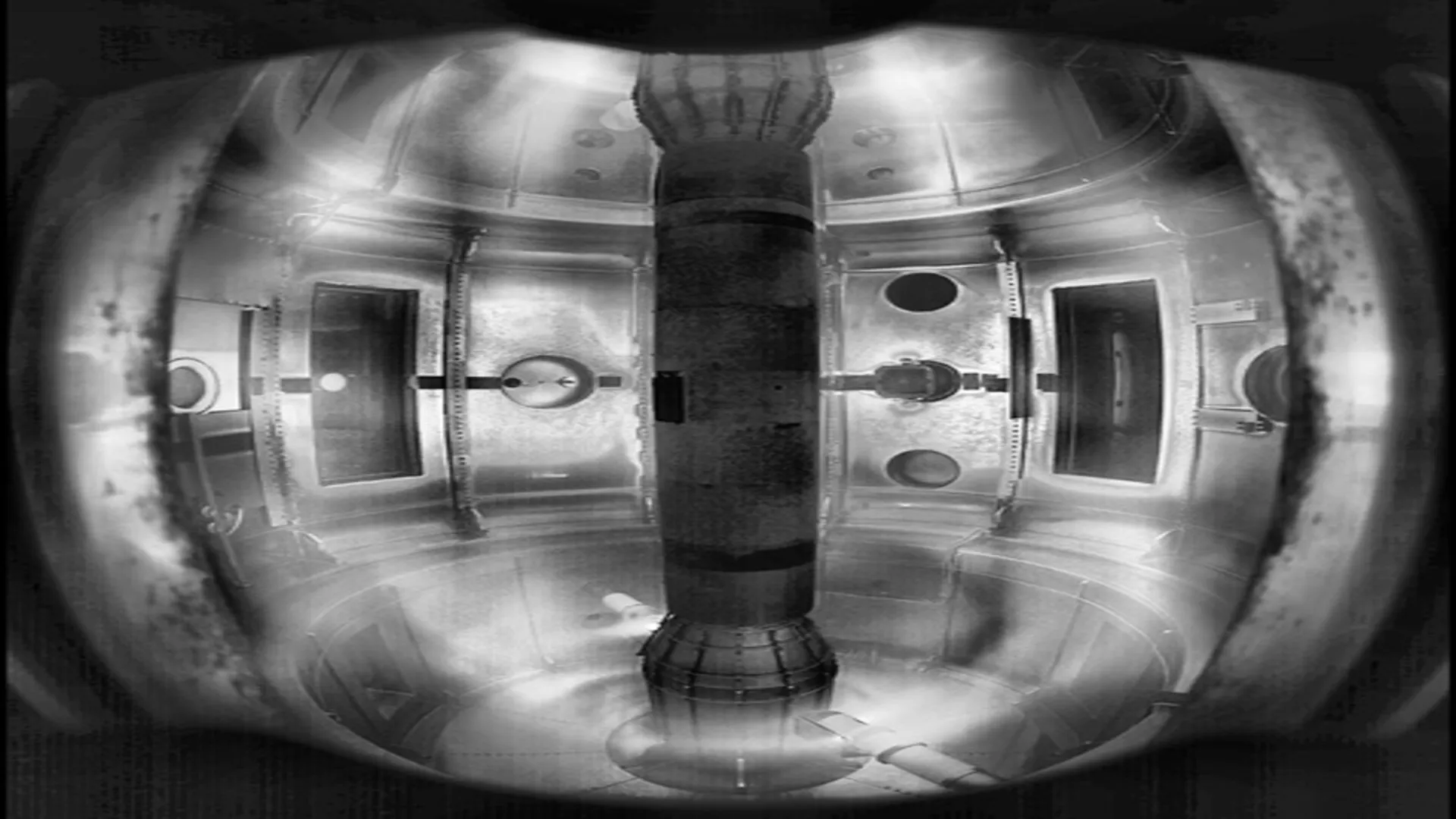

Stephens sees Groq’s hardware layer as a significant advantage in the current market, saying it lets the company run more data centers while using less energy than its competitors. Still, he emphasized that even as Groq continues to innovate on hardware, what the company really offers is an entire inference system.

“What you’re consuming is the system,” Stephens added. “The system is the management of the chips, the optimization of all these different model architectures, be they transformers, be they diffusion, you name it, onto that system of chips through our compiler and then accessing that in a way that your engineering teams are familiar with.”

Here’s the complete video interview, part of SiliconANGLE’s and theCUBE’s coverage of theCUBE + NYSE Wired: AI Factories – Data Centers of the Future event:

Photo: SiliconANGLE