I usually try to self-host as many tools as possible, but until recently, I didn’t see the point in doing so for an AI tool. The common reasons for self-hosting, such as privacy, security, control over your data, and air-gapped networks, are all valid, especially for enterprise or information security (infosec) use. Outside of those cases, though, it is harder to see the practical benefits of running AI tools locally. I decided to try it anyway by self-hosting an AI-powered research assistant called Maestro, and I quickly understood why it matters.

What makes Maestro different is not just that it is open source or self-hosted. It is built to handle the entire research workflow, from collecting and organizing source material to producing detailed, citation-rich reports.

Getting Maestro up and running

Takes some effort, but it’s worth it

I didn’t want to host Maestro on my daily driver, so I pulled out an old Windows laptop that barely met the minimum requirements. Maestro runs in Docker and requires at least 16GB of RAM and 30GB of storage; however, I strongly recommend 32GB or more and a dedicated GPU if you plan to run local LLMs. If you want to mix local and cloud models, you will also need an API key for at least one AI provider, such as OpenAI or Anthropic.
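If you are unsure whether a machine clears that bar, a quick preflight check helps. The following is a minimal sketch, not part of Maestro: it assumes a Linux or WSL shell where /proc/meminfo is available, the function names are my own, and the thresholds are simply the 16GB and 30GB figures quoted above.

```shell
#!/usr/bin/env bash
# Preflight sketch: compare this machine against the minimums quoted in the article.
# Assumption: Linux or WSL, where /proc/meminfo exists. Helper names are hypothetical.
MIN_RAM_GB=16
MIN_DISK_GB=30

# meets_minimum RAM_GB FREE_DISK_GB -> exit status 0 when both minimums are met
meets_minimum() {
  local ram_gb=$1 disk_gb=$2
  [ "$ram_gb" -ge "$MIN_RAM_GB" ] && [ "$disk_gb" -ge "$MIN_DISK_GB" ]
}

# kb_to_gb_ceil KB -> whole GB, rounded up, because a "16GB" machine
# usually reports slightly less than 16 GiB of usable memory
kb_to_gb_ceil() {
  echo $(( ($1 + 1048575) / 1048576 ))
}

if [ -r /proc/meminfo ]; then
  ram_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  # Free space on the filesystem holding the current directory, in KB
  disk_kb=$(df -Pk . | awk 'NR==2 {print $4}')
  ram_gb=$(kb_to_gb_ceil "$ram_kb")
  disk_gb=$(( disk_kb / 1048576 ))
  if meets_minimum "$ram_gb" "$disk_gb"; then
    echo "OK: ${ram_gb}GB RAM, ${disk_gb}GB free disk"
  else
    echo "Below minimum: ${ram_gb}GB RAM, ${disk_gb}GB free disk"
  fi
fi

# Maestro runs in Docker, so the CLI has to be on PATH
command -v docker >/dev/null 2>&1 || echo "docker not found on PATH"
```

Run it from the drive where you plan to clone the repository, since the disk check looks at the current directory. Under WSL, the reported RAM is whatever WSL has been allotted, which can be less than the laptop's physical memory.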

Once it is running, however, Maestro is remarkably stable. The backend is built on FastAPI with asynchronous processing, and the frontend is a clean React interface served through Nginx. I have had it running continuously for some time with no major issues, and the performance has been more than sufficient.

Installing Maestro is straightforward. First, clone the repository and navigate into it:

git clone https://github.com/murtaza-nasir/maestro.git

cd maestro

Next, configure the environment. Maestro offers two ways to do this, and the interactive setup is the recommended one. Running ./setup-env.sh walks you through entering API keys for AI providers, configuring search providers, setting network parameters, and choosing deployment options. Once the environment is ready, start Maestro with Docker Compose:

docker compose up

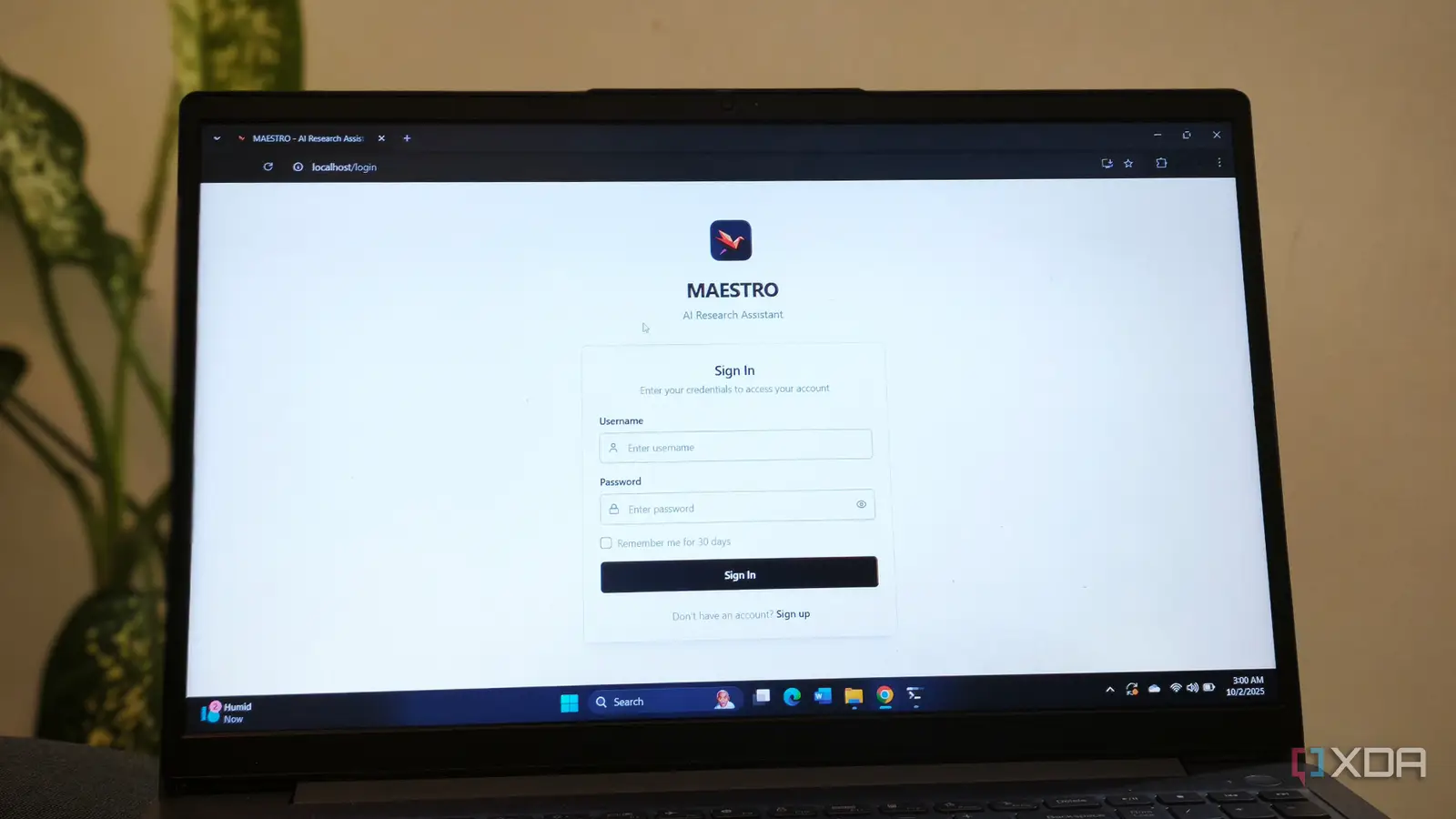

This command downloads the necessary Docker images, builds the containers, initializes the PostgreSQL database, and starts all services. After that, you can access Maestro through the web interface at http://localhost:3030 or view the API documentation at http://localhost:8001/docs.
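The first start can take a while, so it is handy to confirm that both endpoints actually answer before opening a browser. Here is a small sketch that probes the two URLs above with curl. The helper names are hypothetical, and treating any 2xx or 3xx status as "up" is my own assumption rather than anything Maestro documents.

```shell
#!/usr/bin/env bash
# Post-start sketch: probe the web interface and the API docs URL.
# Helper names are hypothetical; curl is assumed to be installed.

# status_ok CODE -> exit status 0 for any 2xx or 3xx HTTP status code
status_ok() {
  case "$1" in
    2[0-9][0-9]|3[0-9][0-9]) return 0 ;;
    *) return 1 ;;
  esac
}

# probe URL -> prints the HTTP status code, or 000 when nothing answers
probe() {
  local code
  code=$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$1" 2>/dev/null)
  echo "${code:-000}"
}

for url in http://localhost:3030 http://localhost:8001/docs; do
  code=$(probe "$url")
  if status_ok "$code"; then
    echo "up   ($code) $url"
  else
    echo "down ($code) $url"
  fi
done
```

If either URL stays down, docker compose ps shows which containers are running and docker compose logs -f streams their output. Once everything works, docker compose up -d starts the stack in the background so it does not tie up a terminal.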

Using Maestro has been a treat

It has all the features you’d need for research

Most AI tools are built around single interactions. You paste some text, ask a question, and get a response. Maestro approaches the problem differently. It treats research as an iterative, multistep process involving planning, investigation, synthesis, and writing. Instead of a single chatbot, it uses a system of multiple agents, each focused on a different part of the workflow.

The process starts with defining a research mission. You describe the problem or topic, outline the depth of analysis you want, and point the system toward any existing documents you would like it to reference. From there, Maestro takes over. Agents plan out a research strategy, search your document library and the web, reflect on findings, critique each other’s work, and iteratively refine the output until it is ready to deliver a structured, cited report.

One of Maestro’s strongest features is its document management and retrieval system. You can upload PDFs, Word files, and Markdown documents into a central library, organize them into groups, and let the platform index them using a robust RAG (Retrieval-Augmented Generation) pipeline powered by BGE-M3 embeddings.

Maestro also integrates with search providers like Tavily, LinkUp, and Jina to fetch and parse online content automatically, including JavaScript-heavy pages that most scrapers struggle with. Once the research phase is done, you can use the collaborative writing assistant to prepare a structured, well-cited document. Because it has access to your research history, document library, and all the sources it gathered along the way, the writing suggestions it generates are consistently grounded and relevant.

The built-in editor supports real-time collaboration, LaTeX for technical writing, and reference management tools to keep citations organized and up-to-date. I have used it to draft internal white papers, and the difference in quality compared to writing with a general-purpose LLM is significant. Instead of generic summaries, I get nuanced, source-backed arguments and context-aware phrasing that reflect the research actually conducted in the background.

Maestro is not for everyone, though. If your goal is to ask a few questions and get quick answers, you are better off sticking with a hosted chatbot. The tool demonstrates its true value when dealing with complex, ongoing research that requires synthesizing large volumes of data, maintaining context across sessions, and producing structured outputs.

You can just self-host things