AI Sparks a ‘DRAM Supercycle’ After Years of Stagnation, Driven by Massive HBM Adoption as Big Tech Rushes to Build Custom ASICs

AI has disrupted several parts of the semiconductor supply chain, and according to multiple reports, demand for DRAM is now expected to ‘skyrocket’ over the next few years.

DRAM Production Reportedly Won’t Keep Up With the Demand Coming From the AI Industry

For those unaware, the AI industry urgently needs DRAM production to scale up. Every AI accelerator relies on HBM, which is built from stacked DRAM dies, so each new AI cluster adds directly to DRAM demand. On top of that, AI giants are developing custom ASICs for their internal systems, which has significantly raised DRAM demand estimates. With NVIDIA also facing huge demand for its AI products, DRAM supply has become as critical a constraint as access to leading-edge chip nodes. Now, according to Chosun Biz and analyst notes from UBS (via Jukan), DRAM demand is set to rise exponentially over the next few years.

Let’s first look at what UBS says. According to its notes, OpenAI’s upcoming ASIC will use 12-Hi HBM3E, and that chip alone could generate demand for 500K-600K wafers per month (WPM) of DRAM capacity from 2026 to 2029, a massive chunk of total DRAM production. Since this demand comes from a single custom ASIC program, the opportunity for DRAM makers is enormous, especially given how urgently capacity needs to scale. Total DRAM industry capacity is forecast to reach 1.955 million WPM by 2026, but even that won’t be enough.
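To put the UBS figure in perspective, the short sketch below compares the reported 500K-600K WPM range against the 1.955 million WPM industry forecast for 2026. It is only back-of-the-envelope arithmetic on the numbers quoted above; comparing a demand range that spans 2026-2029 against a single-year capacity forecast is a simplifying assumption, not how UBS necessarily framed it.

```python
# Figures as reported via UBS/Jukan; these are analyst estimates, not measured data.
OPENAI_ASIC_WPM_LOW = 500_000    # low end of reported DRAM demand (wafers per month)
OPENAI_ASIC_WPM_HIGH = 600_000   # high end of reported DRAM demand (wafers per month)
INDUSTRY_WPM_2026 = 1_955_000    # forecast total DRAM capacity for 2026

def share_of_capacity(demand_wpm: int, capacity_wpm: int) -> float:
    """Return demand as a percentage of total capacity."""
    return 100 * demand_wpm / capacity_wpm

# Assumption: the 2026-2029 demand range is compared against 2026 capacity only.
low = share_of_capacity(OPENAI_ASIC_WPM_LOW, INDUSTRY_WPM_2026)
high = share_of_capacity(OPENAI_ASIC_WPM_HIGH, INDUSTRY_WPM_2026)
print(f"OpenAI ASIC share of 2026 DRAM capacity: {low:.1f}% to {high:.1f}%")
```

Under that assumption, a single ASIC program would account for roughly a quarter to nearly a third of the industry’s forecast 2026 output.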

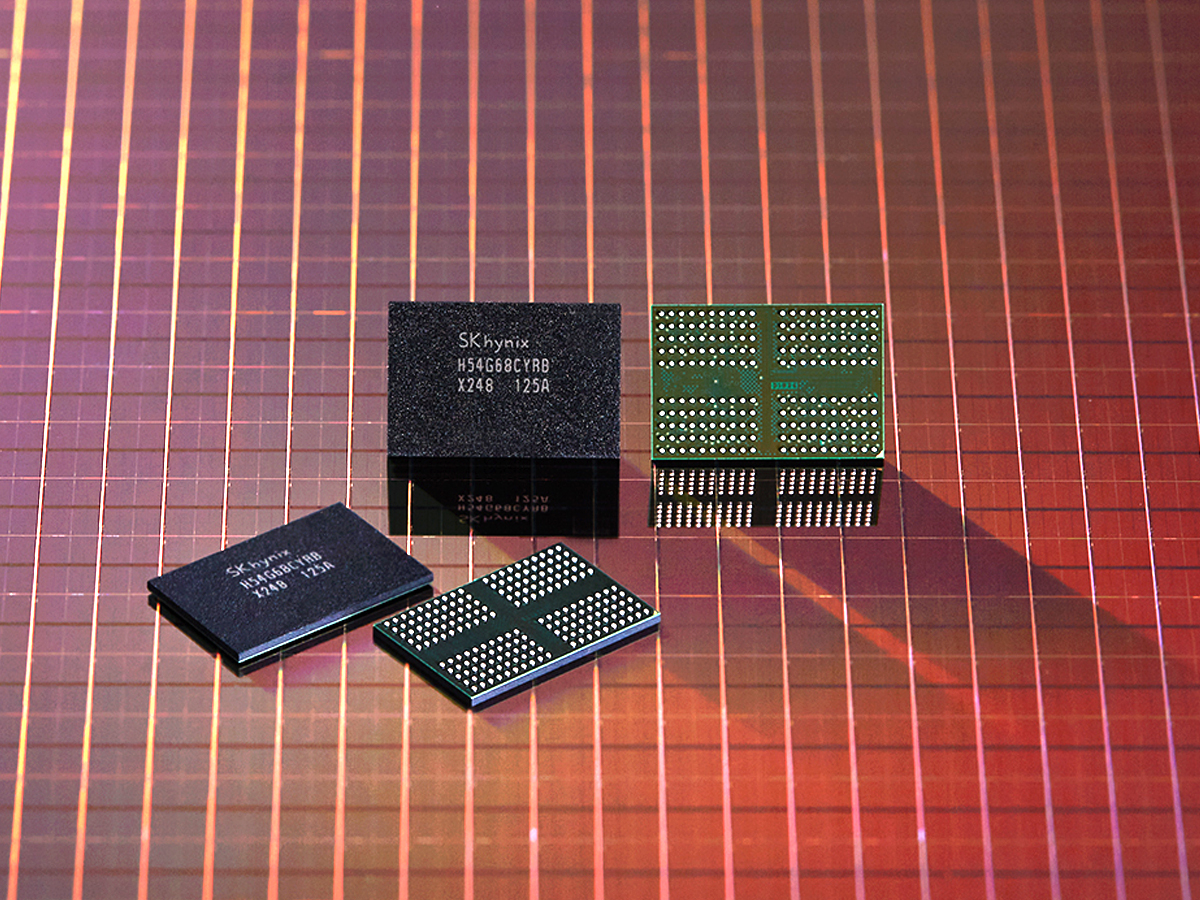

TrendForce reports that global DRAM suppliers’ inventory currently stands at just 3.3 weeks, the lowest level in seven years and well below the typical figure of around 10 weeks. The major DRAM manufacturers, Samsung, SK hynix, and Micron, have shifted their focus toward HBM by converting existing production lines and advancing their process technology, which is currently at the 1c node. It’s worth noting that DRAM demand isn’t limited to custom AI chips; data centers also consume large volumes, as discussed next.

OpenAI’s Stargate project alone is expected to consume a significant portion of global DRAM supply, as it is reportedly set to require 900,000 WPM of DRAM, at least 40% of total supply at current levels. This is unprecedented demand, and with DRAM production concentrated mainly in South Korea, it remains to be seen how manufacturers will meet it. Companies like Micron and SK hynix plan to diversify production by investing in the US, but can those facilities come online within the next few years?
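The 40% claim can be sanity-checked with the numbers already in this article. The sketch below, which simply reuses the reported figures, compares 900,000 WPM against the 1.955 million WPM forecast for 2026 and works backward to the largest current supply figure that would still make 900,000 WPM "at least 40%". The article does not state the current supply figure, so the ceiling is an inference, not a reported number.

```python
# Reported figures; all are estimates rather than measured capacity.
STARGATE_WPM = 900_000          # reported Stargate DRAM requirement (wafers per month)
INDUSTRY_WPM_2026 = 1_955_000   # forecast total DRAM capacity for 2026
CLAIMED_MIN_SHARE_PCT = 40      # "at least 40% of the total supply"

# Share of the 2026 forecast that Stargate alone would take.
share_2026 = STARGATE_WPM / INDUSTRY_WPM_2026

# If 900K WPM is at least 40% of current supply, current supply can be
# at most 900K / 0.40 (integer math avoids float rounding).
implied_max_supply = STARGATE_WPM * 100 // CLAIMED_MIN_SHARE_PCT

print(f"Stargate vs. 2026 forecast: {share_2026:.0%}")
print(f"Implied ceiling on current supply: {implied_max_supply:,} WPM")
```

Against the 2026 forecast, Stargate’s requirement works out to roughly 46% of capacity, which is consistent with the "at least 40%" figure.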

The DRAM industry will be worth watching, especially as next-generation technologies like HBM4 push the required production volumes even higher. Big Tech craves HBM right now, and the only way forward is to increase supply.