By Jack Wallen

Copyright zdnet


ZDNET key takeaways

- AI use continues growing and impacting everything.
- Using cloud-hosted AI has several drawbacks.
- Locally installed AI is easy to use and free.

AI isn’t going anywhere, and everyone knows that by now. People around the world are using AI for just about any reason or task you can imagine. I know people who consider AI chatbots to be friends. I also know people who look at AI as a tool for research. And then there are those who use AI to write correspondence and other types of documents.

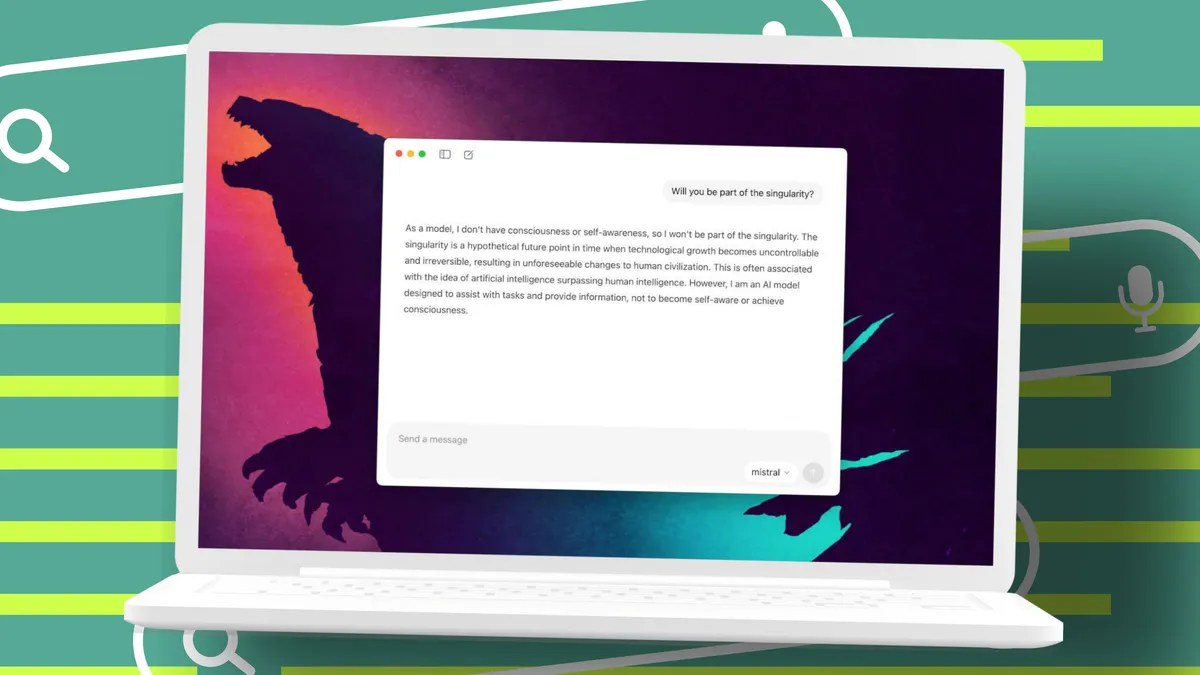

When most people use AI, they tend to use the likes of ChatGPT, Mistral, Copilot, Gemini, or Claude. Those services are cloud-hosted and certainly have their benefits. Others (like me) always opt for locally installed AI first.

I have my reasons.

What is locally installed AI?

As the name implies, locally installed AI means that you’ve installed everything necessary on your personal desktop (or server) and are able to use it just like you would use a cloud-hosted solution.

Yes, it does take a bit of knowledge to make this work, but it’s far easier than you might think. For instance, you could have Ollama and the Ollama desktop app installed in less than five minutes. Once you’ve taken care of that, you can enjoy your own personal AI solution.
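To make the "personal AI solution" part a little more concrete: once Ollama is running, it serves a local HTTP API, and any program on your machine can talk to it. The minimal Python sketch below uses only the standard library. It assumes Ollama's default address (`http://localhost:11434`), its `/api/generate` endpoint, and that you have already downloaded a model. The model name `llama3.2` is only an example and is not something this article specifies.

```python
import json
import urllib.request

# Assumption: Ollama is running on this machine on its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> bytes:
    """Encode a non-streaming /api/generate request body as JSON bytes."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(payload).encode("utf-8")


def parse_reply(raw: bytes) -> str:
    """Pull the generated text out of a non-streaming /api/generate response."""
    return json.loads(raw.decode("utf-8"))["response"]


def ask_local_model(prompt: str, model: str = "llama3.2", url: str = OLLAMA_URL) -> str:
    """Send one prompt to the local Ollama server and return its answer.

    "llama3.2" is an example model name; use whichever model you have pulled.
    """
    req = urllib.request.Request(
        url,
        data=build_request(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Local models can take a while on modest hardware, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=120) as resp:
        return parse_reply(resp.read())


# Example usage (requires a running Ollama server):
# print(ask_local_model("In one sentence, why does local AI help privacy?"))
```

With the server running, `ask_local_model(...)` returns the model's answer as a plain string. The request goes to `localhost`, so the exchange never leaves your machine.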

But why would you?

Let’s chat.

1. Privacy

This is a big one for me. I’m a very private person and would rather not have my AI usage scraped and used by a third party. I don’t want a company using my AI interactions to train its LLMs, nor do I want third parties using my chats to build a profile of me for targeted ads.

That’s part of the beauty of local AI: you don’t have to worry about these things. Locally installed AI runs on your own hardware, so your queries aren’t sent to, saved by, or shared with a third party. When you go this route, anything you are researching stays on your machine instead of finding its way into someone else’s hands.

2. Cost

ChatGPT Plus costs $20 per month. That may not seem like much to some, but when you combine it with all of the other subscriptions you have, it adds up. And, like mobile plans of the early 2000s, if you go over your usage limits, it’ll cost you.

(Disclosure: Ziff Davis, ZDNET’s parent company, filed an April 2025 lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

Locally installed AI is free. Period. I installed Ollama and either Msty or the official Ollama app on all of my machines and haven’t paid a penny for AI since I started using it. Ask yourself this question: Why am I paying for a service that I could have for free? Save yourself twenty bucks a month and use locally installed AI.

3. Performance

Hear me out on this one. I realize that none of us has a data center at home that could match the power behind ChatGPT. At the same time, cloud-hosted AI comes with its own issues, such as its dependence on the speed of your internet connection. If your connection is slow, your AI chats will suffer.

With locally installed AI, you don’t have to worry about internet speeds, and you can enjoy a level of consistency that you won’t find otherwise. Even better, if you find your local AI chats are too slow, you can upgrade your computer’s RAM or GPU to speed things up; that’s not something you can do with cloud-hosted AI.

4. Offline capability

I’ve had instances where my internet connectivity is interrupted, but I can still interact with my locally installed AI. Why? Because locally installed AI doesn’t need an internet connection. Everything you need to use AI is there when you’re using it locally.

That offline capability can save you a lot of wasted time when you still need to do your research but don’t have an internet connection. I could shut down my LAN and still use AI on my desktop or my laptop. Of course, if I shut down my LAN, I can’t access the locally installed AI from a different machine (which I sometimes do).
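Reaching your local AI from a different machine means talking to the desktop over the LAN instead of `localhost`. The sketch below is one way to check whether that server is reachable. It assumes Ollama on the desktop has been configured to listen on the network (for example by setting the `OLLAMA_HOST` environment variable to `0.0.0.0`, since by default Ollama only accepts local connections). The IP address `192.168.1.50` is a hypothetical placeholder. The sketch queries Ollama's `/api/tags` endpoint, which lists installed models, and returns `None` when the server can't be reached, such as when the LAN is down.

```python
from __future__ import annotations

import json
import urllib.request

# Hypothetical LAN address of the desktop running Ollama; replace with your own.
# Assumption: on that desktop, Ollama was started with OLLAMA_HOST=0.0.0.0 so it
# accepts connections from other machines, not just from localhost.
SERVER_HOST = "192.168.1.50"
OLLAMA_PORT = 11434  # Ollama's default port


def base_url(host: str, port: int = OLLAMA_PORT) -> str:
    """Build the base URL for an Ollama server at host:port."""
    return f"http://{host}:{port}"


def model_names(tags_json: bytes) -> list[str]:
    """Extract model names from an /api/tags response body."""
    data = json.loads(tags_json.decode("utf-8"))
    return [m["name"] for m in data.get("models", [])]


def list_remote_models(host: str = SERVER_HOST, timeout: float = 3.0) -> list[str] | None:
    """Return the models installed on the remote Ollama server, or None if it
    can't be reached (for example, because the LAN is down)."""
    try:
        with urllib.request.urlopen(base_url(host) + "/api/tags", timeout=timeout) as resp:
            return model_names(resp.read())
    except OSError:
        # urllib.error.URLError and socket timeouts are both subclasses of OSError.
        return None
```

Calling `list_remote_models()` from the laptop prints something like a list of model names when the desktop is reachable, and `None` when it isn't. The desktop itself can still use its models over `localhost` either way.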

5. Environment

For me (and, I’m sure, many others), this is a big issue: the environmental impact of cloud-hosted AI. A paper from MIT had this to say on the subject:

“The computational power required to train generative AI models that often have billions of parameters, such as OpenAI’s GPT-4, can demand a staggering amount of electricity, which leads to increased carbon dioxide emissions and pressures on the electric grid.”

There are serious concerns about what AI will do to the environment over time. Not only do AI data centers consume a ton of electricity, but as a byproduct of that energy consumption, they also generate a lot of heat that is released into the environment. That is not sustainable, and it’s yet another reason why I’m glad I opted to use locally installed AI instead of any cloud-hosted option.
